Token Bucket Algorithm Explained

why rate limiting matters

every API or system has limits. without some control, a single user could flood your server with requests and take it down. rate limiting solves this by controlling how many requests pass through over time.

there are many algorithms for this—fixed window, sliding window, leaky bucket, and token bucket. among them, token bucket is one of the most popular because it balances steady traffic control with short bursts of requests.

what is the token bucket algorithm?

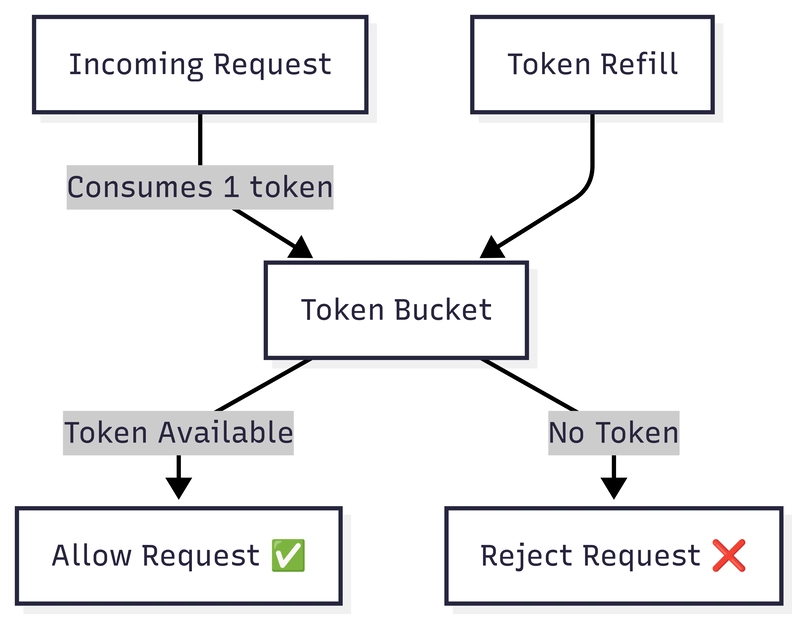

imagine a bucket that holds tokens:

- tokens are added at a fixed rate (e.g. 5 per second).

- each request must “spend” one token to pass.

- if tokens are available → request is allowed.

- if the bucket is empty → request is rejected (or delayed).

- the bucket has a maximum size, so tokens don’t grow forever.

this allows short bursts (if tokens have been saved up) while still enforcing an average rate over time.

diagram of token bucket

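here’s a simple flow of how it works: a request arrives → the bucket is topped up for the time elapsed → if a token is available, it is spent and the request is allowed; otherwise the request is rejected (or delayed).

and a refill view: tokens are added at the fixed refill rate until the bucket reaches its maximum size; any further tokens are discarded.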

the math behind it

let’s define:

- C = bucket capacity

- R = refill rate (tokens per second)

- T = elapsed time since last refill

then the number of tokens at any moment is:

tokens = min(C, tokens + R * T)

when a request comes in:

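- if tokens >= 1 → allow and tokens -= 1

- else → reject

the refill formula can leave a fractional token count, so the check is for one whole token rather than for anything above zero.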
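
the update rule and the admission check above can be sketched as one small function. this is a minimal sketch, not a definitive implementation: the name `tryConsume`, the state shape `{ tokens, last }`, and passing the clock in as `nowSec` are assumptions made for illustration. tokens are kept fractional here, so a request needs at least one whole token.

```javascript
// Sketch of: tokens = min(C, tokens + R * T), then spend one token if available.
// `tryConsume`, the state shape, and the explicit `nowSec` clock are illustrative assumptions.
function tryConsume(state, C, R, nowSec) {
  const T = nowSec - state.last;                      // elapsed time since last refill
  const tokens = Math.min(C, state.tokens + R * T);   // refill, capped at capacity
  if (tokens >= 1) {
    return { allowed: true, state: { tokens: tokens - 1, last: nowSec } };
  }
  return { allowed: false, state: { tokens, last: nowSec } };
}

// C = 2, R = 0.5 tokens/sec, bucket starts full at t = 0
let s = { tokens: 2, last: 0 };
const results = [];
for (const t of [0, 0, 0, 2]) {
  const r = tryConsume(s, 2, 0.5, t);
  results.push(r.allowed);
  s = r.state;
}
console.log(results); // [ true, true, false, true ]
```

the third request at t = 0 is rejected because the bucket is empty; two seconds later exactly one token has been refilled (0.5 × 2), so the fourth request passes.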

example run

- capacity = 10

- refill rate = 1 token/sec

timeline:

- time 0s → bucket full (10 tokens)

- user sends 5 requests instantly → 5 tokens left

- 5 seconds later (time 5s) → 5 tokens refilled, bucket = 10 again

- user sends 15 requests instantly → only 10 succeed, 5 are rejected

this shows how token bucket allows bursts up to capacity, but keeps the long-term rate limited.
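
the timeline above can be replayed in code with a hand-driven clock, so the numbers are reproducible without waiting. this is a sketch under assumptions: `makeBucket`, `take`, and `tokensLeft` are hypothetical names, and time is passed in explicitly as seconds instead of being read from the system clock.

```javascript
// Replays the example run: capacity = 10, refill rate = 1 token/sec.
// `makeBucket`, `take`, and `tokensLeft` are illustrative names; time is passed in (seconds).
function makeBucket(capacity, refillRate, startSec) {
  let tokens = capacity; // bucket starts full
  let last = startSec;
  return {
    // Try to send `n` requests at time `nowSec`; returns how many were allowed.
    take(n, nowSec) {
      tokens = Math.min(capacity, tokens + refillRate * (nowSec - last));
      last = nowSec;
      const allowed = Math.min(n, Math.floor(tokens));
      tokens -= allowed;
      return allowed;
    },
    tokensLeft: () => tokens,
  };
}

const bucket = makeBucket(10, 1, 0);
const firstBurst = bucket.take(5, 0);        // 5 requests at t = 0s
const leftAfterFirst = bucket.tokensLeft();  // tokens remaining after the first burst
const secondBurst = bucket.take(15, 5);      // 15 requests at t = 5s
console.log(firstBurst, leftAfterFirst, secondBurst, 15 - secondBurst);
// prints: 5 5 10 5
```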

pros and cons

pros

- allows bursts but enforces average rate

- smooth traffic shaping

- widely used in networking & APIs

cons

- slightly more complex than fixed window counter

- needs careful synchronization in distributed systems, since every server has to read and update the same token count atomically

code example (javascript)

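    class TokenBucket {
      constructor(capacity, refillRate) {
        this.capacity = capacity;     // max tokens
        this.tokens = capacity;       // current tokens (bucket starts full)
        this.refillRate = refillRate; // tokens per second
        this.lastRefill = Date.now(); // time (ms) up to which tokens have been credited
      }

      // add the whole tokens earned since the last refill, capped at capacity
      refill() {
        const now = Date.now();
        const elapsed = (now - this.lastRefill) / 1000; // seconds
        const added = Math.floor(elapsed * this.refillRate);
        if (added > 0) {
          this.tokens = Math.min(this.capacity, this.tokens + added);
          // advance only by the time these tokens account for, so the
          // leftover fraction still counts toward the next token
          this.lastRefill += (added / this.refillRate) * 1000;
        }
      }

      // spend one token if available; returns true when the request may pass
      allowRequest() {
        this.refill();
        if (this.tokens > 0) {
          this.tokens -= 1;
          return true;
        }
        return false;
      }
    }

    // usage
    const bucket = new TokenBucket(10, 1); // 10 tokens, refill 1/sec

    // one request every 200 ms (5/sec), faster than the 1/sec refill
    setInterval(() => {
      console.log("request allowed?", bucket.allowRequest());
    }, 200);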
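
when a request is delayed instead of rejected, the caller needs to know how long to wait. a minimal sketch, assuming the token count may be fractional: the wait is the missing part of one token divided by the refill rate. `secondsUntilToken` is a hypothetical helper and is not part of the class above.

```javascript
// How long until one whole token is available?
// `secondsUntilToken` is a hypothetical helper name used for illustration.
function secondsUntilToken(tokens, refillRate) {
  if (tokens >= 1) return 0;          // a token is already available
  return (1 - tokens) / refillRate;   // time needed to refill the missing part
}

console.log(secondsUntilToken(3, 1));   // 0
console.log(secondsUntilToken(0, 2));   // 0.5
console.log(secondsUntilToken(0.5, 1)); // 0.5
```

an HTTP API could round this value up to whole seconds and return it in a `Retry-After` header alongside a 429 response.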

real-world use cases

- API gateways and proxies (AWS API Gateway and Envoy use token buckets; Nginx’s request limiting uses the closely related leaky bucket)

- network routers (token bucket for traffic shaping)

- message queues (ensuring consumers don’t get overloaded)
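
in the API case, the limit usually applies per client and not globally. one way to sketch that, assuming a single process and an in-memory `Map`, is one bucket per client key. the names `buckets` and `allow`, the client ids, and the default numbers are all illustrative assumptions.

```javascript
// One bucket per client key, created on first use.
// `buckets`, `allow`, the client ids, and the defaults are illustrative assumptions.
const buckets = new Map();

function allow(clientId, nowMs, capacity = 3, refillPerSec = 1) {
  let b = buckets.get(clientId);
  if (!b) {
    b = { tokens: capacity, last: nowMs }; // new clients start with a full bucket
    buckets.set(clientId, b);
  }
  // refill for the elapsed time, capped at capacity
  b.tokens = Math.min(capacity, b.tokens + ((nowMs - b.last) * refillPerSec) / 1000);
  b.last = nowMs;
  if (b.tokens < 1) return false;
  b.tokens -= 1;
  return true;
}

const alice = [0, 0, 0, 0].map((t) => allow("alice", t)); // burst of 4 at t = 0
const bob = allow("bob", 0);                              // unaffected by alice's burst
console.log(alice, bob); // [ true, true, true, false ] true
```

this version never removes idle clients from the map and only works inside one process; a shared deployment would need eviction and a store that all servers can update atomically.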

comparing with other methods

- Fixed Window Counter → simpler, but can allow unfair bursts at the edges (up to about twice the limit in a short span that straddles two windows).

- Leaky Bucket → enforces a steady rate, but less flexible with bursts.

- Token Bucket → combines a steady average rate with short bursts, which is often a good balance for APIs.
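
the edge effect of the fixed window counter is easier to see side by side. the sketch below sends the same traffic through both limiters, each nominally set to 10 requests per second. all function names are illustrative, both limiters are simplified for the comparison, and time is passed in as integer milliseconds.

```javascript
// Same nominal limit (10 requests/sec) under two algorithms.
// All names are illustrative; time is passed in as integer milliseconds.
function fixedWindow(limit, windowMs) {
  let windowStart = 0;
  let count = 0;
  return (nowMs) => {
    const start = Math.floor(nowMs / windowMs) * windowMs;
    if (start !== windowStart) { // a new window resets the counter
      windowStart = start;
      count = 0;
    }
    if (count >= limit) return false;
    count += 1;
    return true;
  };
}

function tokenBucket(capacity, refillPerSec) {
  let tokens = capacity; // bucket starts full
  let last = 0;
  return (nowMs) => {
    tokens = Math.min(capacity, tokens + ((nowMs - last) * refillPerSec) / 1000);
    last = nowMs;
    if (tokens < 1) return false;
    tokens -= 1;
    return true;
  };
}

// 10 requests at t = 900ms, then 10 more at t = 1000ms (just across a window edge)
const times = [...Array(10).fill(900), ...Array(10).fill(1000)];
const countAllowed = (limiter) => times.filter((t) => limiter(t)).length;

console.log(countAllowed(fixedWindow(10, 1000))); // 20
console.log(countAllowed(tokenBucket(10, 10)));   // 11
```

the fixed window admits all 20 requests within 100 ms because the counter resets at the boundary, while the token bucket admits the first burst of 10 plus the single token refilled in those 100 ms.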

conclusion

the token bucket algorithm is one of the most practical ways to implement rate limiting. it’s simple enough to code, supports burst traffic, and ensures fair usage over time.

if you’re building an API or distributed system, token bucket should be one of your go-to options.