🚀 The Complete Guide to Prompt Engineering: From Zero-Shot to AI Agents

A comprehensive guide based on Google’s latest prompt engineering whitepaper

Introduction

Prompt engineering has evolved from a simple “art of asking questions” to a fundamental skill for anyone working with Large Language Models (LLMs). Whether you’re a developer, content creator, or just curious about AI, mastering prompt engineering can dramatically improve your AI interactions and unlock the full potential of models like GPT, Gemini, and Claude.

What is Prompt Engineering?

Think of prompt engineering as setting up an LLM for success. LLMs are prediction engines: they generate text one token at a time, predicting each next token from the input you provide and the tokens generated so far. The quality of your prompt therefore directly affects the quality of the output.

Bad Prompt: "Write about AI"

Good Prompt: "Write a 500-word technical blog post explaining transformer architecture to junior developers, focusing on the attention mechanism with practical Python examples"

Core Prompting Techniques

1. Zero-Shot Prompting

The simplest approach: describe the task directly, without providing any examples:

Classify this movie review as POSITIVE, NEUTRAL, or NEGATIVE:

"Her is a disturbing study revealing the direction humanity is headed if AI is allowed to keep evolving, unchecked. I wish there were more movies like this masterpiece."

2. Few-Shot Prompting

Provide one or more examples of the input and the expected output so the model can follow the pattern:

Parse pizza orders to JSON:

EXAMPLE: "I want a small pizza with cheese and pepperoni"

JSON: {"size": "small", "ingredients": ["cheese", "pepperoni"]}

EXAMPLE: "Large pizza, half margherita, half pepperoni"

JSON: {"size": "large", "type": "half-half", "ingredients": [["cheese", "tomato sauce", "basil"], ["cheese", "pepperoni"]]}

Now parse: "Medium pizza with mushrooms and olives"

JSON:
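If you build few-shot prompts in code, generating them from data keeps the examples consistent. The sketch below assembles the pizza-order prompt above. The function name build_few_shot_prompt is hypothetical, and serializing each example answer with json.dumps is one assumed way to guarantee that the example outputs you show the model are valid JSON.

```python
import json
from typing import Dict, List, Tuple

# Each example pairs a raw order with the JSON we want the model to produce.
Example = Tuple[str, Dict]

def build_few_shot_prompt(instruction: str, examples: List[Example], query: str) -> str:
    """Assemble the instruction, the worked examples and the new input into one prompt."""
    lines = [instruction, ""]
    for text, parsed in examples:
        lines.append(f'EXAMPLE: "{text}"')
        lines.append(f"JSON: {json.dumps(parsed)}")  # always valid JSON
        lines.append("")
    lines.append(f'Now parse: "{query}"')
    lines.append("JSON:")  # the model continues from here
    return "\n".join(lines)

examples = [
    ("I want a small pizza with cheese and pepperoni",
     {"size": "small", "ingredients": ["cheese", "pepperoni"]}),
    ("Large pizza, half margherita, half pepperoni",
     {"size": "large", "type": "half-half",
      "ingredients": [["cheese", "tomato sauce", "basil"], ["cheese", "pepperoni"]]}),
]
prompt = build_few_shot_prompt("Parse pizza orders to JSON:", examples,
                               "Medium pizza with mushrooms and olives")
print(prompt)
```

Adding, removing or reordering examples then only touches the examples list, which makes it easy to test how sensitive the model is to the choice of examples.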

3. System & Role Prompting

Assign the model a specific role or give it system-level context to shape its tone, focus, and expertise:

You are a senior software architect. Review this React component for performance issues and suggest optimizations. Focus on re-rendering problems and memory leaks.

[Component code here...]
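Chat-style APIs typically let you put the role in a separate system message and the actual request in a user message. A minimal sketch, assuming the widely used list-of-messages convention with role and content fields (exact field names, and whether the system prompt is a message or a separate parameter, vary by provider); build_messages is a hypothetical helper:

```python
from typing import Dict, List

def build_messages(role: str, task: str, content: str) -> List[Dict[str, str]]:
    """Put the persona in a system message and the request plus input in a user message."""
    return [
        {"role": "system", "content": role},
        {"role": "user", "content": f"{task}\n\n{content}"},
    ]

messages = build_messages(
    role="You are a senior software architect.",
    task="Review this React component for performance issues and suggest optimizations. "
         "Focus on re-rendering problems and memory leaks.",
    content="[Component code here...]",  # placeholder kept from the prompt above
)
print(messages)
```

Keeping the persona separate from the task makes it easy to reuse the same role across many requests.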

4. Chain of Thought (CoT)

For complex reasoning, ask the model to think step by step and show its intermediate reasoning:

When I was 3 years old, my partner was 3 times my age. Now I am 20 years old. How old is my partner? Let's think step by step.

Model Output:

1. When I was 3, my partner was 3 × 3 = 9 years old

2. Age difference = 9 - 3 = 6 years

3. Now I'm 20, so my partner is 20 + 6 = 26 years old

Advanced Techniques

Self-Consistency

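Sample the same CoT prompt several times at a non-zero temperature, extract the final answer from each response, and take the majority vote. This often gives better accuracy than a single greedy answer.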
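A minimal sketch of the voting step. It assumes each completion ends with a phrase like "The answer is 26" (as in the age puzzle above), and generate is a hypothetical placeholder for your own model call, made with temperature above 0 so the sampled reasoning paths differ:

```python
import re
from collections import Counter
from typing import Callable, Optional

def extract_answer(completion: str) -> Optional[str]:
    """Pull the final numeric answer out of a CoT completion (assumed 'answer is X' format)."""
    match = re.search(r"answer is\s*(-?\d+)", completion, re.IGNORECASE)
    return match.group(1) if match else None

def self_consistency(prompt: str, generate: Callable[[str], str], n: int = 5) -> Optional[str]:
    """Sample n reasoning paths and return the most common final answer."""
    answers = [extract_answer(generate(prompt)) for _ in range(n)]
    votes = Counter(a for a in answers if a is not None)  # ignore unparseable replies
    if not votes:
        return None
    return votes.most_common(1)[0][0]
```

Completions whose answer cannot be parsed are simply dropped from the vote rather than counted as a separate answer.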

Tree of Thoughts (ToT)

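Instead of following one linear chain of thought, the model explores several reasoning paths at once, like branches on a tree, evaluating intermediate steps and abandoning unpromising branches.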
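In Tree of Thoughts, an LLM usually both proposes the next steps and rates them. The sketch below keeps that search skeleton (breadth-first expansion with a beam that prunes weak branches, one of the search strategies used with ToT) but swaps the two LLM calls for hypothetical deterministic stand-ins on a toy puzzle: reach 24 from 1 using the operations +3, *2 and *3.

```python
from typing import Callable, List, Optional, Tuple

State = Tuple[int, Tuple[str, ...]]  # (current value, operations applied so far)

def tree_of_thoughts(root: State,
                     propose: Callable[[State], List[State]],
                     score: Callable[[State], float],
                     is_solution: Callable[[State], bool],
                     beam_width: int = 2,
                     max_depth: int = 4) -> Optional[State]:
    """Breadth-first search over 'thoughts', keeping only the best-scored branches."""
    frontier = [root]
    for _ in range(max_depth):
        # Expand every surviving branch into candidate next thoughts.
        candidates = [child for state in frontier for child in propose(state)]
        for cand in candidates:
            if is_solution(cand):
                return cand
        # Prune: keep only the beam_width most promising branches.
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
        if not frontier:
            break
    return None

# Hypothetical stand-ins for the LLM "propose" and "evaluate" calls.
TARGET = 24
OPS = {"+3": lambda v: v + 3, "*2": lambda v: v * 2, "*3": lambda v: v * 3}

def propose(state: State) -> List[State]:
    value, path = state
    return [(fn(value), path + (name,)) for name, fn in OPS.items()]

def score(state: State) -> float:
    return -abs(TARGET - state[0])  # closer to the target scores higher

def is_solution(state: State) -> bool:
    return state[0] == TARGET

print(tree_of_thoughts((1, ()), propose, score, is_solution))
```

Here the search returns (24, ('+3', '*3', '*2')): the beam keeps the two values closest to 24 at each level and reaches 1 → 4 → 12 → 24 on the third expansion. With a real model, propose and score would each be prompts.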

ReAct (Reason & Act)

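Interleave reasoning with actions: the model decides which external tool to call (for example, a search engine), observes the result, and feeds it into its next reasoning step.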

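# Example: building an AI agent that can search the web (via SerpAPI) and reason over the results.
# Legacy LangChain agents API; newer releases deprecate initialize_agent.
# Requires the google-search-results package, SERPAPI_API_KEY, and Google Cloud credentials.
from langchain.agents import AgentType, initialize_agent, load_tools
from langchain.llms import VertexAI

llm = VertexAI(temperature=0.1)  # low temperature for more deterministic reasoning
tools = load_tools(["serpapi"], llm=llm)

# ZERO_SHOT_REACT_DESCRIPTION chooses tools from their descriptions and runs the ReAct loop.
agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)
result = agent.run("How many children do Metallica band members have in total?")
print(result)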

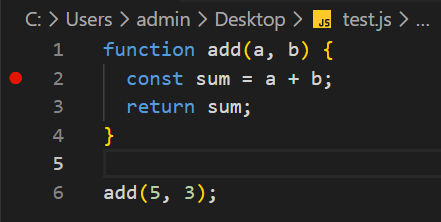
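To see what an agent framework does under the hood, here is a framework-free sketch of the ReAct loop: the model emits a Thought plus an Action, the program runs the named tool and appends an Observation, and the cycle repeats until the model gives a Final Answer. The Thought/Action/Observation text format, the toy calculator tool, and scripted_llm (which stands in for a real model call with canned replies) are all assumptions for illustration.

```python
import re
from typing import Callable, Dict

def calculator(expression: str) -> str:
    """Toy tool: evaluates 'a op b' for integers with +, - or *."""
    a, op, b = expression.split()
    ops = {"+": lambda x, y: x + y, "-": lambda x, y: x - y, "*": lambda x, y: x * y}
    return str(ops[op](int(a), int(b)))

TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator}
ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")
ANSWER_RE = re.compile(r"Final Answer: (.*)")

def react(question: str, llm: Callable[[str], str], max_steps: int = 5) -> str:
    """Alternate model reasoning (Thought/Action) with tool results (Observation)."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)  # the model sees everything so far and emits its next step
        transcript += step + "\n"
        answer = ANSWER_RE.search(step)
        if answer:
            return answer.group(1).strip()
        action = ACTION_RE.search(step)
        if action:
            name, arg = action.group(1), action.group(2)
            tool = TOOLS.get(name)
            observation = tool(arg) if tool else f"Unknown tool: {name}"
            transcript += f"Observation: {observation}\n"
    raise RuntimeError("No final answer within max_steps")

def scripted_llm(transcript: str) -> str:
    """Stand-in for a real model: returns canned ReAct steps based on the transcript."""
    if "Observation: 84" not in transcript:
        return "Thought: First compute 12 * 7.\nAction: calculator[12 * 7]"
    if "Observation: 87" not in transcript:
        return "Thought: Now add 3 to 84.\nAction: calculator[84 + 3]"
    return "Thought: I have the result.\nFinal Answer: 87"

print(react("What is 12 * 7 + 3?", scripted_llm))
```

With these canned replies the loop makes two tool calls and prints 87. Swapping scripted_llm for a real model call (and the calculator for a search tool) gives the same structure the LangChain agent automates.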

Prompting for Code

LLMs are generally strong at coding tasks. Here are some effective prompt patterns:

Code Generation

Write a Python function that:

1. Takes a folder path as input

2. Renames all files by prepending "draft_"

3. Handles errors gracefully

4. Returns a list of renamed files
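For reference, here is one plausible answer to that prompt, written as a hand-made sketch rather than actual model output. It assumes that "handles errors gracefully" means reporting a problem and skipping it instead of crashing, and that files already starting with draft_ should be left alone; the function name prepend_draft is hypothetical.

```python
from pathlib import Path
from typing import List

def prepend_draft(folder: str, prefix: str = "draft_") -> List[str]:
    """Rename every file in `folder` to prefix + name and return the new names."""
    root = Path(folder)
    if not root.is_dir():
        print(f"Not a directory: {folder}")
        return []
    renamed: List[str] = []
    # sorted() materializes the listing first, so renaming while looping is safe.
    for path in sorted(root.iterdir()):
        # Skip subdirectories and files that already carry the prefix.
        if not path.is_file() or path.name.startswith(prefix):
            continue
        target = path.with_name(prefix + path.name)
        if target.exists():
            print(f"Skipping {path.name}: {target.name} already exists")
            continue
        try:
            path.rename(target)
            renamed.append(target.name)
        except OSError as exc:  # e.g. permission denied
            print(f"Could not rename {path.name}: {exc}")
    return renamed
```

Comparing a model's output against a reference like this is a quick way to check whether it actually honored each numbered requirement in the prompt.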

Code Explanation

Explain this Python code line by line:

[paste your code]

Focus on the algorithm and potential edge cases.

Code Translation

Convert this Bash script to Python, maintaining the same functionality:

[bash code]

Best Practices

1. Provide Clear Examples

Examples are your most powerful tool. Use diverse, high-quality examples that cover edge cases.

2. Design with Simplicity

❌ "I'm visiting NYC with two 3-year-olds and want recommendations"

✅ "Act as a family travel guide. List 5 kid-friendly activities in Manhattan for toddlers (age 3), with practical tips for each location."

3. Be Specific About Output

❌ "Write about video games"

✅ "Generate a 3-paragraph blog post about the top 5 gaming consoles of 2023. Include sales figures and target audience for each console. Write in conversational style for tech enthusiasts."

4. Use Instructions Over Constraints

❌ "Don't write about pricing or technical specs"

✅ "Focus only on user experience and design aesthetics"

5. Experiment with JSON Output

For structured data, asking for JSON gives consistent, machine-readable formatting and can help limit hallucinations:

Return your analysis as JSON with this structure:
{
"sentiment": "positive|negative|neutral",
"confidence": 0.95,
"key_themes": ["theme1", "theme2"],
"summary": "brief summary"
}
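Asking for JSON is only half the job; the reply still needs checking before your code trusts it. A minimal sketch using only the standard library, assuming the structure above and that the model may wrap its reply in a Markdown code fence; the function name parse_analysis is hypothetical, and a schema library such as jsonschema or Pydantic would be a sturdier choice.

```python
import json

ALLOWED_SENTIMENTS = {"positive", "negative", "neutral"}
FENCE = "`" * 3  # a Markdown code fence, which some models wrap around JSON

def parse_analysis(raw: str) -> dict:
    """Parse a model reply and check it matches the requested JSON structure."""
    text = raw.strip()
    if text.startswith(FENCE):  # assumption: strip a fence and optional 'json' tag
        text = text.strip("`").removeprefix("json").strip()
    data = json.loads(text)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if data.get("sentiment") not in ALLOWED_SENTIMENTS:
        raise ValueError(f"unexpected sentiment: {data.get('sentiment')!r}")
    conf = data.get("confidence")
    if isinstance(conf, bool) or not isinstance(conf, (int, float)) or not 0 <= conf <= 1:
        raise ValueError(f"confidence must be a number in [0, 1], got {conf!r}")
    themes = data.get("key_themes")
    if not isinstance(themes, list) or not all(isinstance(t, str) for t in themes):
        raise ValueError("key_themes must be a list of strings")
    if not isinstance(data.get("summary"), str):
        raise ValueError("summary must be a string")
    return data
```

Because every failure surfaces as a ValueError, the caller can catch it in one place and re-prompt the model with the error message.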

Common Pitfalls to Avoid

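- The Repetition Loop Bug: The model gets stuck repeating the same word, phrase, or sentence. It can be triggered by poorly tuned sampling settings, with temperature set either too low or too high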

- Ambiguous Instructions: Be explicit about format, length, and style

- Ignoring Context Windows: Longer prompts ≠ better prompts

- Not Testing Edge Cases: Always validate with unusual inputs
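Because temperature and sampling settings come up repeatedly in this guide, here is a minimal sketch of how temperature, top-K and top-P reshape a next-token distribution. The toy logits and function names are hypothetical, and real inference stacks differ in the order they apply these filters; this one applies temperature, then top-K, then top-P, renormalizing after each cut.

```python
import math
import random
from typing import Dict, List, Optional, Tuple

def _normalize(pairs: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    total = sum(p for _, p in pairs)
    return [(tok, p / total) for tok, p in pairs]

def next_token_distribution(logits: Dict[str, float], temperature: float = 1.0,
                            top_k: Optional[int] = None,
                            top_p: Optional[float] = None) -> Dict[str, float]:
    """Turn raw logits into sampling probabilities (temperature, then top-K, then top-P)."""
    if temperature <= 0:
        # Temperature 0 is conventionally treated as greedy decoding.
        return {max(logits, key=logits.get): 1.0}
    # Divide logits by the temperature, then softmax (subtract the max for stability).
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    pairs = _normalize([(tok, math.exp(s - peak)) for tok, s in scaled.items()])
    pairs.sort(key=lambda kv: kv[1], reverse=True)  # most likely first
    if top_k is not None:
        pairs = _normalize(pairs[:top_k])  # keep only the K most likely tokens
    if top_p is not None:
        kept, mass = [], 0.0
        for tok, p in pairs:
            kept.append((tok, p))
            mass += p
            if mass >= top_p:  # smallest set whose probability reaches top_p
                break
        pairs = _normalize(kept)
    return dict(pairs)

def sample(dist: Dict[str, float], rng: random.Random) -> str:
    """Draw one token according to the given probabilities."""
    tokens, weights = zip(*dist.items())
    return rng.choices(tokens, weights=weights, k=1)[0]

logits = {"the": 2.0, "a": 1.0, "cat": 0.0}  # toy values
for t in (0.5, 1.0, 2.0):
    print(t, next_token_distribution(logits, temperature=t))
```

Lower temperatures concentrate probability on the most likely token (temperature 0 collapses to greedy decoding), while higher temperatures flatten the distribution and let unlikely tokens through.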

📝 Conclusion

Prompt engineering is both an art and a science. Start with these fundamentals:

- Master the basics: Zero-shot → Few-shot → CoT

- Configure properly: Understand temperature and sampling

- Iterate systematically: Document attempts and learn from failures

- Use structured outputs: JSON for consistency

- Test extensively: Edge cases reveal prompt weaknesses

The field is rapidly evolving, with new techniques like automatic prompt engineering and multimodal prompting on the horizon. Stay curious, keep experimenting, and remember: the best prompt is the one that consistently gives you the results you need.

What’s your experience with prompt engineering? Share your best prompting tips in the comments! 👇
