The AI Speed Illusion

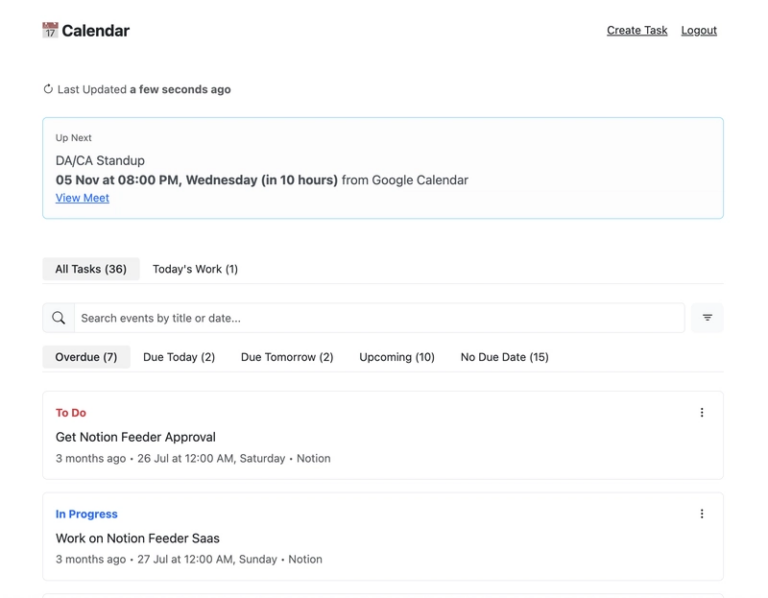

Why Writing Code Faster With AI May Actually Slow You Down

You know that rush when your AI assistant spits out 200 lines of “perfect” code before you even finish your latte?

Feels like unlocking a cheat code in VS Code. You’re Neo, the AI is Morpheus, and together you’re rewriting the Matrix… until the build fails, CI cries, and prod starts acting haunted.

That’s the real plot twist of 2025: AI made us faster, but it also made us slower where it actually counts.

The new paradox of dev life is that velocity without control just creates traffic jams: bigger PRs, longer reviews, security debt, and cognitive fatigue. The industry calls it the AI velocity paradox; I call it “deploying faster into your own mess.”

TL;DR

- AI speeds up code, not delivery.

- Testing, security, and review can’t keep up.

- Dev brains are hitting cognitive bottlenecks.

- The real fix isn’t “more AI”; it’s smarter pipelines and habits.

- Let’s unpack how we got here (and how not to burn out).

What’s changed: code sped up

AI didn’t just nudge the throttle; it slammed it.

Developers are now generating more code than ever, and the stats back it up: 63% of teams ship to production more often since adopting AI assistants, according to Harness’ 2025 report. Tools like Copilot, Cursor, CodeWhisperer, and Replit Ghostwriter have turned editors into idea cannons. You think in English, it writes in Python.

It’s easy to see the appeal. No more boilerplate. No more Stack Overflow safari.

Type “build me a REST API” and watch the scaffolding assemble like LEGO. Product managers cheer, Slack lights up with “🚀,” and for a moment it feels like we’re entering the post-coding era.

But every time the faucet opens wider, the pipes downstream start groaning.

Teams aren’t just producing more code; they’re producing more half-tested, AI-blended, multi-style code. And with eight to ten AI tools now floating around in a single workflow, context-switching hits harder than caffeine withdrawal.

There’s also the dopamine factor. The Cerbos.dev blog calls it “the productivity illusion”: instant output feels like progress even when you’re generating rework. It’s that moment when your AI nails a regex and you feel like a 10x dev until QA drops the “it fails on edge cases” bomb.

In short: we opened the speed gate, but forgot to reinforce the bridge.

We’re typing faster than ever… but delivery is starting to wobble.

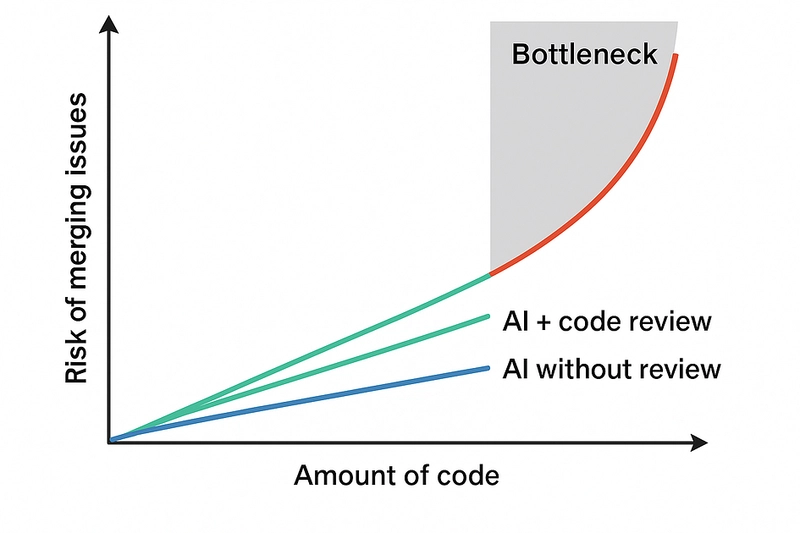

The bottleneck surprise: the AI velocity paradox

Here’s the twist nobody expected: speeding up coding didn’t actually speed up software.

We’re writing more, committing more, even merging more, but shipping? That’s still crawling through molasses.

Harness calls it the AI Velocity Paradox: dev output rockets up, yet release cycles stall. In their 2025 survey, 45% of AI-assisted deployments triggered new issues, and 72% of orgs admitted at least one production incident caused by AI-generated code.

So what’s happening?

It’s like we installed turbo engines on a car that still has drum brakes.

The code flies out of the repo straight into an unprepared CI/CD system, a burned-out QA team, and a terrified security pipeline.

The actual bottleneck just moved downstream.

A buddy of mine learned this the hard way. After his team adopted an AI pair-programmer, their pull requests tripled in volume.

“Awesome,” management said, “we’re crushing velocity targets.”

Except review times quadrupled because every diff was bloated, inconsistent, and touched ten files at once. Within a sprint, their PR queue looked like the DMV line at closing time.

CircleCI summed it up best:

“Writing code has never been the bottleneck. Delivering code safely has.” (CircleCI Blog)

Faster inputs ≠ faster outputs.

You’re pouring water into a funnel whose spout hasn’t grown. The pressure builds until something bursts, usually your test suite or your sanity.

So yeah, AI’s great at acceleration.

But acceleration without traction? That’s just wheel spin.
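If you want the funnel in numbers, here’s a minimal sketch. It assumes a toy model where review capacity is fixed and only authoring speed changes; `simulate_queue` and every figure in it are made up for illustration, not taken from any of the reports above.

```python
def simulate_queue(authored_per_week, review_capacity_per_week, weeks):
    """Return the review backlog (in PRs) at the end of each week."""
    backlog = 0
    history = []
    for _ in range(weeks):
        backlog += authored_per_week                       # new PRs land in the queue
        shipped = min(backlog, review_capacity_per_week)   # reviewers can only clear so many
        backlog -= shipped
        history.append(backlog)
    return history


# Hypothetical team: reviewers can handle 20 PRs a week.
before_ai = simulate_queue(authored_per_week=20, review_capacity_per_week=20, weeks=4)
after_ai = simulate_queue(authored_per_week=60, review_capacity_per_week=20, weeks=4)

print(before_ai)  # [0, 0, 0, 0]
print(after_ai)   # [40, 80, 120, 160]
```

Tripling the input didn’t triple what shipped. It shipped the same 20 PRs a week and grew a queue of 40 more every week.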

Dev-brain side effects: skills, velocity & vibe

Okay, time to talk about the part AI dashboards don’t measure: your brain.

Yeah, the squishy compiler between your ears that used to know how to regex-punch its way through anything is now outsourcing half its muscle memory to autocomplete.

Skill decay is real

When AI writes more of your code, you write less. Obvious, but sneaky.

After a few weeks of heavy Copilot use, I opened a blank file and froze like a deer in semicolons. Couldn’t remember the syntax for a simple iterator. Felt like I’d been speed-running tutorials instead of mastering the game.

That’s the quiet trade-off: less repetition → less recall → slower problem-solving when the assistant isn’t around.

Remember my “AI Killed My Coding Brain” post? Yeah, that wasn’t melodrama. That was foreshadowing.

Review fatigue hits harder

Reading AI-generated PRs is like reading your own handwriting after a night of bad decisions. You kinda understand it, but not really.

These diffs are bigger, touch more modules, and include logic the human author (you) doesn’t fully grok. Result? Longer review sessions, more mental load, and that “should I even approve this?” guilt that lives rent-free in every dev’s head.

The velocity illusion

Here’s the kicker: AI feels faster, even when it’s not.

Cerbos highlights a randomized trial run by METR: experienced devs using AI assistants took 19% longer than those coding without them, yet afterwards still believed the AI had made them about 20% faster. (Cerbos.dev)

Dopamine is a hell of a drug.

Mini-story time

I let my AI scaffold a front-end feature last month. It looked flawless until I hit deploy. Turns out it imported a deprecated library that broke auth in production. Spent an hour debugging a line I didn’t write.

That’s when it hit me: I’m not coding faster; I’m just fixing faster.

Risk surface & security debt

Let’s talk about the dark side of that “AI-writes-code-while-I-sip-coffee” life: the growing pile of invisible risks you’re shipping right along with it.

Vulnerabilities on autopilot

AI doesn’t just copy patterns; it replicates bad ones.

A 2025 study showed a 37.6% rise in critical vulnerabilities after multiple iterations of AI-generated code. (arXiv)

Another from Cobalt.io found that AI commits had significantly more privilege-escalation and hard-coded-secret issues.

Why? Because your friendly neighborhood LLM doesn’t “understand” risk; it just predicts tokens. So when insecure code exists in its training data… congratulations, it’s now in your repo too.

Cloud chaos & tool sprawl

Faster coding also means faster spending.

That same Harness report said 70% of orgs fear AI-driven cost overruns from ballooning dependencies, redundant services, and “test environments that never die.”

Every auto-generated microservice is a new AWS bill waiting to happen.

Multiply that by ten AI tools in your workflow, and suddenly you’ve built a distributed headache with 17 different auth tokens.

Compliance lag & review bottlenecks

81% of security teams admit their review pipelines can’t keep up with AI-powered dev velocity. (IT Pro)

So code ships fast, but audit trails, pen-tests, and approvals crawl.

You’re not accelerating; you’re just moving the waiting room from “dev” to “secops.”

The skyscraper analogy

Imagine building a 10-story tower in record time… but using the same old elevators and forgetting fire exits. That’s what happens when AI accelerates code but not the infrastructure around it.

Sure, it looks shiny, right up until the first fire drill.

Speed is fun.

Security debt isn’t.

And every unchecked AI commit is interest you’ll pay later in breaches, bugs, or burnout.

What to do: mindset, pipeline, tooling

Alright, enough doomscrolling. Let’s talk fixes.

Because “AI broke my delivery pipeline” isn’t exactly the badge of honor you want on your LinkedIn.

Mindset: pilot > autopilot

Rule #1: AI is your assistant, not your replacement.

It’s Clippy 2.0 with better syntax; treat it that way.

Keep your fundamentals sharp. Write small tools by hand, review your own output, and refactor without the crutch once a week.

Think of it like leg day for your dev brain: skip it too often, and suddenly you can’t lift a simple for-loop.

Questions to ask yourself before you ship:

- “Do I understand why this works?”

- “Can I debug it without the model?”

- “Would I trust this in prod on a Friday?”

If any answer is “uhh…,” maybe hit pause.

Pipeline: upgrade the whole flow

Your keyboard got faster; your CI/CD needs to catch up.

Automate what humans suck at: secret scanning, dependency checking, test coverage.

Add guardrails: fail PRs that exceed complexity limits, enforce linting on AI-generated files, tag those commits for later review.
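Here’s a rough sketch of what such a guardrail could look like as a CI step. Heads up on the assumptions: it uses diff size as a crude stand-in for “complexity,” the thresholds are invented, and the `PullRequest` shape and `ai_assisted` flag are hypothetical (you’d fill them from your own commit trailers or PR labels).

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    files_changed: int
    lines_changed: int
    ai_assisted: bool  # hypothetical flag, e.g. derived from a commit trailer or label


# Hypothetical limits; tune them to what your reviewers can actually digest.
MAX_FILES = 10
MAX_LINES = 400


def guardrail_violations(pr: PullRequest) -> list[str]:
    """Return human-readable reasons to fail the PR; an empty list means it passes."""
    violations = []
    if pr.files_changed > MAX_FILES:
        violations.append(f"touches {pr.files_changed} files (limit {MAX_FILES})")
    if pr.lines_changed > MAX_LINES:
        violations.append(f"changes {pr.lines_changed} lines (limit {MAX_LINES})")
    return violations


def labels_for(pr: PullRequest) -> list[str]:
    """Tag AI-assisted PRs so they can be found and audited later."""
    return ["ai-assisted"] if pr.ai_assisted else []


big_pr = PullRequest(files_changed=14, lines_changed=900, ai_assisted=True)
small_pr = PullRequest(files_changed=2, lines_changed=60, ai_assisted=False)

print(guardrail_violations(big_pr))    # two violations, so CI should fail this one
print(guardrail_violations(small_pr))  # []
print(labels_for(big_pr))              # ['ai-assisted']
```

In a real pipeline you’d exit non-zero when the list isn’t empty. The point is less the exact numbers and more that the limit is enforced by a machine, not by a tired reviewer.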

If review time or bug reopen rate spikes, throttle AI usage until you clear the backlog.

Scale testing, not typing.
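“Spikes” is vague, so here’s one hedged way to pin it down: compare the recent median review time against a baseline. The function name, the 1.5x trigger, and the sample hours are all assumptions for illustration; pick whatever ratio matches your team’s pain threshold.

```python
from statistics import median


def should_throttle(baseline_hours, recent_hours, max_ratio=1.5):
    """True when the recent median review time exceeds max_ratio times the baseline median."""
    return median(recent_hours) > max_ratio * median(baseline_hours)


# Hypothetical review times (hours from PR opened to approved).
baseline = [4, 6, 5]
bad_sprint = [12, 9, 20]
normal_sprint = [5, 6, 7]

print(should_throttle(baseline, bad_sprint))     # True
print(should_throttle(baseline, normal_sprint))  # False
```

Median is used instead of mean so one monster PR doesn’t trip the alarm on its own.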

CircleCI says only 6% of orgs have fully automated delivery.

That’s the real opportunity: let the bots handle the boring after you’ve handled the creative.

Tooling & habits: tactical dev moves

- Use smaller prompts: generate one function, not an entire module.

- Add a “human-check” branch before merging AI code.

- Keep dashboards tracking AI vs. human bug rates.

- Schedule “AI-off” coding sprints to rebuild muscle memory.

- Reward code clarity, not just commit count.
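The “AI vs. human bug rates” dashboard doesn’t need to be fancy. A minimal sketch, assuming you already tag each commit with where it came from and whether its bug got reopened (the `(origin, was_reopened)` tuples and the sample data below are made up):

```python
from collections import Counter


def reopen_rates(commits):
    """commits: iterable of (origin, was_reopened) pairs, origin being 'ai' or 'human'."""
    totals, reopened = Counter(), Counter()
    for origin, was_reopened in commits:
        totals[origin] += 1
        if was_reopened:
            reopened[origin] += 1
    # Share of commits per origin whose bugs were reopened.
    return {origin: reopened[origin] / totals[origin] for origin in totals}


# Hypothetical sample: four AI-tagged commits, four human ones.
sample = [
    ("ai", True), ("ai", False), ("ai", True), ("ai", False),
    ("human", True), ("human", False), ("human", False), ("human", False),
]

rates = reopen_rates(sample)
print(rates)  # {'ai': 0.5, 'human': 0.25}
```

Once that number exists, the argument stops being about vibes and starts being about a trend line.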

At a startup I worked with, we tagged every AI-generated commit. Two months later, those modules had 40% more bug reopenings.

We paused generation for a sprint, held review clinics, and review queue time dropped by half.

Velocity returned, but this time it was real.

Conclusion: faster isn’t freer

Here’s the uncomfortable truth: AI didn’t magically make us better engineers; it just made our mistakes happen faster.

Every dev I know has that moment where they look at an AI-generated file and think,

“Wait… did I actually write this, or just approve it?”

That’s not progress; that’s drift.

AI coding tools are incredible accelerators, but they’re also amplifiers.

If your process, tests, or judgment are weak, AI will multiply that weakness tenfold.

If your team treats it like an autopilot, you’ll just reach the crash site sooner.

The next generation of devs who win won’t be the ones typing fastest; they’ll be the ones who balance velocity with verification.

The ones who can step back, question outputs, and keep the craft intact.

You upgraded your keyboard.

Now upgrade your pipeline, your judgment, and your patience.

Your turn: what’s the worst “AI-generated disaster” you’ve shipped (or narrowly avoided)? Drop it in the comments and let’s compare scars.

Helpful resources

If you want to dig deeper or fact-check what we just unpacked:

- Harness State of AI in Software Engineering (2025): the report that coined the AI Velocity Paradox. prnewswire.com

- CircleCI Blog: The real reason your AI initiatives are failing. circleci.com/blog/ai-delivery-bottleneck

- Cobalt Security Study: Velocity vs Vulnerability, or why AI-generated code demands human-led security. cobalt.io/blog

- arXiv Paper: Security Degradation in Iterative AI Code Generation (2025). arxiv.org/abs/2506.11022

- Reddit & HN threads: real dev takes on skill decay and review fatigue. r/programming