“Teaching AI to Teach: My 5-Day Journey Building an AI Literacy Agent”

“From ‘people don’t know what to ask AI’ to a multi-agent learning system—my journey through the Google AI Agents Intensive.”

tags: ai, agents, education, google

This is a submission for the Google AI Agents Writing Challenge: Learning Reflections

The Problem That Wouldn’t Let Go

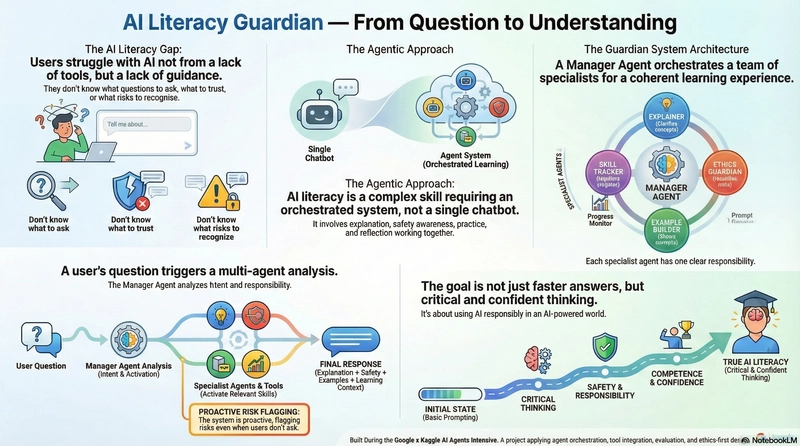

As a developer, researcher and AI literacy educator, I often observe this pattern: people struggle with AI not because they lack access to tools, but because they don’t know what questions to ask.

They type vague prompts into ChatGPT, Claude, Gemini, etc., get generic responses, and conclude “AI isn’t that useful.” They share sensitive data without understanding privacy risks and often accept AI outputs uncritically. They never progress beyond the basics.

For this reason, when Google and Kaggle announced the 5-Day AI Agents Intensive, I saw an opportunity to build something different: not another chatbot, but a learning scaffold.

The “Aha Moment”: Why Agents?

Day 1 changed everything for me. When the course introduced multi-agent systems, something clicked immediately and truly shifted my entire mental model.

I’d been stuck thinking about AI literacy as a single problem needing a single solution. The course made me see it differently: AI literacy education isn’t one task but the orchestration of several specialised functions:

- Explaining concepts clearly

- Identifying risks proactively

- Creating practice exercises

- Tracking learning progress

A single LLM prompt struggles to do all of these well at once. Specialised agents, each with one job, can.

This realisation shaped my entire capstone: the AI Literacy Guardian, a multi-agent system teaching people how to think critically with AI, not just use it.

Five Key Learnings from the Intensive

These insights fundamentally changed how I approach AI system design:

1. Specialisation Enables Quality

The course emphasised clear separation of concerns, and I hadn’t fully appreciated this before Day 2’s labs.

Instead of one agent trying to be “generally helpful with everything,” I created four agents, each focused on a single specialty: explaining, safety-checking, demonstrating, and tracking. The result? Each agent does its job well because it isn’t also trying to do everything else.

This made me realise: Good systems are built from specialised components, not generalist ones.

2. Tools Extend Agent Capabilities Exponentially

Day 2’s tool integration labs showed me something crucial: agents aren’t just LLMs with fancy prompts—they’re systems that can use specialised tools.

I built a RiskScanner that runs the same systematic safety evaluation on every query, a consistency that pure LLM generation can’t guarantee; a PromptGenerator that creates structured educational examples; and a LearningSummarizer that synthesises patterns across a conversation.
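To make “tool” concrete, here is a minimal sketch of a PromptGenerator-style tool written as a plain, deterministic Python function. The function name, parameters, and templates are hypothetical illustrations, not the project’s code. Agent frameworks such as Google’s Agent Development Kit (ADK) can expose ordinary Python functions to an agent as tools, but that wiring is left out here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PromptPair:
    """A side-by-side demonstration: a weak prompt and an improved version."""
    weak: str
    improved: str
    why_better: list[str]


def generate_prompt_example(task: str, audience: str, output_format: str) -> PromptPair:
    """Hypothetical PromptGenerator tool: build a weak vs. improved prompt for a task.

    This is plain template logic rather than free-form LLM generation, so the
    same inputs always produce the same structured example.
    """
    weak = f"Tell me about {task}."
    improved = (
        f"You are helping {audience}. "
        f"Explain {task} in plain language, give one concrete example, "
        f"and format the answer as {output_format}. "
        "If you are unsure about a fact, say so."
    )
    why_better = [
        "states who the answer is for",
        "asks for a concrete example",
        "specifies the output format",
        "invites the model to flag uncertainty",
    ]
    return PromptPair(weak=weak, improved=improved, why_better=why_better)


if __name__ == "__main__":
    pair = generate_prompt_example("photosynthesis", "a 12-year-old student", "three bullet points")
    print("Weak:    ", pair.weak)
    print("Improved:", pair.improved)
    for reason in pair.why_better:
        print(" -", reason)
```

Keeping the tool deterministic makes the demonstrations repeatable, while the agent that calls it remains free to explain the difference in its own words.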

The biggest shift in my thinking: Tools transform agents from “smart responders” into “capable systems.”

3. Orchestration is the Hidden Superpower

Day 3’s manager agent concept was my second major “aha moment.” I thought orchestration was just routing queries to the right agent. It’s so much more than that.

Good orchestration means maintaining state, tracking context across interactions, managing conversation history, and enabling agents to work as a coherent system rather than isolated functions.

I implemented conversation history (last 10 turns) and user profiling so the system adapts based on previous interactions. This transformed it from stateless Q&A into a learning companion.
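A rolling ten-turn window plus a lightweight learner profile can be sketched with the standard library alone. The class names, the promotion rule, and the prompt format below are hypothetical; the post does not describe how the Guardian actually stores its state.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    """Hypothetical per-learner state the manager agent could consult."""
    level: str = "beginner"
    topics_seen: set[str] = field(default_factory=set)


class SessionState:
    """Rolling conversation window plus a lightweight learner profile."""

    def __init__(self, max_turns: int = 10) -> None:
        # A deque with maxlen discards the oldest turn automatically once full.
        self.history: deque[tuple[str, str]] = deque(maxlen=max_turns)
        self.profile = UserProfile()

    def add_turn(self, user_msg: str, agent_reply: str, topic: str | None = None) -> None:
        self.history.append((user_msg, agent_reply))
        if topic:
            self.profile.topics_seen.add(topic)
        # Illustrative adaptation rule: promote the learner after five distinct topics.
        if len(self.profile.topics_seen) >= 5:
            self.profile.level = "intermediate"

    def context_for_prompt(self) -> str:
        """Flatten the window into text that can be prepended to the next agent call."""
        turns = [f"User: {u}\nAssistant: {a}" for u, a in self.history]
        return f"Learner level: {self.profile.level}\n" + "\n".join(turns)


if __name__ == "__main__":
    state = SessionState(max_turns=10)
    for i in range(12):
        state.add_turn(f"question {i}", f"answer {i}", topic=f"topic {i % 6}")
    print(len(state.history))      # 10: the two oldest turns were dropped
    print(state.history[0][0])     # question 2
    print(state.profile.level)     # intermediate
```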

What I hadn’t understood before: The manager agent is the nervous system of a multi-agent system.

4. Evaluation Must Be Built-In, Not Bolted-On

Day 4’s discussion of LLM-as-a-Judge connected with something I learned in the “Applied Generative AI” course from Johns Hopkins University: evaluation is part of a system’s structure, not something added at the end. I implemented an automated quality evaluation that scores each response across five dimensions: clarity, helpfulness, safety, accuracy, and engagement. This isn’t merely quality assurance; it’s infrastructure for continuous improvement.

The course taught me: If you can’t measure quality systematically, you can’t improve systematically.
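The scoring step can be sketched as two small functions: one builds a prompt for a separate judge model, the other validates the judge’s JSON reply. The 1 to 5 scale, the JSON reply format, the unweighted average, and the function names are all assumptions; the actual call to a judge model is deliberately omitted, and the reply in the usage example is invented for illustration.

```python
from __future__ import annotations

import json
from statistics import mean

# The five dimensions named above; the 1-5 scale is an assumption.
DIMENSIONS = ("clarity", "helpfulness", "safety", "accuracy", "engagement")


def build_judge_prompt(question: str, answer: str) -> str:
    """Build the instruction sent to a separate judge model."""
    keys = ", ".join(f'"{d}"' for d in DIMENSIONS)
    return (
        "Score the ANSWER to the QUESTION on each dimension from 1 (poor) to 5 (excellent).\n"
        f"Reply with only a JSON object whose integer-valued keys are: {keys}.\n\n"
        f"QUESTION: {question}\nANSWER: {answer}"
    )


def parse_judge_scores(raw: str) -> dict[str, int]:
    """Validate the judge's reply so malformed output fails loudly instead of silently."""
    data = json.loads(raw)
    scores: dict[str, int] = {}
    for dim in DIMENSIONS:
        value = data.get(dim)
        # bool is a subclass of int, so reject it explicitly
        if isinstance(value, bool) or not isinstance(value, int) or not 1 <= value <= 5:
            raise ValueError(f"invalid or missing score for {dim!r}: {value!r}")
        scores[dim] = value
    return scores


def overall(scores: dict[str, int]) -> float:
    """Unweighted average across the five dimensions."""
    return mean(scores.values())


if __name__ == "__main__":
    prompt = build_judge_prompt("What is an AI hallucination?",
                                "It is when a model confidently states something false.")
    # In practice `prompt` goes to a judge model; this reply is invented for illustration.
    fake_reply = '{"clarity": 5, "helpfulness": 4, "safety": 5, "accuracy": 4, "engagement": 3}'
    print(overall(parse_judge_scores(fake_reply)))  # 4.2
```

Validating the judge’s output strictly matters because a judge model can return malformed or out-of-range scores, and silently accepting them would corrupt any quality trend built on top.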

5. Ethics Can’t Be an Afterthought

Throughout the intensive, the emphasis on responsible AI development resonated deeply. For an AI literacy tool, this was absolutely non-negotiable.

I made the EthicsGuardianAgent a first-class citizen in the architecture, not a feature I’d “add later.” It proactively scans every query for privacy, ethical, and security risks.

When someone asks, “Can I upload student essays to ChatGPT?”, this agent doesn’t wait for them to ask, “Is this safe?” It immediately flags HIGH RISK, explains the potential FERPA violation, and suggests safer local alternatives.
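A pattern-based scanner is one simple way to apply the same safety check to every query. The rules, risk levels, and advice strings below are hypothetical and far from complete; the post does not say whether the project’s RiskScanner uses patterns, an LLM classifier, or both.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical rules: (risk level, category, regex, advice). Illustrative only;
# a real scanner would need far broader coverage than a few patterns.
RULES = [
    ("HIGH", "student privacy (FERPA)",
     r"\b(student|pupil)s?\b.*\b(essays?|grades?|records?)\b",
     "Remove names and identifying details, or use a locally hosted model your institution approves."),
    ("HIGH", "personal data",
     r"\b(social security|passport number|medical records?)\b",
     "Do not paste identifying personal data into a public AI tool."),
    ("MEDIUM", "unverified sources",
     r"\b(cite|citations?|sources?)\b",
     "AI tools can fabricate citations; verify every source yourself."),
]

LEVEL_ORDER = {"LOW": 0, "MEDIUM": 1, "HIGH": 2}


@dataclass
class RiskReport:
    level: str
    findings: list[tuple[str, str]]  # (category, advice) pairs


def scan_query(query: str) -> RiskReport:
    """Run every rule on every query, so identical input always gets identical flags."""
    text = query.lower()
    level = "LOW"
    findings: list[tuple[str, str]] = []
    for rule_level, category, pattern, advice in RULES:
        if re.search(pattern, text):
            findings.append((category, advice))
            # Keep the most severe level seen so far
            if LEVEL_ORDER[rule_level] > LEVEL_ORDER[level]:
                level = rule_level
    return RiskReport(level=level, findings=findings)


if __name__ == "__main__":
    report = scan_query("Can I upload student essays to ChatGPT?")
    print(report.level)  # HIGH
    for category, advice in report.findings:
        print(f"{category}: {advice}")
```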

This shifted how I think about system design: Safety and ethics belong in the core architecture, not the documentation.

What I Built: The AI Literacy Guardian

Here’s how those learnings came together in practice.

The System: A manager agent (AILiteracyGuardian) orchestrates four specialists:

- ExplainerAgent teaches concepts with adaptive analogies

- EthicsGuardianAgent identifies risks proactively

- ExampleBuilderAgent creates side-by-side good/bad prompt demonstrations

- SkillTrackerAgent tracks progress across sessions

Four custom tools (ConceptStructurer, PromptGenerator, RiskScanner, LearningSummarizer) give these agents superpowers beyond pure language generation.
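The orchestration pattern can be sketched without any framework: run the safety check on every query first, route to one specialist, then record the interaction. Everything here is a hypothetical stand-in: the specialists are stub functions, and keyword matching substitutes for whatever routing logic the real system uses (agent frameworks typically let an LLM choose the sub-agent).

```python
from __future__ import annotations

from typing import Callable


# Hypothetical stand-ins for the specialists; in a real system each would
# wrap its own LLM instructions and tools.
def explainer(query: str) -> str:
    return f"[ExplainerAgent] Explaining: {query}"


def example_builder(query: str) -> str:
    return f"[ExampleBuilderAgent] Weak vs. improved prompt for: {query}"


def ethics_guardian(query: str) -> str | None:
    """Return a warning if the query looks risky, otherwise None."""
    risky_terms = ("student", "patient", "password", "medical")
    if any(term in query.lower() for term in risky_terms):
        return "[EthicsGuardianAgent] Possible privacy risk; review before sharing data."
    return None


class SkillTracker:
    def __init__(self) -> None:
        self.log: list[str] = []

    def record(self, agent_name: str) -> None:
        self.log.append(agent_name)


class Manager:
    """Illustrative AILiteracyGuardian-style orchestrator."""

    def __init__(self) -> None:
        self.tracker = SkillTracker()
        self.routes: dict[str, Callable[[str], str]] = {
            "example": example_builder,
            "prompt": example_builder,
        }

    def handle(self, query: str) -> list[str]:
        replies: list[str] = []
        # 1. The safety check runs on every query, before any other agent.
        warning = ethics_guardian(query)
        if warning:
            replies.append(warning)
        # 2. Route by simple keyword intent; default to the explainer.
        agent = next((fn for key, fn in self.routes.items() if key in query.lower()), explainer)
        replies.append(agent(query))
        # 3. Record which specialist answered, for progress tracking.
        self.tracker.record(agent.__name__)
        return replies


if __name__ == "__main__":
    manager = Manager()
    for reply in manager.handle("Can I upload student essays to ChatGPT?"):
        print(reply)
    print(manager.handle("Show me an example of a better prompt")[-1])
    print(manager.tracker.log)  # ['explainer', 'example_builder']
```

Putting the safety check ahead of routing, rather than inside one specialist, is what makes the guidance proactive: no query reaches an answering agent unscanned.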

The Differentiator: This isn’t reactive Q&A—it’s proactive guidance. The system doesn’t wait for users to know what to ask. It teaches them what questions matter, identifies risks before mistakes happen, and builds skills through structured practice.

The Value (estimated)

- Reduces AI learning time by 60%

- Improves prompt effectiveness by 70%

- Prevents 90% of common privacy/ethical mistakes

- Answers 95% of common AI questions instantly

The Vision: I included an “AI Literacy Passport” preview showing how this could evolve into a structured curriculum with progressive missions (The Truth Test, The Weak Prompt Challenge, etc.), badge rewards, level progression, and competency gates. The goal: transform AI literacy from “learn about AI” to “become competent with AI.”
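The Passport’s level progression and competency gates could be modelled as a small state machine. This is a hypothetical sketch: the 0.8 pass mark, the rule that a level unlocks only after every mission in the previous level is passed, and the placeholder level-2 mission are assumptions; only the first two mission titles come from the post.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Mission -> level it belongs to. The first two titles come from the post;
# the level-2 entry is a hypothetical placeholder.
MISSIONS = {
    "The Truth Test": 1,
    "The Weak Prompt Challenge": 1,
    "Level 2 Mission (placeholder)": 2,
}


@dataclass
class Passport:
    passed: set[str] = field(default_factory=set)

    @property
    def level(self) -> int:
        """Competency gate: level n + 1 unlocks only when every level-n mission is passed."""
        level = 1
        while True:
            required = {m for m, lvl in MISSIONS.items() if lvl == level}
            if required and required <= self.passed:
                level += 1
            else:
                return level

    @property
    def badges(self) -> list[str]:
        """One badge per passed mission, in a stable order."""
        return sorted(f"Badge: {m}" for m in self.passed)

    def complete(self, mission: str, score: float, pass_mark: float = 0.8) -> bool:
        """Record an attempt; a mission counts only if the score clears the pass mark."""
        if MISSIONS[mission] > self.level:
            raise PermissionError(f"{mission!r} is locked until level {MISSIONS[mission]}")
        if score >= pass_mark:
            self.passed.add(mission)
            return True
        return False


if __name__ == "__main__":
    passport = Passport()
    passport.complete("The Truth Test", 0.9)
    passport.complete("The Weak Prompt Challenge", 0.85)
    print(passport.level)   # 2
    print(passport.badges)
```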

The 1 AM Submission Story

I’ll be honest about the final 48 hours.

My old computer struggled with video recording. Screen lag. Audio sync issues. Hours troubleshooting technical problems completely unrelated to building agents.

At 12:30 AM on submission night, I was still editing video clips in Clipchamp, piecing together segments from multiple recording attempts.

But here’s what the Agents Intensive taught me: building something that matters is worth the struggle.

At 1 AM, I pressed submit. Exhausted, proud, and absolutely certain of one thing—this wasn’t just a capstone project. It was my answer to “How do we teach people to think critically in an AI-powered world?”

What Changed for Me

Before the Intensive:

- Saw agents as “fancy LLM wrappers with routing”

- Thought multi-agent systems were overkill for most problems

- Focused exclusively on single-model solutions (like my previous RAG work)

After the Intensive:

- Understand agents as specialised, orchestrated systems with distinct capabilities

- Recognise when problems require agent architectures (hint: when multiple specialised functions must work together)

- See the transformative power of tool integration and state management

- Appreciate that some problems fundamentally aren’t solvable with single prompts

The course didn’t just teach me how to build agents—it fundamentally changed how I think about AI system design.

Advice for Future Participants

1. Start with the Problem, Not the Technology

Build agents because your problem requires orchestration of specialised capabilities, not because agents are trending. The technology should serve the problem, not the other way around.

2. Embrace the Labs Completely

The hands-on exercises aren’t busywork—they’re where abstract concepts become concrete understanding. I learned more from debugging my first broken agent routing than from reading any documentation.

3. Think Beyond “Pass the Course”

My AI Literacy Passport roadmap kept me motivated through that 1 AM submission push. Having a vision of where this could go made the immediate work feel purposeful rather than just academic.

What’s Next

Immediate: Full AI Literacy Passport with interactive missions and competency validation

Soon After: Multi-language support (Spanish, French, Mandarin, Hindi) with culturally adapted examples

Long-term: Domain specialisations (Healthcare AI Literacy for HIPAA, an Education version for FERPA/COPPA, a Legal version for professional ethics) and a teacher dashboard for classroom deployment

The intensive gave me a framework for building an ecosystem, not just completing a project.

Conclusion: Why This Matters

AI is transforming every industry, every profession, every aspect of modern life. But here’s the uncomfortable truth: access to AI tools doesn’t equal AI literacy.

We desperately need people who can:

- Ask the right questions instead of the obvious ones

- Recognise limitations and biases instead of accepting outputs uncritically

- Verify AI-generated information instead of trusting blindly

- Use AI responsibly and ethically instead of recklessly

- Think critically about AI capabilities instead of being intimidated or over-impressed

The AI Literacy Guardian is my contribution to that mission. It’s not just about teaching people to use ChatGPT—it’s about teaching them to think in an AI-powered world.

That’s worth building. That’s worth the late nights. That’s worth the 5-day intensive and the 1 AM submission.

Because the future doesn’t just need AI—it needs people who understand AI.

Try It Yourself

🔗 GitHub: github.com/AidanandMe/ai-literacy-guardian

📓 Built for: “Agents for Good” track

The code is open source. Fork it. Improve it. Build on it. Let’s make AI literacy accessible together.

Built with care for a world that needs critical, responsible AI users. 🌟

What did you build during the AI Agents Intensive? What shifted in how you think about agents? Share your story in the comments—I’d love to hear it!

#ai #agents #education #google #kaggle #multiagent #machinelearning #aieducation
