My SaaS Infrastructure as a Solo Founder

I built and run UserJot completely solo. No team, no contractors, just me.

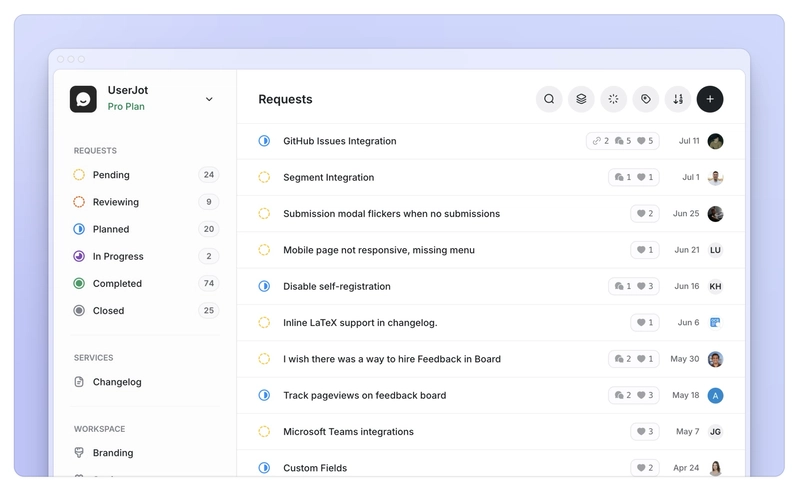

UserJot is a feedback, roadmap, and changelog tool for SaaS companies. It helps product teams collect user feedback, build public roadmaps, and keep users updated on what’s shipping. The infrastructure needs to handle significant traffic. In August alone we had 52,000 users hitting the platform, feedback widgets loading on customer sites, and thousands of background jobs processing.

Here’s the setup I’ve landed on after months of iteration.

The Setup

I have three frontends, all deployed on Cloudflare Workers:

- Astro for marketing pages, blog, and docs (mostly static with minimal JavaScript)

- TanStack Start for the dashboard (server-rendered, then becomes a SPA)

- TanStack Start for public feedback boards (same approach)

Why TanStack Start? It takes the good ideas from Remix and pushes them further. Next.js has gotten bloated with too many features and abstractions. TanStack Start is simpler and more predictable.

One backend cluster:

- Docker Swarm on Hetzner (US East)

- Node.js with TypeScript

- PostgreSQL

- Caddy for reverse proxy

That’s the entire infrastructure. Deployment runs through GitHub Actions, which handles CI/CD and deploys to Docker Swarm. Simple, boring, works.

Why No Redis

PostgreSQL handles everything:

- Job queues using pg-boss

- Key-value storage in a JSONB table

- Pub/sub with LISTEN/NOTIFY

- Vector search with pg-vector for finding duplicate feedback

- Session storage

- Rate limiting
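Most of those fit in ordinary tables. Rate limiting, for instance, can be a single atomic upsert. The sketch below is a fixed-window limiter under stated assumptions, not necessarily how UserJot does it: the `rate_limits` table, its columns, and the `isAllowed` helper are hypothetical, and `Queryable` is a minimal interface shaped like node-postgres's `pool.query(text, values)`.

```typescript
// Hypothetical schema (not from the post):
//   CREATE TABLE rate_limits (
//     key          text        NOT NULL,
//     window_start timestamptz NOT NULL,
//     count        integer     NOT NULL,
//     PRIMARY KEY (key, window_start)
//   );

// Minimal shape of a client such as node-postgres's Pool (assumption).
interface Queryable {
  query(text: string, values?: unknown[]): Promise<{ rows: any[] }>;
}

// One round trip: create this window's counter, or bump it atomically.
const INCREMENT_SQL = `
  INSERT INTO rate_limits (key, window_start, count)
  VALUES ($1, $2, 1)
  ON CONFLICT (key, window_start)
  DO UPDATE SET count = rate_limits.count + 1
  RETURNING count`;

// Fixed-window limiter: allows up to `limit` calls per `windowMs` per key.
async function isAllowed(
  db: Queryable,
  key: string,
  limit: number,
  windowMs: number,
  now: number = Date.now(),
): Promise<boolean> {
  // Align to a multiple of windowMs so every server computes the same window.
  const windowStart = new Date(Math.floor(now / windowMs) * windowMs);
  const { rows } = await db.query(INCREMENT_SQL, [key, windowStart]);
  return Number(rows[0].count) <= limit;
}
```

Because the increment happens inside PostgreSQL, concurrent requests can't race past the limit. Old windows accumulate, so a periodic `DELETE` from a scheduled job would keep the table small.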

pg-boss has been rock solid for job queues. It’s processing thousands of jobs daily: email drip campaigns, notification triggers, data syncing. It has some limitations compared to dedicated queue systems since it’s built on PostgreSQL, but nothing that’s actually mattered in practice.
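pg-boss builds on PostgreSQL's `FOR UPDATE SKIP LOCKED`, which lets many workers pull from the same table without claiming the same row. The sketch below shows only that core idea on a hypothetical `jobs` table; it is not pg-boss's real schema or API, which also handles retries, expiration, and scheduling.

```typescript
// Hypothetical table (not pg-boss's actual schema):
//   CREATE TABLE jobs (
//     id         bigserial   PRIMARY KEY,
//     queue      text        NOT NULL,
//     payload    jsonb       NOT NULL,
//     state      text        NOT NULL DEFAULT 'created',
//     created_at timestamptz NOT NULL DEFAULT now(),
//     started_at timestamptz
//   );

// Minimal shape of a client such as node-postgres's Pool (assumption).
interface Queryable {
  query(text: string, values?: unknown[]): Promise<{ rows: any[] }>;
}

interface Job {
  id: string;
  payload: unknown;
}

// Claim the oldest waiting job. SKIP LOCKED makes concurrent workers pass over
// rows another transaction has already locked instead of blocking on them.
const CLAIM_SQL = `
  UPDATE jobs SET state = 'active', started_at = now()
  WHERE id = (
    SELECT id FROM jobs
    WHERE queue = $1 AND state = 'created'
    ORDER BY created_at
    LIMIT 1
    FOR UPDATE SKIP LOCKED
  )
  RETURNING id, payload`;

async function claimNextJob(db: Queryable, queue: string): Promise<Job | null> {
  const { rows } = await db.query(CLAIM_SQL, [queue]);
  if (rows.length === 0) return null; // queue empty, or every waiting job is taken
  // node-postgres returns bigint columns as strings, so normalize the id.
  return { id: String(rows[0].id), payload: rows[0].payload };
}
```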

Could Redis do some of this better? Probably. But managing one database is simpler than managing two. When you’re solo, every additional service is something else that can break.

More importantly, every dependency directly affects your uptime. Two services means twice the things that can fail. Twice the things to monitor. Twice the things to back up. Twice the things to upgrade. When every request depends on all of them, your availability can be no better than your weakest service, and it's usually worse, because each service's downtime adds to the total. Every new service is another thing that can break at 2am.
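To put rough numbers on the uptime point: if a request needs every service to be up, and failures are independent (a simplifying assumption), the availabilities multiply. The 99.9% figures below are illustrative, not measurements from UserJot.

```typescript
// Availability of a chain where every service must be up (independent failures assumed).
function combinedAvailability(services: number[]): number {
  return services.reduce((total, a) => total * a, 1);
}

// Expected downtime over a 30-day month, in minutes.
function monthlyDowntimeMinutes(availability: number): number {
  return (1 - availability) * 30 * 24 * 60;
}

const postgresOnly = combinedAvailability([0.999]);
const postgresPlusRedis = combinedAvailability([0.999, 0.999]);

console.log(monthlyDowntimeMinutes(postgresOnly).toFixed(1)); // 43.2
console.log(monthlyDowntimeMinutes(postgresPlusRedis).toFixed(1)); // 86.4
```

Adding a second service at the same reliability roughly doubles expected downtime, which is the arithmetic behind "twice the things that can fail."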

Sure, at some point I might need Redis for caching. Or RabbitMQ for complex queue patterns. Or Kafka for event streaming. But that’s a very, very long way off. And honestly? I’m not looking forward to adding those dependencies.

Single Region, Global Users

The backend runs in one location: US East.

Three things make this work globally:

- Cloudflare Argo reduces latency by routing traffic through their network

- Optimistic updates in the UI make actions feel instant

- Prefetching loads data before users click
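The last two techniques are framework-agnostic. Here is a plain TypeScript sketch of each, not UserJot's actual code (libraries such as TanStack Query provide their own versions): `optimisticUpdate` applies a change locally before the server confirms and rolls back on failure, and the hypothetical `PrefetchCache` starts a request on hover so the click can reuse the in-flight promise.

```typescript
// Optimistic update: show the change immediately, undo it if the server rejects it.
async function optimisticUpdate<T>(
  getState: () => T,
  setState: (next: T) => void,
  apply: (current: T) => T,
  commit: () => Promise<void>,
): Promise<boolean> {
  const previous = getState();
  setState(apply(previous)); // the UI re-renders right away
  try {
    await commit(); // the slow round trip to the US East backend
    return true;
  } catch {
    setState(previous); // roll back to the snapshot on failure
    return false;
  }
}

// Prefetching: start loading on hover, reuse the same promise on click.
class PrefetchCache<T> {
  private readonly inflight = new Map<string, Promise<T>>();
  private readonly load: (key: string) => Promise<T>;

  constructor(load: (key: string) => Promise<T>) {
    this.load = load;
  }

  prefetch(key: string): void {
    if (this.inflight.has(key)) return;
    const promise = this.load(key);
    // Forget failed loads so a later click retries instead of reusing the error.
    promise.catch(() => this.inflight.delete(key));
    this.inflight.set(key, promise);
  }

  get(key: string): Promise<T> {
    this.prefetch(key); // no-op if a hover already started the request
    return this.inflight.get(key)!;
  }
}
```

With both in place, a click either finds its data already loaded or already on the way, and a vote or status change appears before the server has answered.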

The frontends render on Cloudflare’s edge, so users get HTML from a nearby location while the data loads from the backend.

Serverless Where It Makes Sense

I use serverless for the frontends because they’re stateless. They just render HTML and proxy API calls. No persistent connections, no background jobs, no state to manage.
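A minimal sketch of that "render and proxy" role as a Cloudflare Worker module. The `/api/` prefix and the `https://api.example.com` backend origin are placeholders, and the HTML response stands in for whatever the framework would render; the point is that nothing survives between requests.

```typescript
// Placeholder origin for the backend cluster (hypothetical).
const BACKEND_ORIGIN = "https://api.example.com";

// Map an incoming /api/* request onto the backend, keeping method, headers, and body.
function toBackendRequest(request: Request, backendOrigin: string): Request | null {
  const url = new URL(request.url);
  if (!url.pathname.startsWith("/api/")) return null;
  const target = new URL(url.pathname + url.search, backendOrigin);
  // Passing the original Request as the init copies its method, headers, and body.
  return new Request(target.toString(), request);
}

export default {
  async fetch(request: Request): Promise<Response> {
    const backendRequest = toBackendRequest(request, BACKEND_ORIGIN);
    if (backendRequest) return fetch(backendRequest);
    // Everything else would be server-rendered by the frontend framework.
    return new Response("<!doctype html><p>rendered page</p>", {
      headers: { "content-type": "text/html; charset=utf-8" },
    });
  },
};
```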

But the backend is a traditional server. You need connection pools for PostgreSQL. You need long-running processes for job queues. You need WebSocket connections for real-time features. Serverless isn’t great at these things.

This split works well:

- Frontends scale automatically on the edge

- Backend runs predictably on dedicated servers

The Trade-offs of Self-Hosting

I self-host PostgreSQL and the backend cluster. This takes more work than using managed services, but I get:

- Full control over PostgreSQL extensions and configuration

- Ability to run pg-boss, pg-vector, and other tools

- Predictable costs that don’t scale with usage

- No vendor lock-in

The downside: I handle backups, updates, and monitoring myself. If you value your time more than control, managed services might be better.

When This Makes Sense

This architecture works well when:

- You’re one person or a small team

- You want to own your infrastructure

- You’re comfortable with PostgreSQL and Docker

- You don’t need multi-region data replication

- Your features don’t require complex real-time sync

It probably doesn’t make sense if:

- You have a large team that needs managed services

- You need true multi-region presence

- You’re not comfortable managing servers

- You need specialized databases for specific features

What I’ve Learned

PostgreSQL can do more than you think. Job queues, caching, pub/sub. It handles all of these reasonably well. You might not need that Redis instance yet.

Stateless frontends simplify everything. When your edge workers just render and proxy, there’s no distributed state to worry about.

Single region is fine for most SaaS apps. With good caching and optimistic UI updates, most users won’t notice that your server is far away.

Boring technology works. Docker Swarm isn’t as fancy as Kubernetes, but it’s simpler and does the job.

Over-provision your servers. VMs are cheap. Having 4x the capacity you need means you never have to think about scaling during a traffic spike. Peace of mind is worth the extra $50/month.

The Reality of Maintenance

The hardest part was the initial setup. Understanding how all the pieces fit together takes time and experience. But once it’s running? I spend maybe 2-3 hours per month on infrastructure.

Everything else is building features and talking to users.

Moving Forward

Will this scale forever? No, but it’ll scale way further than most people think.

People seriously underestimate how much a single PostgreSQL instance can handle. Modern hardware is insanely powerful. You can scale vertically to machines with hundreds of cores and terabytes of RAM. PostgreSQL can handle millions of queries per second on the right hardware.

This setup could easily handle hundreds of thousands of active users, maybe millions, depending on your usage patterns. It might be all I ever need.

For now, and probably for years to come, PostgreSQL and a simple architecture are enough. I’m shipping features, talking to users, and growing the business. The infrastructure just works.

If you’re curious about UserJot, check it out. I’m always happy to chat about infrastructure decisions; find me on Twitter/X.