I Spent 40 Hours Writing Tests That Broke in 2 Weeks — A Confession Story

TL;DR

Some stories are worth sharing, even if they start with humongous setbacks! Here is my recent tech battle tale, along with the key lessons I took from it.

Prologue

Our team had just finished a major sprint refactor, and I, a product lead, volunteered to take charge of improving our end-to-end (E2E) test coverage.

The goal was to boost confidence in our regression suite and reduce bugs leaking into production. With so many tools available today for E2E testing, test case generation, coverage tracking, and report creation, I assumed it would be a cakewalk!

What followed was a frustrating lesson in false positives and surprises, and in how testing can either support or sabotage your development cycle, depending on how it’s approached.

By the way, this is not a post to blame or criticise testing — it’s quite the opposite. It’s a confession about what went wrong when I did everything “following the rules” and still watched 40 hours of test automation crumble in under two weeks.

The Intent and A Grand Start

We had a React-based web application with moderate unit test coverage but with very little E2E coverage. The few Cypress tests we had were outdated and flaky. Developers were increasingly hesitant to trust automation. My goal was to build a robust suite that would:

- Validate user flows across authentication, dashboard, and profile update features.

- Catch regressions before they reach QA or production.

- Run reliably as part of CI/CD.

- Serve as living documentation for critical workflows.

I was a man on a mission. So I upgraded our Cypress version, established folder structure conventions, and began building out test specs with clear naming, reusable helpers, tags, and test data.

I wrote 20+ scenarios. I created custom commands for login/logout, seeded test data via API, and even used data-test IDs to avoid weak selectors.
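Seeding test data through an API starts with building the payload. Here is a minimal sketch of a factory that gives every test unique, overridable data. The `TestUser` shape and the `buildTestUser` function are hypothetical illustrations, not our actual codebase.

```typescript
// Hypothetical shape of the user payload sent to a seeding endpoint.
interface TestUser {
  email: string;
  role: "admin" | "member";
  displayName: string;
}

let sequence = 0;

// Builds a unique user per call so parallel tests never collide on email.
// Overrides let a test pin only the fields it actually cares about.
function buildTestUser(overrides: Partial<TestUser> = {}): TestUser {
  sequence += 1;
  return {
    email: `e2e-user-${sequence}@example.test`,
    role: "member",
    displayName: `E2E User ${sequence}`,
    ...overrides,
  };
}

const admin = buildTestUser({ role: "admin" });
const member = buildTestUser();
console.log(admin.role, member.email); // admin e2e-user-2@example.test
```

Defaults live in one place, so a change to the seeding contract only requires an update to the factory, not to every spec.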

Everything worked like a charm: all tests passed locally, and then on CI too. I was the proudest guy in the team meeting for a couple of weeks!

The Battle Part

The dream run came to a halt when I realised that much of my hard work might have to be redone!

These were the reasons:

1. User Interfaces never settle down

The product team planned and launched a user interface refresh with a brand-new UX. Some of the changes were not even major: a button label changed from “Submit” to “Save Changes”, a few class names were refactored, and one modal was now rendered dynamically instead of being present in the DOM from the start.

So, what happened?

- My button click tests failed due to text changes.

- Modal tests couldn’t locate elements that now only appeared conditionally.

- Navigation flows were slightly different, causing tests to hang or time out.

What did I learn?

UI-based E2E tests are fragile by nature. Even small, intentional changes break everything downstream (sigh).
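To see why the relabel hurt, here is a small plain-TypeScript illustration that does not use Cypress. It assumes a hypothetical in-memory `UiElement` list standing in for the DOM. A lookup by visible text breaks when “Submit” becomes “Save Changes”. A lookup by a `data-test` attribute survives the change.

```typescript
// Simplified stand-in for DOM nodes; not a real DOM or Cypress API.
interface UiElement {
  text: string;
  attrs: Record<string, string>;
}

// Locate by visible text: coupled to copy that product/UX may change at any time.
function findByText(els: UiElement[], text: string): UiElement | undefined {
  return els.find((el) => el.text === text);
}

// Locate by a dedicated test attribute: changes only if someone changes it on purpose.
function findByTestId(els: UiElement[], id: string): UiElement | undefined {
  return els.find((el) => el.attrs["data-test"] === id);
}

const beforeRefresh: UiElement[] = [{ text: "Submit", attrs: { "data-test": "profile-save" } }];
const afterRefresh: UiElement[] = [{ text: "Save Changes", attrs: { "data-test": "profile-save" } }];

console.log(findByText(beforeRefresh, "Submit") !== undefined); // true
console.log(findByText(afterRefresh, "Submit") !== undefined); // false
console.log(findByTestId(afterRefresh, "profile-save") !== undefined); // true
```

In Cypress, the second style corresponds to a query such as `cy.get('[data-test="profile-save"]')`. Stable attributes do not fix the conditionally rendered modal, though, because that is a timing problem rather than a naming one.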

2. Data dependencies can be costly

I depended on backend APIs to seed realistic test data. But these APIs changed silently, breaking assumptions about user roles and default field values.

What did I learn?

If your tests depend on mutable data or APIs you don’t have control over, your suite is only as stable as its weakest API contract.
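One way to make such API changes fail loudly instead of silently is to validate the seeded response before any test uses it. Here is a minimal sketch, assuming a hypothetical response shape with `role` and `notificationsEnabled` fields (invented for illustration).

```typescript
// Hypothetical contract our tests assumed for the seeding API's response.
const ALLOWED_ROLES = ["admin", "member"] as const;
type Role = (typeof ALLOWED_ROLES)[number];

interface SeededUser {
  id: string;
  role: Role;
  notificationsEnabled: boolean;
}

// Returns every contract violation found, so a failing setup explains what drifted.
function checkSeededUser(data: unknown): string[] {
  if (typeof data !== "object" || data === null) return ["response is not an object"];
  const obj = data as Record<string, unknown>;
  const problems: string[] = [];
  if (typeof obj.id !== "string") problems.push("id must be a string");
  if (!ALLOWED_ROLES.includes(obj.role as Role)) problems.push(`unexpected role: ${String(obj.role)}`);
  if (typeof obj.notificationsEnabled !== "boolean") problems.push("notificationsEnabled must be a boolean");
  return problems;
}

// Throws during setup, so the failure points at the API rather than at a later UI step.
function assertSeededUser(data: unknown): SeededUser {
  const problems = checkSeededUser(data);
  if (problems.length > 0) throw new Error(`Seed contract violated: ${problems.join("; ")}`);
  return data as SeededUser;
}

console.log(checkSeededUser({ id: "u1", role: "owner", notificationsEnabled: "yes" }).length); // 2
```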

3. Weak Selectors and Timing Hell

Even though I used data-test attributes, dynamic rendering introduced race conditions. Animations and lazy-loaded modals caused selectors to fail intermittently.

What did I learn?

Intermittent test failures create trust issues. Once a test fails intermittently, no one believes it anymore, even when it’s right.
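The usual cure for this kind of race is to re-check a condition until it holds or a timeout expires, rather than asserting once or sleeping for a fixed time. Here is a minimal sketch with a hypothetical `waitUntil` helper. It uses an injected fake clock so it runs synchronously and deterministically. The 4000 ms default mirrors Cypress's default command timeout, and the 100 ms interval is an arbitrary choice.

```typescript
// A fake clock so the example runs synchronously and deterministically.
interface Clock {
  now(): number;
  sleep(ms: number): void;
}

function createFakeClock(): Clock {
  let t = 0;
  return { now: () => t, sleep: (ms: number) => { t += ms; } };
}

// Poll a condition until it holds or the timeout expires; returns elapsed ms on success.
function waitUntil(condition: () => boolean, clock: Clock, timeoutMs = 4000, intervalMs = 100): number {
  const start = clock.now();
  while (true) {
    if (condition()) return clock.now() - start;
    if (clock.now() - start >= timeoutMs) {
      throw new Error(`Condition not met within ${timeoutMs} ms`);
    }
    clock.sleep(intervalMs);
  }
}

// Simulate a lazy-loaded modal that becomes visible 350 ms after the click.
const clock = createFakeClock();
const modalVisibleAt = 350;
const elapsed = waitUntil(() => clock.now() >= modalVisibleAt, clock);
console.log(elapsed); // 400
```

Cypress already retries its built-in queries and assertions this way, and Playwright auto-waits on actions. A hand-rolled helper like this is only needed for custom conditions, such as polling an API until a background job finishes.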

The Research Time

I didn’t want to throw away my work. So I went into research mode. I started exploring tools, techniques, and approaches to strengthen the suite.

Tools Evaluated

1. Playwright

- Faster and more reliable in async-heavy apps.

- Smart selectors (locators) and auto-waiting.

- Cross-browser support out of the box.

- Powerful test isolation through browser contexts.

- Built-in parallel execution.

Verdict: Loved it, but too late in the game to rewrite 20+ Cypress tests mid-sprint.

2. Nightwatch

- An all-in-one test framework.

- Selenium WebDriver and W3C WebDriver support.

- Simple syntax and Page Object Model support.

- Cross-browser and mobile device testing.

- A strong community and long-term stability.

Verdict: Nightwatch is solid if you’re in a legacy Selenium ecosystem or need WebDriver compatibility. In my case, the cost of rewriting the existing tests was still a big reason to stay away.

3. Bug0

- A fully managed, AI-powered QA platform.

- Zero setup and no codebase access required; it works from just a staging URL.

- Excellent test compatibility matrix with rich test reporting.

- Out-of-the-box integrations for CI/CD, notifications, and real-time reporting.

- Self-healing support that lets tests adapt automatically when the UI changes.

Verdict: The self-healing feature was the turning point for me. Just when I was considering scrapping and rewriting the entire test suite, Bug0 showed a lot of promise!

4. BrowserStack

- A popular DIY testing platform.

- You can test your apps on a massive grid of real browser and OS combinations.

- Access to logs, video recordings, and screenshots makes debugging easy.

- Supports major frameworks such as Cypress, Playwright, Selenium, and Nightwatch.

- Plugs seamlessly into CI/CD.

Verdict: BrowserStack is great for real-world compatibility coverage without the headache of maintaining your own infrastructure. Still, it requires manual setup, maintenance, and the effort of writing and updating tests.

I didn’t stop my research with these four alone. I went ahead and looked into two more possibilities:

5. Mock Service Worker (MSW)

- Intercepts network calls and returns mock data.

- Helps decouple tests from backend flakiness.

Verdict: A true lifesaver. Tests became stable and deterministic.
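Mock Service Worker (MSW) does this at the network layer. The core idea can be sketched without the library: a table of handlers keyed by method and path, plus a strict policy that fails any request nobody mocked. That policy is similar in spirit to MSW's `onUnhandledRequest: "error"` option. The handler table and `resolveMock` below are hypothetical illustrations, not MSW's API.

```typescript
type Method = "GET" | "POST" | "PUT" | "DELETE";

interface MockResponse {
  status: number;
  body: unknown;
}

// Hypothetical handler table keyed by "METHOD /path" (query strings are ignored).
type Handlers = Record<string, () => MockResponse>;

const handlers: Handlers = {
  "GET /api/profile": () => ({ status: 200, body: { name: "Test User", role: "member" } }),
  "PUT /api/profile": () => ({ status: 204, body: null }),
};

// Unhandled requests throw, so a test can never silently fall through to a real (flaky) backend.
function resolveMock(method: Method, url: string, table: Handlers = handlers): MockResponse {
  const path = url.split("?")[0];
  const handler = table[`${method} ${path}`];
  if (!handler) {
    throw new Error(`Unhandled request: ${method} ${path}`);
  }
  return handler();
}

console.log(resolveMock("GET", "/api/profile?include=settings").status); // 200
```

Because every response is canned, each run sees identical data. That determinism is what made the tests stable.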

6. Low-Code Platforms (Testim, Reflect.run)

- Great for quick UI test creation

- Non-technical folks could contribute

Verdict: Useful, but lacked control and flexibility for our edge cases.

The Final Selection

I’ll be honest: I was sceptical. I’ve used “AI-powered” tools before, and most were just keyword detection with a fancy UI. But I found Bug0 different in three major ways:

1. Self-Healing Tests That Actually Worked

When a button label changed or a DOM structure was slightly refactored, Bug0’s test runner intelligently adapted using visual cues and context, not just selectors. I could see in the logs where it adjusted and why.

This alone reduced maintenance time by at least 60%.

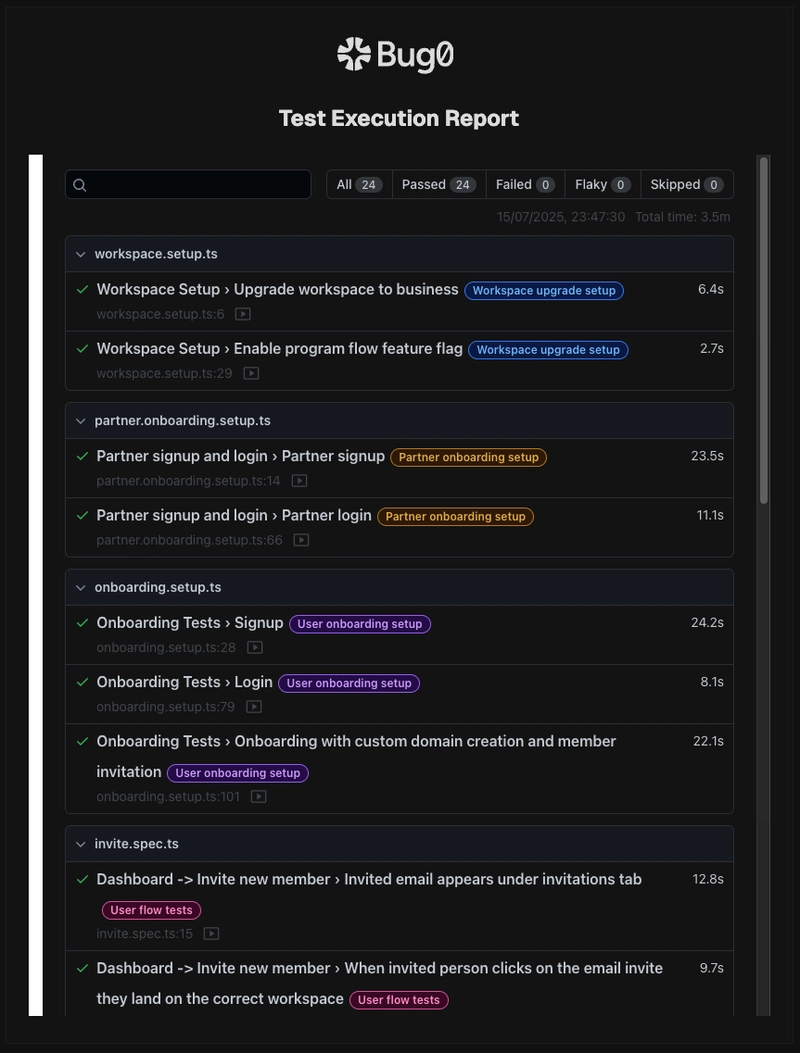
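This is not how Bug0 works internally; the description above mentions visual cues and context. A far simpler idea in the same spirit is an ordered list of candidate locators that records when a fallback was used, so someone can later fix the primary locator. Here is a minimal sketch. The `El` and `Candidate` types and the candidate list are hypothetical.

```typescript
// Simplified element model; a real runner would query the DOM.
interface El {
  testId?: string;
  role?: string;
  label: string;
}

interface Candidate {
  description: string;
  matches: (el: El) => boolean;
}

interface LocateResult {
  element: El;
  usedFallback: boolean;
  matchedBy: string;
}

// Try candidates in priority order and report whether anything but the first matched.
function locateWithFallback(els: El[], candidates: Candidate[]): LocateResult {
  let index = 0;
  for (const candidate of candidates) {
    const element = els.find((el) => candidate.matches(el));
    if (element) {
      return { element, usedFallback: index > 0, matchedBy: candidate.description };
    }
    index += 1;
  }
  throw new Error(`No candidate matched: ${candidates.map((c) => c.description).join(", ")}`);
}

const saveButtonCandidates: Candidate[] = [
  { description: "data-test 'profile-submit'", matches: (el) => el.testId === "profile-submit" },
  { description: "label 'Submit'", matches: (el) => el.label === "Submit" },
  { description: "button role", matches: (el) => el.role === "button" },
];

// After the refresh, both the test id and the label changed.
const result = locateWithFallback(
  [{ testId: "profile-save", role: "button", label: "Save Changes" }],
  saveButtonCandidates,
);
console.log(result.matchedBy, result.usedFallback); // button role true
```

A loose fallback such as "any button" can click the wrong element, which is why the fallback is surfaced in the result for review rather than silently accepted.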

2. Dynamic Test Validation and Real-time Reporting

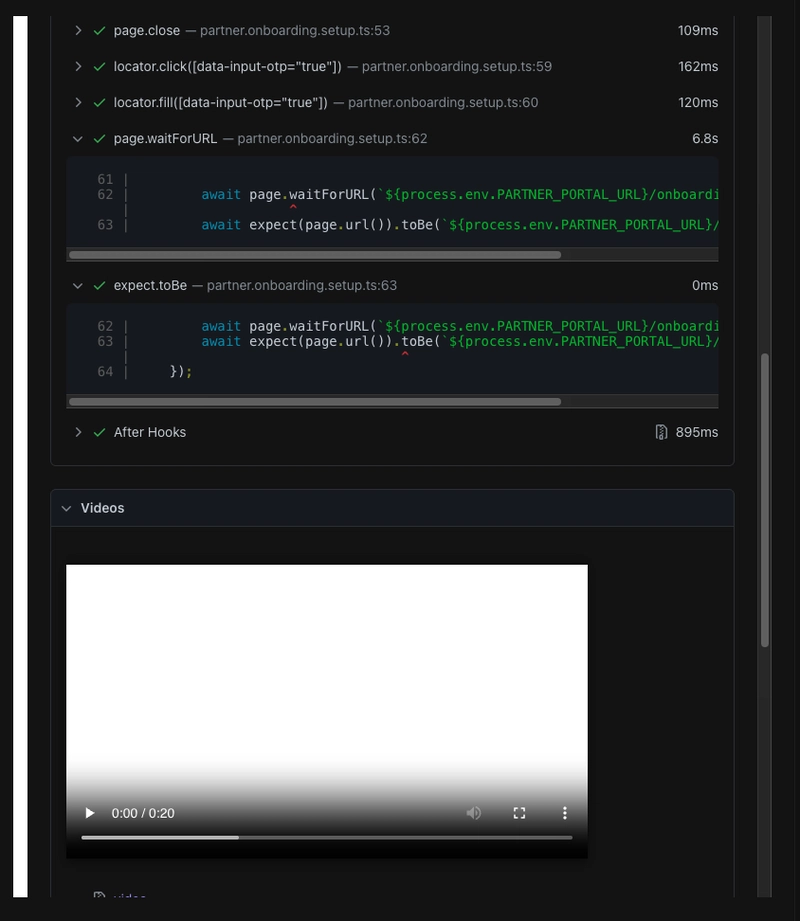

Bug0 allowed me to run tests against two app versions (before and after refactors) and generated real-time reports. Each check in the report supports drill-down.

Drilling down showed the status of every test, along with a video recording of each failure.

I didn’t have to manually figure out what UI or data changes broke my tests — it told me. This was a huge timesaver during sprints, where design tweaks were common.

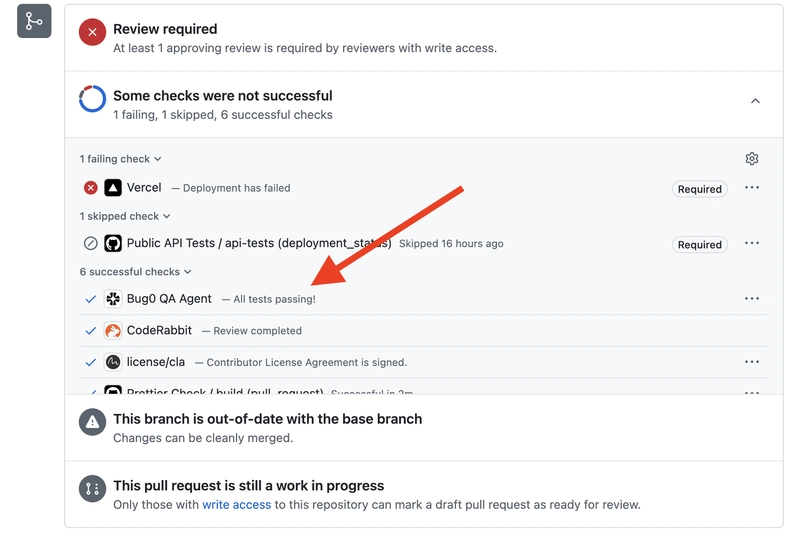
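I don't know Bug0's internal report format, so this is a tool-agnostic sketch of the comparison that made the before/after view useful: which tests flipped between two app versions. It assumes each run is reduced to a hypothetical map of test name to status.

```typescript
type Status = "passed" | "failed";

// One run reduced to: test name -> status.
type Run = Record<string, Status>;

interface RunDiff {
  newlyFailing: string[];
  newlyPassing: string[];
  missing: string[]; // present before, absent after (renamed or deleted)
}

function diffRuns(before: Run, after: Run): RunDiff {
  const diff: RunDiff = { newlyFailing: [], newlyPassing: [], missing: [] };
  for (const name of Object.keys(before)) {
    const prev = before[name];
    const next: Status | undefined = after[name];
    if (next === undefined) {
      diff.missing.push(name);
    } else if (prev === "passed" && next === "failed") {
      diff.newlyFailing.push(name);
    } else if (prev === "failed" && next === "passed") {
      diff.newlyPassing.push(name);
    }
  }
  return diff;
}

const beforeRefactor: Run = { login: "passed", "profile update": "passed", dashboard: "failed" };
const afterRefactor: Run = { login: "passed", "profile update": "failed", dashboard: "passed" };
console.log(diffRuns(beforeRefactor, afterRefactor).newlyFailing.join(", ")); // profile update
```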

3. CI/CD Integration

Last but not least: CI/CD integration and compatibility. Bug0 can integrate with pipelines on GitHub, GitLab, or Bitbucket. I needed GitHub, and it was a perfect marriage!

Once the test suite was ready, Bug0 plugged it into the CI/CD workflow through its GitHub/GitLab/Bitbucket app. The setup was super fast (within a minute); the suite was triggered on every pull request and ran silently in the background on every change.

My Overall Learning

Traditional Tools are Great, But…

While evaluating tools, frameworks, and platforms, I found that most traditional DIY testing tools, like BrowserStack, Playwright, and Nightwatch, are great and solve real problems.

However, they are not easy to maintain in the long run. When selecting a QA platform or tool, consider future maintenance costs, the dedicated QA effort required, and the manual work of managing test cases.

For about a decade now, the industry has been moving from manual testing toward automation. If you are wondering about current trends, a 2025 Forrester study found that 55% of organizations already use AI in their testing workflows, with 70% of mature DevOps teams relying on AI-powered tools to maintain speed and coverage.

Yes! Agentic AI, human-in-the-loop QA, and continuous quality systems are not dreams or fairy tales. They are the “new reality” that makes us much more productive and efficient.

Understanding Testing Layers

I come from a developer background. I knew about as little about testing layers as a QA engineer might know about a complex design pattern (no offence!).

It pays to learn these layers and the differences between them, so that your testing strategy is sound:

- Unit Tests: For logic.

- Component Tests: For User Interfaces

- Minimal E2E Tests: Only the highest-value flows, stabilized via Bug0 + MSW.

Decouple tests from their dependencies:

- Replace flaky backend setups with mocks.

- Use fixture versioning to avoid silent data shifts.
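Fixture versioning can be as simple as stamping each fixture with a schema version and refusing to load a mismatched one. Here is a minimal sketch. The `schemaVersion` field, `EXPECTED_SCHEMA_VERSION`, and `loadFixture` are hypothetical conventions, not something Cypress or MSW enforces.

```typescript
// Hypothetical on-disk shape of a versioned fixture.
interface Fixture<T> {
  schemaVersion: number;
  data: T;
}

// The schema version the current test code was written against (hypothetical).
const EXPECTED_SCHEMA_VERSION = 3;

// Refuse stale or future fixtures instead of letting tests run on silently shifted data.
function loadFixture<T>(raw: string, expected: number = EXPECTED_SCHEMA_VERSION): T {
  const parsed = JSON.parse(raw) as Fixture<T>;
  if (parsed.schemaVersion !== expected) {
    throw new Error(
      `Fixture schema v${parsed.schemaVersion} does not match expected v${expected}; regenerate or migrate it`,
    );
  }
  return parsed.data;
}

const profile = loadFixture<{ name: string }>('{"schemaVersion":3,"data":{"name":"Test User"}}');
console.log(profile.name); // Test User
```

Bumping the expected version whenever the API contract changes turns a silent data shift into an explicit, reviewable failure.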

Ownership & Documentation

Even with an AI-powered platform that automates much of test writing and execution, you still need to take care of:

- Keeping test guides in the repository.

- Slack (or whichever tool you use) alerts for flaky failures.

- Making sure developers know how to debug tests and contribute new ones.
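Before alerting on failures, it helps to separate flaky tests from genuine regressions. Here is a simple heuristic (my illustration, not a feature of any tool mentioned here): flag a test as flaky when the same commit produced both a pass and a fail, for example across CI retries. The `Attempt` shape is hypothetical, and sending the result to Slack is left out.

```typescript
interface Attempt {
  test: string;
  commit: string;
  passed: boolean;
}

// Flag a test as flaky if, for the same commit, it has both passed and failed.
function findFlakyTests(attempts: Attempt[]): string[] {
  const groups = new Map<string, { test: string; outcomes: Set<boolean> }>();
  for (const a of attempts) {
    const key = `${a.commit}::${a.test}`;
    let group = groups.get(key);
    if (!group) {
      group = { test: a.test, outcomes: new Set<boolean>() };
      groups.set(key, group);
    }
    group.outcomes.add(a.passed);
  }
  const flaky = new Set<string>();
  groups.forEach((group) => {
    if (group.outcomes.size === 2) flaky.add(group.test);
  });
  return Array.from(flaky).sort();
}

const attempts: Attempt[] = [
  { test: "login", commit: "abc123", passed: false },
  { test: "login", commit: "abc123", passed: true }, // passed on a CI retry
  { test: "profile update", commit: "abc123", passed: true },
  { test: "profile update", commit: "def456", passed: false }, // new commit: possibly a real regression
];
console.log(findFlakyTests(attempts).join(", ")); // login
```

A failure that appears only on a new commit is deliberately not labelled flaky. It should go through the normal "something broke" alert instead.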

Epilogue

I spent 40 hours building a test suite that broke in 2 weeks. It taught me to work smarter: research the options, then use the right tool at the right time.

A few words of empathy: if you’re struggling with test stability, ever-changing UIs, and time-consuming maintenance, you’re not alone. The testing pyramid exists for a reason.

Tools like MSW and platforms like Bug0 won’t magically fix bad practices, but they will amplify good ones. Testing is part of the development experience. It’s time to give it the same importance as every other layer of product development and delivery.

I hope my story did not bore you, and you found it insightful. I would love to know if it resonates with you and your experience in setting up automation testing strategies for your team.

That’s all for now. Let’s connect:

- Subscribe to my YouTube Channel.

- Follow on LinkedIn if you don’t want to miss the daily dose of up-skilling tips.

- Check out and follow my open-source work on GitHub.