Bringing Open JTalk to Elixir/Nerves: Make Your Pi Speak Japanese 🇯🇵

Introduction

One day my friend kurokouji asked, innocently opening a rabbit hole:

Can my Nerves-powered Raspberry Pi talk in Japanese using Open JTalk?

Challenge accepted.

I figured the answer was yes—I just didn’t know how yet. As Antonio Inoki once said:

元氣が有れば何でもできる (If you have spirit, you can do anything)

So there was no reason not to try. Worst case, I’d learn something. Best case, the Pi speaks Japanese. Thanks to modern AI tooling, we can now explore rabbit holes without getting totally lost.

The result is open_jtalk_elixir—a portable Elixir wrapper for Open JTalk, Japan’s classic text-to-speech engine.

This library:

- Builds a native Open JTalk CLI during compilation

- Bundles the required dictionary + voice assets by default

- Exposes a clean Elixir API (shown in “How to Use in Elixir” below)

Even if the destination was clear, the path was full of lessons. This post documents the journey—the dead ends, the design trade-offs, and how the pieces came together.

What Is Open JTalk?

Open JTalk is a well-established Japanese text-to-speech (TTS) engine widely used in research, embedded systems, and hobby projects.

It’s built from these components:

- MeCab 0.996: morphological analyzer that parses Japanese text into words with readings and features.

- HTS Engine API 1.10: statistical parametric speech synthesis engine.

- Open JTalk Dictionary 1.11: packaged UTF-8 dictionary (open_jtalk_dic_utf_8-1.11) used by MeCab. It’s derived from NAIST-JDIC and prepared specifically for Open JTalk.

- HTS Voice “Mei” (MMDAgent Example 1.8): widely used Japanese voice model.

- Open JTalk CLI 1.11: C-based command-line tool that ties it all together.

Despite its age, Open JTalk remains relevant—especially when you want offline, no-internet Japanese TTS in embedded environments like Raspberry Pi or Nerves.

Challenges in Embedded Environments

Making Open JTalk work inside a Nerves-powered Elixir firmware image isn’t trivial.

Here’s why:

1. Native Build Complexity

Open JTalk uses autotools to compile its C stack: MeCab, HTS Engine, and Open JTalk itself. These aren’t built for cross-compilation out of the box.

2. Required Assets Are Large

Open JTalk doesn’t work without its dictionary and voice model files. You need to ship:

- A dictionary (~60MB)

- A voice model (~40MB)

If you skip bundling them, your app might compile just fine… but silently fail to synthesize speech unless the user manually downloads those assets post-install.

That’s not the kind of “developer experience” we want.

The Approach That Worked: Vendor + Bundle + Shell Out

After exploring several approaches, I settled on this simple one:

- Vendor and compile all C dependencies at Elixir build time (mix compile)

- Bundle voice + dictionary files into the priv/ directory

- Use System.cmd/3 to shell out from Elixir to the native CLI

That means you get a fully working setup out-of-the-box, even on embedded targets like Raspberry Pi running Nerves.

If you’re concerned about firmware size—such as for minimal Nerves deployments—you can disable bundled assets like this:

OPENJTALK_BUNDLE_ASSETS=0 \
OPENJTALK_DIC_DIR=/data/jdic \
OPENJTALK_VOICE=/data/mei.htsvoice \
mix compile

You can even skip the build entirely (e.g. if prebuilt in CI):

OPENJTALK_BIN=./bin/open_jtalk mix compile

All we’re really doing is letting Elixir orchestrate the CLI, while the heavy lifting stays in native code. It might not be fancy, but it’s reliable, portable, and easy to debug.
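To make the shell-out idea concrete, here is a minimal sketch. It is not the library’s actual code: the module and function names are made up for illustration. The only things taken as given are System.cmd/3 and the open_jtalk flags -x (dictionary directory), -m (voice file), and -ow (output WAV path). The text goes through a temp file because the CLI reads its input from a file or stdin.

```elixir
defmodule ShellOutSketch do
  @moduledoc """
  Illustrative only: shells out to an open_jtalk binary with System.cmd/3.
  Module and function names are hypothetical, not the library's API.
  """

  # Build the argument list for the open_jtalk CLI.
  # -x: dictionary directory, -m: .htsvoice file, -ow: output WAV path.
  def build_args(dic_dir, voice, wav_path, text_path) do
    ["-x", dic_dir, "-m", voice, "-ow", wav_path, text_path]
  end

  # Write the text to a temp file, run the CLI, then clean up the temp file.
  # Note: System.cmd/3 raises if the binary itself cannot be found.
  def synthesize(text, opts) do
    bin = Keyword.fetch!(opts, :bin)
    dic_dir = Keyword.fetch!(opts, :dic_dir)
    voice = Keyword.fetch!(opts, :voice)
    wav_path = Keyword.fetch!(opts, :out)

    text_path =
      Path.join(System.tmp_dir!(), "ojt-#{System.unique_integer([:positive])}.txt")

    File.write!(text_path, text)

    try do
      args = build_args(dic_dir, voice, wav_path, text_path)

      case System.cmd(bin, args, stderr_to_stdout: true) do
        {_output, 0} -> {:ok, wav_path}
        {output, status} -> {:error, {status, output}}
      end
    after
      # Always remove the temp input file, even if the CLI fails.
      File.rm(text_path)
    end
  end
end
```

Keeping build_args/4 as a pure function means the argument handling can be checked without the native binary installed, which helps when debugging on a host machine before flashing firmware.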

Ideas That Didn’t Work

Before settling on the current approach, I tried several others. Here’s why they failed:

Custom Nerves System

The first idea was to build a custom Nerves system image with Open JTalk baked in. It sounds powerful: everything is precompiled and shipped as part of the firmware.

But in practice, it’s heavy for iteration and slow for development. Any time you want to tweak or test something, you’re rebuilding the world.

On top of that, even with Open JTalk included, you’d still need to wrap the CLI in Elixir and handle asset loading.

I ruled this out early—it wasn’t worth the complexity for what I was trying to build.

Elixir Native Implemented Functions (NIFs)

NIFs let you call C functions directly from Elixir—fast, no shelling out.

But NIFs come with risk: if anything goes wrong, they can crash the entire BEAM VM. Portability across different CPU/OS combinations is also tricky.

So I skipped this route too. Safer for me—and for your runtime.

Elixir Port

I’ve used Ports before—for example, in my sgp40 sensor library. It works great when input/output is simple.

Open JTalk isn’t that simple. Encoding issues, temp files, complex args… Port was more pain than gain.

Build System Details

The native stack is built via a Makefile + shell scripts. The flow:

- Fetch Sources: downloads and unpacks mecab, mecab-ipadic-utf8, hts_engine_API, and open_jtalk.

- Configure & Patch: applies platform-specific patches (e.g., macOS fixes, updated config.guess).

- Compile C Stack: each lib is compiled using autotools and linked statically when possible.

- Bundle CLI + Assets: places everything into priv/, ready for Elixir to use.
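For readers wondering how a Makefile gets hooked into mix compile, one common way is the elixir_make package. The sketch below assumes that setup; the app name, versions, and make targets are placeholders, not copied from open_jtalk_elixir.

```elixir
# mix.exs (sketch): run `make` as part of `mix compile` via elixir_make.
# App name, versions, and targets below are placeholders.
defmodule MyTtsWrapper.MixProject do
  use Mix.Project

  def project do
    [
      app: :my_tts_wrapper,
      version: "0.1.0",
      elixir: "~> 1.15",
      # Run the Makefile before the regular Elixir compilers.
      compilers: [:elixir_make | Mix.compilers()],
      make_targets: ["all"],
      make_clean: ["clean"],
      deps: deps()
    ]
  end

  defp deps do
    # Build-time only; not needed in the running release.
    [{:elixir_make, "~> 0.8", runtime: false}]
  end
end
```

With this in place, the Makefile stays in charge of the native steps and Mix only triggers it.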

Cross-Platform Portability

Getting it to run on:

- 🐧 Linux (x86_64 & ARM)

- 🍎 macOS (Apple Silicon)

- 🐢 Raspberry Pi via Nerves

…was an adventure.

Autotools Pain

The config.guess and config.sub scripts bundled with these older sources don’t recognize newer platforms, so configure fails with:

configure: error: cannot guess build type

So I inject fresh config.sub and config.guess before each build.
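The real build does this step in shell scripts. Purely to illustrate the idea in Elixir, here is a small sketch that overwrites every config.guess and config.sub under a source tree with fresh copies. The module name and directory layout are assumptions for the example.

```elixir
defmodule ConfigScriptsSketch do
  # Overwrite every config.guess / config.sub found under `source_root`
  # with the fresh copy of the same name from `fresh_dir`.
  # Returns the list of files that were replaced.
  # `fresh_dir` should live outside `source_root`.
  def refresh(source_root, fresh_dir) do
    for name <- ["config.guess", "config.sub"],
        target <- Path.wildcard(Path.join([source_root, "**", name])) do
      copy(Path.join(fresh_dir, name), target)
    end
  end

  defp copy(from, to) do
    File.cp!(from, to)
    # configure executes these scripts, so keep them executable.
    File.chmod!(to, 0o755)
    to
  end
end
```

Running it before each configure invocation keeps the step idempotent: unpacking the sources again simply gets the scripts replaced again.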

macOS Quirks

- BSD install doesn’t support -D → shim script added.

- No static linking → disabled on Darwin.

Nerves Cross-Compiling

Nerves handles toolchains if you respect MIX_TARGET and pass the right env:

export MIX_TARGET=rpi4

mix deps.get

mix compile

mix firmware

Worked like a charm!

How to Use in Elixir

After mix compile, usage is dead simple:

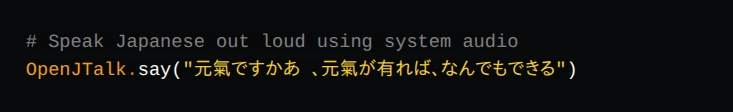

# Speak out loud using system audio

OpenJTalk.say("元氣が有れば、何でもできる")

# Synthesize to WAV

OpenJTalk.to_wav("こんにちは", out: "/tmp/test.wav")

# Get WAV as binary

{:ok, binary} = OpenJTalk.to_binary("おはようございます")

Options Available

All functions accept the same set of options, which map to Open JTalk’s CLI flags but with friendlier names:

| Option | Meaning | Default |
|---|---|---|
| :pitch_shift | Semitone shift | 0 |
| :rate | Speaking speed multiplier | 1.0 |
| :timbre | Voice quality adjustment | 0.0 |
| :gain | Output gain (in dB) | 0 |
| :voice | Path to .htsvoice file | (bundled) |
| :dictionary | Path to dictionary folder | (bundled) |
| :timeout | Max runtime in milliseconds | 20000 |
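As a rough picture of what “friendlier names” could mean, here is a sketch that turns a few of these options into CLI arguments. The flags are real open_jtalk flags (-fm adds half-tones, -r sets the speech rate, -g sets volume in dB), but the pairing with option names is my assumption, and the module is hypothetical. :timbre is left out because its translation isn’t described here.

```elixir
defmodule OptionFlagsSketch do
  # Assumed pairing of friendly option names with open_jtalk flags.
  # -fm: additional half-tone, -r: speech speed rate, -g: volume in dB.
  @flags [pitch_shift: "-fm", rate: "-r", gain: "-g"]

  # Convert a keyword list of options into a flat list of CLI arguments.
  # Options that are not given produce no arguments, so the CLI defaults apply.
  def to_args(opts) do
    Enum.flat_map(@flags, fn {key, flag} ->
      case Keyword.fetch(opts, key) do
        {:ok, value} -> [flag, to_string(value)]
        :error -> []
      end
    end)
  end
end
```

For example, OptionFlagsSketch.to_args(rate: 1.2, pitch_shift: 2) yields the arguments in table order, "-fm" then "-r", regardless of the order in which the caller wrote them.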

Asset Resolution

The library automatically finds the required pieces (binary, dictionary, voice) in this order:

1. Environment variables (OPENJTALK_CLI, OPENJTALK_DIC_DIR, OPENJTALK_VOICE)

2. Bundled assets: files placed in priv/ at compile time

3. System locations: /usr/share, /usr/local/share, Homebrew, etc.

You can inspect asset resolution:

OpenJTalk.info()

If you ever swap files or tweak environment variables at runtime, you can reset the cache with:

OpenJTalk.Assets.reset_cache()
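The lookup order and cache can be sketched in a few lines. This is a simplified illustration for the voice file only, not the library’s implementation: the module name, the file name voice.htsvoice, and the system path are placeholders, and using :persistent_term for the cache is my choice for the example.

```elixir
defmodule AssetLookupSketch do
  @cache_key {__MODULE__, :voice}

  # Resolve the voice file: environment variable first, then the bundled
  # copy under `priv_dir`, then a system location. The result is cached.
  def voice_path(priv_dir) do
    case :persistent_term.get(@cache_key, :unset) do
      :unset ->
        found = Enum.find(candidates(priv_dir), &usable?/1)
        :persistent_term.put(@cache_key, found)
        found

      cached ->
        cached
    end
  end

  # Forget the cached answer so the next call looks everything up again.
  def reset_cache do
    :persistent_term.erase(@cache_key)
    :ok
  end

  # Candidate paths in priority order (file names are placeholders).
  defp candidates(priv_dir) do
    [
      System.get_env("OPENJTALK_VOICE"),
      Path.join(priv_dir, "voice.htsvoice"),
      "/usr/share/open_jtalk/voice.htsvoice"
    ]
  end

  # An unset environment variable shows up as nil, so guard against it.
  defp usable?(path), do: is_binary(path) and File.exists?(path)
end
```

The cache is why a reset is needed after changing environment variables at runtime: until it is cleared, the earlier answer keeps being returned.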

Lessons Learned

- Bundle assets by default: Users expect things to “just work.” Opt-out is fine, but opt-in creates surprises and frustration.

- Respect Nerves conventions: Cross-compilation works smoothly if you let Nerves set the toolchain. Fighting it only adds pain.

- Autotools lacks modern platform support: Legacy config.guess and config.sub frequently fail; replacing them is essential for portability.

- macOS quirks matter: Even if you don’t use macOS, someone else will. Handle BSD tool differences and static linking limits early.

- Elixir + shelling out is a solid choice: For complex native tools, it’s simpler and safer than managing Ports.

- Keep Makefiles boring: Avoid turning Makefiles into a programming language. Offload logic to scripts for clarity and maintainability.

- Test on ARM early: x86 isn’t the default world anymore. Raspberry Pi, Apple Silicon, and cloud ARM runners should be first-class citizens.

Final Thoughts

I started with a goal: get my Raspberry Pi to talk Japanese using Elixir.

It turned into a full-blown cross-platform build system for a decades-old C library—with clean UX and out-of-the-box Nerves support.

But the end result? A Raspberry Pi that speaks Japanese.

That’s all we wanted.

Give open_jtalk_elixir a try, and let your Pi speak too.