Agentic AI: How LLMs Really Work Behind the Scenes

Ever wondered what really happens when you upload a PDF (or any file) into ChatGPT?

At first glance, it seems like ChatGPT just magically “reads” your file. But the truth is more fascinating — it involves Agents, Tools, and Agentic AI.

Let’s break it down.

So, what exactly are AI Agents?

An AI agent is a system that can reason about a goal, plan the steps, and act on them, somewhat like a human would.

You can think of it like this:

- LLM (Large Language Model) – the brain, which does the reasoning and planning

- Tools – the body, which carries out actions and interacts with the environment

In short:

Agent → uses Tools → performs Actions

Example: Google Assistant

Say you ask Google Assistant:

“Schedule a meeting with the marketing team tomorrow.”

Here’s what happens behind the scenes:

- The agent figures out what you want (schedule a meeting).

- It decides which tool to use — your calendar app.

- It checks for conflicts, finds a time that works, and creates the event.

Essentially, the assistant thinks, plans, and acts, just like a ChatGPT agent does when handling your PDFs.
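The three steps above can be sketched in a few lines. This is only an illustration, not how Google Assistant is implemented: `find_free_slot`, `schedule_meeting`, and the in-memory `calendar` list are hypothetical names, and the intent is hard-coded instead of being parsed by a model.

```python
from datetime import datetime, timedelta

# Hypothetical in-memory calendar: a list of (start, end) tuples.
calendar = [
    (datetime(2025, 1, 15, 9, 0), datetime(2025, 1, 15, 10, 0)),
    (datetime(2025, 1, 15, 10, 0), datetime(2025, 1, 15, 11, 30)),
]

def find_free_slot(events, day_start, day_end, duration):
    """Return the first start time in the day with no conflict, or None."""
    candidate = day_start
    for start, end in sorted(events):
        if candidate + duration <= start:
            break  # the gap before this event is big enough
        candidate = max(candidate, end)
    if candidate + duration > day_end:
        return None
    return candidate

def schedule_meeting(events, title, day_start, day_end, duration):
    """Tool call: check conflicts, pick a slot, create the event."""
    slot = find_free_slot(events, day_start, day_end, duration)
    if slot is None:
        return f"No free slot found for '{title}'."
    events.append((slot, slot + duration))
    return f"Scheduled '{title}' at {slot:%H:%M}."

print(schedule_meeting(
    calendar,
    "Marketing team meeting",
    day_start=datetime(2025, 1, 15, 9, 0),
    day_end=datetime(2025, 1, 15, 17, 0),
    duration=timedelta(minutes=30),
))
```

With the two existing events shown, the first free half hour starts at 11:30, so that is the slot the tool books.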

Tools: The Agent’s Body

If the LLM is the brain, then Tools are the body that lets the agent actually do things (actions) in the world.

These tools are external functions or APIs that the agent calls to get stuff done — from reading PDFs to fetching data from the web or querying databases.

Why are tools needed?

Because an LLM on its own only turns the input it is given into generated output.

It can’t open your PDFs, fetch live data, or connect to external systems on its own, and a text-only model can’t see images either.

That’s where tools act as translators — bridging the gap between the model and the world.

Common examples include:

- PDF Parser

- Web Search

- Calculator

- Image Captioning

- Database Connector

- Calendar

- Weather API
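One simple way to picture this: each tool is a plain function, and the agent runtime keeps a registry that maps tool names to those functions. The sketch below assumes that layout; `TOOLS`, `call_tool`, `calculator`, and `weather` are hypothetical names, and the weather tool is a stub that returns a placeholder string.

```python
import ast
import operator

# Arithmetic operators the calculator tool is allowed to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def calculator(expression: str) -> float:
    """Safely evaluate a basic arithmetic expression such as '2 * (3 + 4)'."""
    def evaluate(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](evaluate(node.left), evaluate(node.right))
        raise ValueError("unsupported expression")
    return evaluate(ast.parse(expression, mode="eval").body)

def weather(city: str) -> str:
    """Stub: a real tool would call a weather service here."""
    return f"(stub) forecast for {city}"

# Hypothetical registry: tool name -> callable.
TOOLS = {"calculator": calculator, "weather": weather}

def call_tool(name: str, **kwargs):
    """Dispatch a tool call by name, the way an agent runtime might."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**kwargs)

print(call_tool("calculator", expression="2 * (3 + 4)"))  # prints 14
print(call_tool("weather", city="Paris"))
```

The model never runs these functions itself. It only names a tool and its arguments, and the surrounding code does the dispatch.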

Example: Checking Weather Using a Weather API

Imagine you ask ChatGPT:

“What’s the weather like in Paris this weekend?”

Here’s what happens behind the scenes:

- The agent realizes it needs real-time weather data

- It decides to use a Weather API tool

- The tool fetches the data

- The agent passes it to the LLM

- The LLM summarizes it in natural language

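Behind the Scenes (Code Example):

    import re

    def call_weather_api(location):
        # Hypothetical stand-in for a real weather service; returns canned data.
        return {"forecast": "sunny, around 23°C with light winds"}

    def extract_location(query):
        # Naive stand-in for the LLM's parsing step: take the capitalized word after "in".
        match = re.search(r"\bin ([A-Z][a-z]+)", query)
        return match.group(1) if match else "your area"

    def get_weather(location):
        # Tool: Weather API
        response = call_weather_api(location)
        return response["forecast"]

    def agent_query_weather(query):
        # Thought: understand the user request
        location = extract_location(query)
        # Action: use the tool
        forecast = get_weather(location)
        # Observation: turn the tool result into a response
        return f"The weather in {location} will be {forecast}."

    print(agent_query_weather("What's the weather in Paris this weekend?"))

Output:

    The weather in Paris will be sunny, around 23°C with light winds.

In a full agent, the LLM would rephrase that raw result into something friendlier, for example:

“It’ll be sunny in Paris this weekend, around 23°C with light winds, perfect for outdoor plans!”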

Key: The agent orchestrates the tools — the LLM doesn’t do everything alone.

System Prompts: Guiding the Agent

Every agent starts with a system prompt — it instructs the model on how it should behave.

Example: System prompt

“You are a friendly and helpful assistant. Provide concise and accurate responses.”

System prompts define tone, style, and behavior, so the AI feels more human-like.

They can also specify:

- Agent behavior – the role and tone it adopts

- Available Tools – what it can use

- Decision-making rules – what constraints it follows while answering
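Here is a rough sketch of how those three pieces could be combined into one system prompt. `build_system_prompt` and the tool names are hypothetical, and the role/content message list is a layout many chat APIs use, assumed here without tying it to a specific vendor.

```python
def build_system_prompt(behavior, tools, rules):
    """Assemble a system prompt from behavior, tool descriptions, and rules."""
    tool_lines = [f"- {name}: {description}" for name, description in tools.items()]
    rule_lines = [f"- {rule}" for rule in rules]
    return "\n".join(
        [behavior, "", "Available tools:", *tool_lines, "", "Rules:", *rule_lines]
    )

system_prompt = build_system_prompt(
    behavior="You are a friendly and helpful assistant. "
             "Provide concise and accurate responses.",
    tools={
        "pdf_parser": "Extract text from an uploaded PDF.",
        "web_search": "Look up current information on the web.",
    },
    rules=[
        "Use a tool only when the answer needs external data.",
        "Say so when you do not know something.",
    ],
)

# Role/content message list (assumed layout, not a specific vendor's API).
messages = [
    {"role": "system", "content": system_prompt},
    {"role": "user", "content": "Summarize the attached report."},
]
print(system_prompt)
```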

The Actual Workflow:

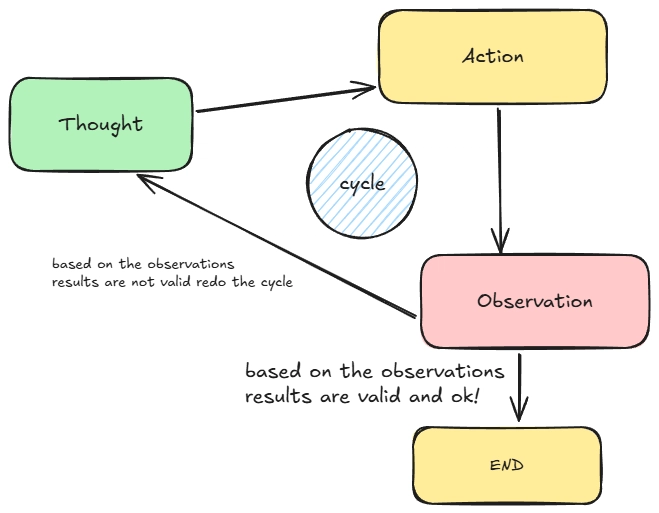

TAO Cycle — Thought → Action → Observation

Here’s a quick visual of how an Agent thinks and acts in loops:

1. Thought

The agent plans its next move — analyzing context, goals, and priorities

Techniques:

Chain-of-Thought (CoT): step-by-step reasoning

ReAct: reasoning + action combined

2. Action

The agent executes tasks: calls tools, fetches data, interacts with the environment.

3. Observation

The agent reads the result of the action, adds it to its working context, and decides the next step.
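The three phases can be sketched as a small loop. This is a minimal illustration under stated assumptions: `fake_llm` is a scripted stand-in for a real model, `get_weather` returns canned data, and every name here is hypothetical.

```python
def get_weather(city):
    """Stub tool: returns canned data instead of calling a real API."""
    return {"city": city, "forecast": "sunny", "temp_c": 23}

TOOLS = {"get_weather": get_weather}

def fake_llm(history):
    """Scripted stand-in for an LLM: decide the next step from the history."""
    observations = [step for step in history if step["type"] == "observation"]
    if not observations:
        # Thought: no data yet, so request a tool call.
        return {"type": "action", "tool": "get_weather", "args": {"city": "Paris"}}
    data = observations[-1]["content"]
    # Thought: enough data, so produce the final answer.
    return {
        "type": "final",
        "content": f"{data['city']}: {data['forecast']}, {data['temp_c']}°C",
    }

def run_agent(question, max_steps=5):
    history = [{"type": "question", "content": question}]
    for _ in range(max_steps):
        decision = fake_llm(history)  # Thought
        if decision["type"] == "final":
            return decision["content"]
        result = TOOLS[decision["tool"]](**decision["args"])  # Action
        history.append({"type": "observation", "content": result})  # Observation
    return "Stopped: step limit reached."

print(run_agent("What's the weather in Paris?"))
```

The `max_steps` cap is a design choice worth keeping in any real loop: it stops an agent that never reaches a final answer.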

Example: Planning a Trip to Paris

You ask:

“Plan a 3-day trip to Paris based on my interests.”

Thought: Figure out which attractions match your preferences

Action: Call tools like weather APIs, mapping tools, hotel searches

Observation: Check availability, update itinerary, suggest adjustments

The agent repeats this loop until you get a complete, optimized plan.
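To make the trip example concrete, here is a toy version of that loop. The attraction list and forecast are made-up data standing in for tool results, and `plan_trip` is a hypothetical helper, so treat it as a sketch of the idea and not a real planner.

```python
# Hypothetical data the tools might return.
ATTRACTIONS = [
    {"name": "Louvre", "tags": {"art"}, "indoor": True},
    {"name": "Musée d'Orsay", "tags": {"art"}, "indoor": True},
    {"name": "Luxembourg Gardens", "tags": {"parks"}, "indoor": False},
    {"name": "Montmartre walk", "tags": {"art", "walking"}, "indoor": False},
]
FORECAST = {1: "sunny", 2: "rain", 3: "sunny"}  # day -> weather (made up)

def plan_trip(interests, days=3):
    # Thought: keep attractions that match the user's interests.
    matches = [a for a in ATTRACTIONS if a["tags"] & interests]
    itinerary = {}
    for day in range(1, days + 1):
        # Action: look up the forecast for the day (stubbed tool result).
        weather = FORECAST[day]
        # Observation: on rainy days, only indoor options stay eligible.
        options = [a for a in matches if a["indoor"] or weather != "rain"]
        # Prefer outdoor spots when eligible, saving indoor ones for rain.
        options.sort(key=lambda a: a["indoor"])
        if options:
            choice = options[0]
            itinerary[day] = choice["name"]
            matches.remove(choice)
    return itinerary

print(plan_trip({"art", "parks"}))
```

With this data the rainy second day gets an indoor museum, while the two sunny days get outdoor stops.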

MCP: Smooth Tool Integration

MCP (Model Context Protocol) is an open standard that gives LLM applications a structured, predictable way to discover and call external tools:

- Provides a predictable way to access tools

- Reduces malformed or ad hoc tool calls, since each tool declares its name, description, and input schema

- Enables complex workflows like summarization, data extraction, and API orchestration

Think of it as the protocol that makes multiple tools work together seamlessly.
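To give a feel for the structure: MCP messages are JSON-RPC 2.0, a server describes each tool with a name, a description, and a JSON Schema for its input, and a client invokes a tool with the `tools/call` method. The sketch below builds such a request by hand just to show its shape. The `get_weather` tool and the helper functions are hypothetical, the argument check is heavily simplified, and a real integration would use an MCP SDK instead.

```python
import json

# A tool definition in the shape an MCP server advertises:
# a name, a description, and a JSON Schema for the arguments.
weather_tool = {
    "name": "get_weather",  # hypothetical tool
    "description": "Get the forecast for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

def missing_arguments(tool, arguments):
    """Return required argument names absent from `arguments` (simplified check)."""
    required = tool["inputSchema"].get("required", [])
    return [key for key in required if key not in arguments]

def make_tool_call(request_id, tool, arguments):
    """Build a JSON-RPC 2.0 request for the MCP `tools/call` method."""
    missing = missing_arguments(tool, arguments)
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    }

request = make_tool_call(1, weather_tool, {"city": "Paris"})
print(json.dumps(request, indent=2))
```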

Why Agentic AI Matters

Agentic AI turns passive models into proactive collaborators.

Agents can:

- Scan emails, extract info, and generate reports

- Coordinate multiple tools to achieve multi-step goals

- Think, plan, act, and adjust based on what they observe

Example: Generating a Company Report

Suppose you ask ChatGPT to create a report from multiple PDFs:

- The agent reads each file via the tools

- Summarizes each section

- Integrates insights

- Delivers a coherent report

All of this happens without you telling it step by step.
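Those four steps could look roughly like this. Both helpers are stubs and all names are hypothetical: `read_pdf` returns text from an in-memory dict where a real agent would call a PDF parser tool, and `summarize` keeps the first sentence where a real agent would call the LLM.

```python
# Stub "files": in practice a PDF parser tool would produce this text.
FAKE_PDFS = {
    "sales.pdf": "Revenue grew in Q3. Costs were flat. Outlook is positive.",
    "hiring.pdf": "Headcount rose by ten. Attrition stayed low.",
}

def read_pdf(path):
    """Stub tool: return the text of a 'PDF' from the in-memory dict."""
    return FAKE_PDFS[path]

def summarize(text):
    """Stub for an LLM call: keep only the first sentence."""
    return text.split(". ")[0].rstrip(".") + "."

def build_report(paths):
    # Steps 1-2: read each file through the tool and summarize it.
    summaries = {path: summarize(read_pdf(path)) for path in paths}
    # Steps 3-4: combine the per-file summaries into one report.
    lines = ["Company Report"]
    lines += [f"- {path}: {summary}" for path, summary in summaries.items()]
    return "\n".join(lines)

print(build_report(["sales.pdf", "hiring.pdf"]))
```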

Bottom Line

Uploading a PDF into ChatGPT might look simple.

But behind the scenes, an agent is _reasoning, planning, calling tools, observing results, and iterating_ intelligently.

This is Agentic AI — intelligence that doesn’t just respond, it acts.

We’re stepping into an era where AI won’t just assist — it will collaborate.