AI That Shows Its Work: The Transparent Revolution of PALs

“From black box to glass brain: how Program-Aided Language Models are making AI accountable, explainable, and easier for humans to understand.”

TL;DR

- Most AI models guess; Program-Aided Language Models (PALs) reason.

- Instead of answering in plain text, they write code that solves the problem and run it through an interpreter, showing every logical step along the way.

- This makes AI transparent, accurate, and explainable: a shift from black-box intuition to glass-box reasoning.

- Using LangChain and Gemini, we can build a simple PAL that turns a natural-language question into Python code, runs it through an interpreter, and produces a verifiable answer.

- PALs are the bridge between language and logic, neural and symbolic, creativity and computation.

- They represent a future of AI that doesn’t just answer: it shows its work.

We’ve all seen it: an AI that can write flawless essays, generate code, or even mimic human creativity… and yet it confidently stumbles on a basic math problem.

How can something smart enough to compose symphonies forget how to count?

The truth is simple: today’s AIs don’t actually reason; they predict. They’re masters of pattern recognition, not logic. They sound convincing, but inside, it’s guesswork wrapped in language.

When I first came across the concept of Program-Aided Language Models (PALs), it honestly blew my mind. I started thinking: what if AI could actually reason? What if it could think with logic, not just language?

Back when I was preparing for placements, I used AI to help me solve aptitude questions. I’d ask it to explain a problem step by step. Sure, it would give a detailed answer, but was it always right? That’s the billion-dollar question. Even with all those clear steps, the reasoning would often drift off course and land on a completely different answer.

You might say that was a year ago, before today’s models became more powerful. But even now, AI still struggles with complex logical reasoning. It can describe what to do but often fails to do it precisely.

So what if there were a way to fix that? A way to make AI reason through computation: to think, verify, and prove its logic?

That’s where Program-Aided Language Models (PALs) come in: an approach that bridges the gap between human-like creativity and machine-like precision. In this blog, we’ll explore how PALs make that possible, what makes them revolutionary, and, yes, the challenges that come with them.

🤖 What Are Program-Aided Language Models (PALs)?

Let’s start simple.

A Program-Aided Language Model (PAL) is an AI system that doesn’t just guess the answer; it builds the answer.

Instead of producing the answer directly as text, like a traditional language model, a PAL writes a short computer program (usually in Python) that represents its reasoning steps. That code is then executed by an interpreter to get the final, verifiable result.

It’s like giving AI a thinking space: not just to imagine, but to calculate, test, and confirm.

Think of it this way:

- A traditional AI is like a student solving a math problem entirely in their head: fast, but prone to mistakes.

- A PAL, on the other hand, is that same student realizing the problem’s tricky, grabbing a pen and paper, writing down the steps, and double-checking the answer with a calculator.

That’s the entire philosophy behind PALs:

“Don’t just say the answer. Prove it.”

PALs combine the linguistic intelligence of large language models (like GPT or Llama) with the logical precision of programming. The model’s job is to translate natural language into executable logic: to turn “What is (13×4) + (52÷2)?” into `print((13 * 4) + (52 / 2))`.

Once that code runs, the AI’s reasoning isn’t just theoretical; it’s verifiable. If it’s wrong, you can look at the program and debug its logic.

💡 A Simple Mental Picture

Here’s how it works under the hood:

```text
[User Question]
      ↓
[Language Model (LLM)]        → translates the question into a program
      ↓
[Generated Code]              → e.g., result = (13 * 4) + (52 / 2)
      ↓
[Interpreter Executes Code]   → runs the logic precisely
      ↓
[Final Answer]                → 78.0
```

So, in short: a PAL doesn’t just “guess” what’s right. It reasons, executes, and proves its own logic.

It’s AI that writes and runs its thoughts.

How PALs Work: From Prompt to Program

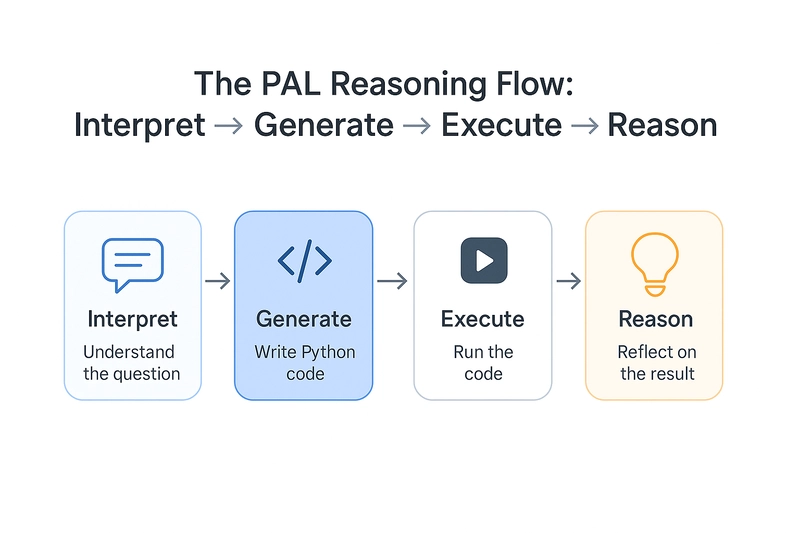

Now that you get what a PAL is, let’s peek under the hood at how it really works. PALs follow a simple but powerful cycle: interpret → generate → execute → reason.

Here’s how that unfolds step-by-step:

1️⃣ You Ask the Question

Everything starts with a natural-language query, just like how you’d talk to ChatGPT:

“What is the total cost of 5 shirts at $20 each with a 10% discount?”

To a traditional LLM, that’s just a string of words. But for a PAL, that’s an instruction to compute.

2️⃣ The LLM Writes Code Instead of Words

The PAL doesn’t try to calculate the answer directly. Instead, it translates your question into a short, executable program, typically in Python.

Example output from the model:

```python
shirts = 5
price = 20
discount = 0.10
total = (shirts * price) * (1 - discount)
print(total)
```

At this stage, the AI hasn’t “answered” yet; it has simply reasoned in code. It’s essentially saying: “Here’s how I’ll find the answer.”

3️⃣ The Interpreter Executes the Code

Once the code is generated, it’s sent to a separate component: the interpreter (such as Python). This part actually runs the code and returns the computed answer.

💡 Output: 90.0

So the final value you see, 90.0, isn’t a guess. It’s the exact result of running the generated logic.
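Here is a minimal sketch of step 3. It hands the generated program to a separate Python process and reads back what the program prints. The helper name `run_in_subprocess` and the 5-second timeout are my own choices for illustration; neither comes from any PAL library. `subprocess.run` and `sys.executable` are standard Python.

```python
import subprocess
import sys

# The program from step 2, as the LLM might return it.
generated_code = """
shirts = 5
price = 20
discount = 0.10
total = (shirts * price) * (1 - discount)
print(total)
"""


def run_in_subprocess(code: str, timeout_s: float = 5.0) -> str:
    """Run code in a fresh Python process and return its printed output.

    A separate process gives us a timeout and keeps crashes out of the caller,
    but it is NOT a security sandbox: the child can still use the file system.
    """
    completed = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout_s,  # raises subprocess.TimeoutExpired if exceeded
    )
    if completed.returncode != 0:
        return f"Execution error: {completed.stderr.strip()}"
    return completed.stdout.strip()


print(run_in_subprocess(generated_code))  # → 90.0
```

A separate process gives you a timeout and keeps crashes out of your main program. On its own, though, it is not a security boundary.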

4️⃣ The PAL Reflects and Responds

Finally, the PAL takes that result and wraps it in natural language again, giving you a clean, human-readable answer like:

“The total cost is $90 after a 10% discount.”

This closing step merges logic with language, ensuring the output isn’t just correct, but clear.

Putting It All Together

You can imagine the entire process as a beautiful loop between language and computation:

User → LLM → Code → Interpreter → Verified Answer

Or, in simpler terms: AI that writes down its reasoning, runs it, and answers from the result.

It’s like giving AI a brain for creativity and a calculator for truth.
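The whole loop can be sketched end to end in a few lines. This toy version rests on two assumptions:

- `fake_llm` is a hypothetical stand-in that always returns the shirt program from step 2. A real PAL would call a model at that point.
- The final sentence template is hard-coded for this one question. A real system might ask the LLM to phrase the answer instead.

```python
import contextlib
import io


def fake_llm(question: str) -> str:
    """Hypothetical stand-in for the LLM: always returns the step-2 program."""
    return (
        "shirts = 5\n"
        "price = 20\n"
        "discount = 0.10\n"
        "total = (shirts * price) * (1 - discount)\n"
        "print(total)\n"
    )


def run_code(code: str) -> str:
    """Interpreter step: execute the code and capture what it prints.

    exec() is NOT a sandbox; only run code you are prepared to trust.
    """
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, {})  # fresh globals dict, separate from our own variables
    return buffer.getvalue().strip()


def pal_answer(question: str) -> str:
    code = fake_llm(question)       # User -> LLM -> Code
    result = float(run_code(code))  # Code -> Interpreter
    # Step 4: wrap the computed value in natural language (hard-coded template).
    return f"The total cost is ${result:.2f} after a 10% discount."


question = "What is the total cost of 5 shirts at $20 each with a 10% discount?"
print(pal_answer(question))  # → The total cost is $90.00 after a 10% discount.
```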

Real-World Example: Solving a Data Problem

Okay, so PALs can handle arithmetic. But can they deal with real-world messiness: data, errors, and logic chains? Let’s test that.

Imagine this: you have a small CSV file containing product sales. Some data entries are clean, others… not so much. You ask your PAL:

“From this data, what’s the total revenue and average order value for the ‘Electronics’ category? Ignore bad data.”

Now, a traditional AI might try to answer in plain text, something like:

“You have 3 electronics items, and the total revenue is around $1300.”

That’s just an educated guess. It sounds confident, but there’s no proof it’s right.

A PAL, on the other hand, doesn’t gamble. It generates code to reason it out.

The PAL’s Thought Process in Code

Here’s what a PAL might write internally (simplified for clarity):

```python
import csv
import io

# Sample sales data; the last row has a malformed quantity.
data = """Category,Product,Price,Quantity
Electronics,Laptop,1200,1
Groceries,Apple,1.5,10
Electronics,Mouse,25,2
Books,Python Guide,45,3
Electronics,Keyboard,75,invalid_qty
"""

file_like = io.StringIO(data)
reader = csv.DictReader(file_like)

total_revenue = 0
product_count = 0

for row in reader:
    try:
        if row["Category"] == "Electronics":
            price = float(row["Price"])
            quantity = int(row["Quantity"])  # raises ValueError on bad data
            total_revenue += price * quantity
            product_count += 1
    except ValueError:
        continue  # skip rows that can't be parsed

if product_count > 0:
    average_revenue = total_revenue / product_count
    print(f"Total Revenue: ${total_revenue:.2f}")
    print(f"Average Order Value: ${average_revenue:.2f}")
else:
    print("No valid Electronics rows found.")
```

When executed, the PAL gets:

⚡ The Output

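```text
Total Revenue: $1250.00
Average Order Value: $625.00
```

The malformed Keyboard row is skipped, so both figures come from the two valid Electronics orders: $1200 + (2 × $25) = $1250, and $1250 ÷ 2 = $625.

That’s verifiable logic: no hallucination, no guessing, just transparent computation.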

Why This Matters

This example shows how PALs understand structure and reason with precision:

- They parse data systematically.

- They handle errors gracefully (`try`/`except`).

- They use logical conditions (`if row["Category"] == "Electronics"`).

- And, most importantly, they provide results that can be traced and trusted.
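One way to make “ignore bad data” itself traceable is to record which rows were skipped and why, instead of silently continuing. The sketch below is a hypothetical variant of the code above, not something a PAL is guaranteed to generate. The `skipped` list and the line-number bookkeeping are my additions.

```python
import csv
import io

data = """Category,Product,Price,Quantity
Electronics,Laptop,1200,1
Groceries,Apple,1.5,10
Electronics,Mouse,25,2
Books,Python Guide,45,3
Electronics,Keyboard,75,invalid_qty
"""

total_revenue = 0.0
valid_rows = 0
skipped = []  # (line number, product, reason) for every rejected row

# start=2 because line 1 of the data is the header row
for line_no, row in enumerate(csv.DictReader(io.StringIO(data)), start=2):
    if row["Category"] != "Electronics":
        continue
    try:
        price = float(row["Price"])
        quantity = int(row["Quantity"])
    except ValueError as err:
        skipped.append((line_no, row["Product"], str(err)))
        continue
    total_revenue += price * quantity
    valid_rows += 1

print(f"Total Revenue: ${total_revenue:.2f}")
if valid_rows:
    print(f"Average Order Value: ${total_revenue / valid_rows:.2f}")
for line_no, product, reason in skipped:
    print(f"Skipped line {line_no} ({product}): {reason}")
```

With this data, the sketch reports the same totals ($1250.00 and $625.00). It also reports one skipped line: the Keyboard row on line 6, rejected because `invalid_qty` isn’t an integer.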

It’s not just smarter AI; it’s accountable intelligence.

PALs bridge a crucial gap:

They think like a developer, but explain like a teacher.

Common Challenges with PALs

Like every innovation, Program-Aided Language Models (PALs) come with trade-offs. Understanding their limitations helps us see their potential more clearly.

1. Security Risks

PALs execute code generated by AI, which introduces security concerns. If not sandboxed, that code could perform unsafe operations (such as deleting files or reading secrets) or open the door to code injection. The standard mitigation is to run all executions inside secure, restricted environments like Docker containers or isolated runtimes.
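Alongside a real sandbox, a cheap first line of defense is to screen the generated code before running it. The sketch below uses Python’s standard `ast` module to reject three things:

- imports outside a small allow-list
- a few dangerous built-ins
- dunder attribute access

Both lists are illustrative assumptions. Static checks like this are easy to bypass, so they complement container isolation rather than replace it.

```python
import ast

# Illustrative policy (assumptions; tune for your use case).
ALLOWED_MODULES = {"math", "statistics", "csv", "io"}
BLOCKED_NAMES = {"eval", "exec", "compile", "open", "__import__", "input",
                 "globals", "locals", "getattr", "setattr", "delattr"}


def looks_safe(code: str) -> bool:
    """Static screen for generated code. A first filter, NOT a sandbox."""
    try:
        tree = ast.parse(code)
    except SyntaxError:
        return False  # unparseable code is rejected outright
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            # `import a.b` is checked by its top-level package name.
            if any(alias.name.split(".")[0] not in ALLOWED_MODULES
                   for alias in node.names):
                return False
        elif isinstance(node, ast.ImportFrom):
            # Relative imports have module=None and are rejected.
            if (node.module or "").split(".")[0] not in ALLOWED_MODULES:
                return False
        elif isinstance(node, ast.Name) and node.id in BLOCKED_NAMES:
            return False
        elif isinstance(node, ast.Attribute) and node.attr.startswith("__"):
            return False  # blocks tricks like ().__class__.__subclasses__()
    return True


print(looks_safe("import csv\nprint(5 * 20 * 0.9)"))   # True
print(looks_safe("import os\nos.remove('sales.csv')"))  # False
print(looks_safe("open('secrets.txt').read()"))         # False
```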

2. Debugging Complexity

When PALs fail, it can be hard to pinpoint the cause. Sometimes the generated code crashes, which is easy to fix; other times, the logic itself is flawed. The latter requires better prompts, examples, or self-reflection loops to improve reasoning.
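The crash case is the easiest to automate: run the code, and if it raises, feed the error message back to the model and ask again. Here is a minimal sketch of such a retry loop. `stub_generate` is a hypothetical stand-in for an LLM call that makes a typo on its first try; with a real model, you would put the feedback into the next prompt.

This only catches crashes. Code that runs but uses the wrong formula passes straight through, which is why flawed logic is the harder problem.

```python
import contextlib
import io


def run_code(code: str) -> str:
    """Execute code and return what it printed (exec is NOT a sandbox)."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, {})
    return buffer.getvalue().strip()


def solve_with_retries(question, generate, max_attempts=3):
    """Ask `generate` for code; if it crashes, pass the error back and retry.

    Returns (printed_output, attempt_number) for the first program that runs.
    """
    feedback = None
    for attempt in range(1, max_attempts + 1):
        code = generate(question, feedback)
        try:
            return run_code(code), attempt
        except Exception as err:
            # Describe the crash so the next attempt can fix it.
            feedback = f"Your code raised {type(err).__name__}: {err}"
    raise RuntimeError(f"No working program after {max_attempts} attempts")


def stub_generate(question, feedback):
    """Hypothetical LLM stand-in: typo on the first try, fixed after feedback."""
    if feedback is None:
        return "speed = 180 / 3\nprint(sped)"  # NameError: 'sped'
    return "speed = 180 / 3\nprint(speed)"


print(solve_with_retries("180 miles in 3 hours: speed in mph?", stub_generate))
# → ('60.0', 2)
```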

3. Efficiency Issues

PALs often overcomplicate simple tasks. For instance, they might generate full Python scripts for basic arithmetic, which makes them slower and more resource-intensive. Some newer systems try to decide when computation is actually needed and when a direct answer will do.
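A simple way to avoid generating scripts for trivial inputs is to route them. If the question is already a bare arithmetic expression, evaluate it directly and skip code generation. The sketch below does that with a tiny evaluator built on Python’s `ast` module that only allows numbers, parentheses, and `+ - * /`. The routing rule and function names are hypothetical illustrations, not how any particular PAL system decides.

```python
import ast
import operator

# Only these operators count as "plain arithmetic" (no ** on purpose).
BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def eval_arithmetic(expr: str):
    """Evaluate numbers combined with + - * / and parentheses; else ValueError."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in BIN_OPS:
            return BIN_OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in UNARY_OPS:
            return UNARY_OPS[type(node.op)](walk(node.operand))
        raise ValueError(f"not plain arithmetic: {type(node).__name__}")
    return walk(ast.parse(expr, mode="eval"))  # SyntaxError if not an expression


def answer(question: str) -> str:
    """Hypothetical router: compute bare arithmetic directly, else defer to the PAL."""
    expr = question.replace("×", "*").replace("÷", "/")
    try:
        return str(eval_arithmetic(expr))
    except (SyntaxError, ValueError):
        return "route to full PAL (generate and execute code)"


print(answer("(13×4) + (52÷2)"))           # → 78.0
print(answer("What is 5 shirts at $20?"))  # → route to full PAL (generate and execute code)
```

The evaluator walks the syntax tree rather than calling `eval`, so anything other than plain arithmetic is rejected instead of executed. `**` is deliberately left out so an input like `9**9**9` can’t tie up the CPU.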

4. Prompt Sensitivity

PALs depend heavily on the quality of their prompt examples. Poorly designed prompts lead to buggy or inefficient code. The better your prompt, the better the logic it produces.
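Here, “examples” usually means few-shot demonstrations: a handful of question-and-program pairs placed before the new question so the model imitates their style. Below is a minimal sketch of assembling such a prompt. The instruction wording, the `Question:` / `# Python:` layout, and both worked examples are hypothetical; a PAL-style prompt can be laid out in many ways.

```python
# Hypothetical worked examples (question -> program) for the prompt.
EXAMPLES = [
    (
        "A shop sells 5 shirts at $20 each with a 10% discount. What is the total cost?",
        "shirts = 5\nprice = 20\ndiscount = 0.10\n"
        "total = shirts * price * (1 - discount)\nprint(total)",
    ),
    (
        "A train travels 180 miles in 3 hours. What is its average speed in mph?",
        "miles = 180\nhours = 3\nspeed = miles / hours\nprint(speed)",
    ),
]


def build_few_shot_prompt(question: str) -> str:
    """Instructions, then worked examples, then the new question left open."""
    parts = ["Solve each question by writing Python code that prints the answer.\n"]
    for example_question, program in EXAMPLES:
        parts.append(f"Question: {example_question}\n# Python:\n{program}\n")
    # End on an open slot so the model continues with code for the new question.
    parts.append(f"Question: {question}\n# Python:\n")
    return "\n".join(parts)


print(build_few_shot_prompt("What is (13 * 4) + (52 / 2)?"))
```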

In short, PALs aren’t flawless, but they’re transparent. When they make mistakes, we can see exactly where things went wrong, and that visibility is a massive leap forward for AI trust and accountability.

Why PALs Matter: The Shift from Guesswork to Ground Truth

Traditional AI often feels like a black box: it gives answers, but we never really know how it got there. PALs change that by making every step of reasoning visible and verifiable.

Instead of predicting what sounds right, PALs compute the answer from explicit steps. They show the logic, run it, and let you check it. This transparency is what turns artificial intelligence into accountable intelligence.

PALs also represent a major mindset shift. For the first time, AI is not just responding; it’s reasoning. It’s not just mimicking intelligence; it’s demonstrating it.

In industries like healthcare, finance, or education, where accuracy and trust are everything, PALs could become the standard for reliability. They don’t replace human logic; they reinforce it with evidence.

By moving from guesswork to ground truth, PALs redefine what it means for AI to “think.”

The Future of AI Reasoning

PALs are just the beginning of a much bigger transformation in how machines think. For the first time, we’re seeing AI move beyond pattern prediction and step into the realm of structured reasoning, where logic and learning coexist.

The next frontier of this evolution lies in neuro-symbolic AI: systems that combine the creativity of neural networks with the precision of symbolic logic. Neural networks excel at learning from data, while symbolic systems are built to reason, verify, and explain. Together, they form a hybrid intelligence: one that can both imagine and justify.

PALs are one of the first practical expressions of that idea. They take the neural model’s natural-language ability and pair it with the structured discipline of code, a symbolic layer that enforces logic and traceability. This combination doesn’t just make AI smarter; it makes it more trustworthy.

In the coming years, we can expect PALs to evolve into agents capable of multi-step reasoning, collaboration across tools, and real-time self-correction. Imagine an AI that not only writes a program but tests, refines, and explains its own process: a system that learns why it’s right or wrong.

That’s the real future of AI reasoning: models that don’t just perform intelligence but understand it. PALs are the bridge leading us there, from black-box intuition to glass-box reasoning.

Let’s See It in Action: Building a Simple PAL in Colab

To truly understand how PALs work, I decided to test one myself using LangChain and Gemini. Here’s a working Colab example that does exactly what a PAL is meant to do: think in code.

```python
# Assumes: pip install langchain-core langchain-google-genai (run in Google Colab)
import contextlib
import io
import re

from google.colab import userdata
from langchain_core.prompts import PromptTemplate
from langchain_google_genai import GoogleGenerativeAI

GOOGLE_API_KEY = userdata.get('GOOGLE_API_KEY')  # stored in Colab's Secrets panel

llm = GoogleGenerativeAI(
    model="gemini-2.0-flash",
    temperature=0,
    google_api_key=GOOGLE_API_KEY,
)

prompt_template = """
You are a Program-Aided Language Model (PAL).
Your job is to solve problems by writing Python code and returning only that code.

Question:
{question}

Return only valid Python code without markdown formatting, triple backticks, or the word 'python'.
Ensure the code prints the final numeric result using print().
"""

prompt = PromptTemplate(input_variables=["question"], template=prompt_template)

# Pipe the filled-in prompt into Gemini (replaces the deprecated LLMChain).
chain = prompt | llm

def execute_code(code_string):
    # Strip Markdown code fences (three backticks, optional "python") if the model adds them.
    cleaned = re.sub(r"`{3}(?:python)?", "", code_string).strip()
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            # A single globals dict lets functions in the generated code see its
            # top-level variables. Note: exec() is NOT a sandbox.
            exec(cleaned, {})
        result = buffer.getvalue().strip()
        return result if result else "No output"
    except Exception as e:
        return f"Execution error: {e}"

user_question = "If a train travels 180 miles in 3 hours, what is its average speed in km/hr?"
generated_code = chain.invoke({"question": user_question})

print("🔹 Generated Code:\n")
print(generated_code)
print("\n🔹 Result:\n")
print(execute_code(generated_code))
```

Output:

```text
🔹 Generated Code:

miles = 180
hours = 3
km_per_mile = 1.60934
speed_miles_per_hour = miles / hours
speed_km_per_hour = speed_miles_per_hour * km_per_mile
print(speed_km_per_hour)

🔹 Result:

96.5604
```

- Gemini interprets your question and reasons through it step by step. Instead of directly guessing the answer, it translates your natural-language question into a logical plan expressed as Python code. This is the “reasoning” stage of the PAL process.

- The LangChain prompt ensures structure and precision. It instructs Gemini to output clean, runnable Python: no markdown, no extra text. In other words, it guides how the model reasons and makes its output easy to execute and reproduce.

- The execute_code() function acts as the “interpreter.” This is where the magic happens: the generated program is executed, just like a calculator running your reasoning steps. This separation between the thinker (the LLM) and the doer (the interpreter) is what gives PALs their reliability. Keep in mind that exec() runs the code with full access to your Colab session, so it is not a sandbox.

- Finally, you get both the code and the result: transparent reasoning in action. You can read the exact logic that Gemini used, line by line, and verify how it arrived at the answer. This visibility transforms AI from a “black box” into a glass box: an intelligence that shows its work.

Why temperature=0

Setting the model’s temperature to 0 makes decoding as close to deterministic as possible. The model favors the most probable next token at each step instead of sampling randomly. In PALs, creativity isn’t useful; accuracy is. A temperature of 0 makes code generation more consistent from run to run. Hosted models like Gemini can still vary slightly between calls, though, and consistent code is not automatically correct code.
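To see why temperature has this effect, here is a small sketch of temperature-scaled softmax, the standard way sampling temperature is applied to a model’s raw token scores (logits). The three logits are made-up numbers. Dividing by a small temperature exaggerates the gap between scores, so almost all of the probability lands on the top token. A temperature of exactly 0 would divide by zero, which is why implementations typically treat it as plain greedy (argmax) decoding.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw token scores into probabilities, scaled by temperature (> 0)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (1.0, 0.5, 0.1):
    probs = softmax_with_temperature(logits, t)
    print(f"temperature={t}: {[round(p, 3) for p in probs]}")
```

As the temperature shrinks, the top token’s share climbs toward 1. That is why temperature 0 behaves like always picking the most likely token.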

Note on LangChain’s Official PAL Model

LangChain also offers an experimental implementation called PALChain, in the langchain_experimental package. It’s designed to automate this entire process (prompting, code generation, and execution) within a single object. However, it’s still in the experimental stage, so hands-on implementations like the example above are more flexible and stable for now.

Conclusion: The Transparent Revolution of AI

When I first stumbled upon Program-Aided Language Models, I realized something profound: AI doesn’t have to just predict answers; it can actually reason through them. For the first time, we’re watching machines not just think, but show how they think.

PALs remind us that intelligence isn’t just about being right; it’s about being understandable. They bridge the gap between the neural and the logical, between intuition and proof. And that transparency isn’t just technical; it’s philosophical. It builds trust.

Because now, when an AI gives an answer, we don’t have to take it on faith. We can see the reasoning, the logic, the steps and decide for ourselves whether it makes sense. That’s not artificial intelligence anymore. That’s accountable intelligence.

So maybe the future of AI isn’t about making it smarter than us; it’s about making it think with us. Program-Aided Language Models are the first step in that direction: a shift from mystery to clarity, from output to understanding, from prediction to reason.

This is the transparent revolution: AI that shows its work.

🔗 Connect with Me

📖 Blog by Naresh B. A.

👨‍💻 Aspiring Full Stack Developer | Passionate about Machine Learning and AI Innovation

🌐 Portfolio: [Naresh B A]

📫 Let’s connect on [LinkedIn] | GitHub: [Naresh B A]

💡 Thanks for reading! If you found this helpful, drop a like or share a comment; feedback keeps the learning alive. ❤️