How Self-Attention Actually Works (Simple Explanation)

Self-attention is one of the core ideas behind modern Transformer models such as BERT, GPT, and T5.

It allows a model to understand relationships between words in a sequence, regardless of where they appear.

Why Self-Attention?

Earlier models like RNNs and LSTMs processed words in order, making it difficult to learn long-range dependencies.

Self-attention solves this by allowing every word to look at every other word in the sentence at the same time.

Key Idea

Each word in a sentence is transformed into three vectors:

- Query (Q) – What the word is looking for

- Key (K) – What information the word exposes

- Value (V) – The actual information carried by the word

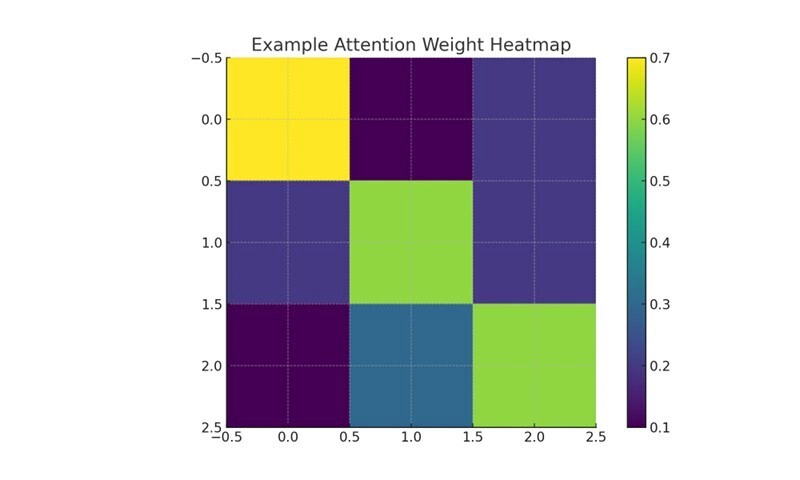

The model computes similarity scores between words using dot products of queries and keys.

These scores are then normalized (using softmax) to determine how much attention one word should pay to another.

Example

In the sentence: “The cat chased the mouse”,

- When focusing on the word “chased,” it may attend more to “cat” (the subject) and “mouse” (the object)

- Attention weights tell the model which words are relevant for understanding a given word

Multi-Head Attention

Instead of one set of Q, K, and V, the model uses multiple heads.

Each head focuses on different relationships (syntax, meaning, etc.).

Benefits of Self-Attention

- Learns long-range relationships easily

- Can process words in parallel (faster than RNNs)

- Works well for multilingual and domain-specific language tasks