Copilot is gaslighting developers and we’re all pretending it’s fine

Microsoft’s AI sidekick is writing more code than ever, but the devs maintaining it are quietly losing their sanity. Here’s why it’s not just a GitHub problem; it’s an industry symptom.

Every dev’s had that moment when Copilot confidently suggests a chunk of code that looks perfect, right up until it absolutely detonates your build. You sit there, blinking at your screen, wondering how you just got gaslit by an autocomplete.

It’s the same feeling you get when a senior dev reviews your PR and says, “Looks fine,” but you know it’s not fine. That’s Copilot energy.

The wild part? Microsoft engineers, the people building Copilot, are now living in that chaos too. Inside Redmond and across GitHub repos, AI-generated pull requests are quietly piling up. Some devs call it “helpful.” Others call it “Stockholm Syndrome as a Service.”

Meanwhile, dev forums, Reddit, and Hacker News have turned into Copilot group therapy. You’ll find everything from “It finished my CRUD in seconds” to “It hallucinated an entire API, and I merged it anyway.”

TL;DR: This isn’t a Copilot hate post. It’s a reality check. We’re watching a cultural shift happen in real time, where AI tools are outpacing our ability to understand what they create. Microsoft’s internal engineers are the canary in the coal mine. Their struggle with Copilot isn’t just about buggy code; it’s about how engineering itself is evolving (and breaking).

The honeymoon phase: when Copilot felt magical

The first time you try GitHub Copilot, it feels like discovering cheat codes for coding. You write half a function name, and boom: it finishes your thought like a telepathic junior dev who actually knows regex. For a few glorious weeks, you’re convinced you’ll never touch Stack Overflow again.

I remember that phase vividly. You start using Copilot to crank out boilerplate, generate mock data, even write tests. It nails 80% of what you need. You start flexing in stand-ups: “Yeah, I finished the API early.” You conveniently skip the part where Copilot imported the wrong version of Axios and wrote a promise chain straight out of 2015.

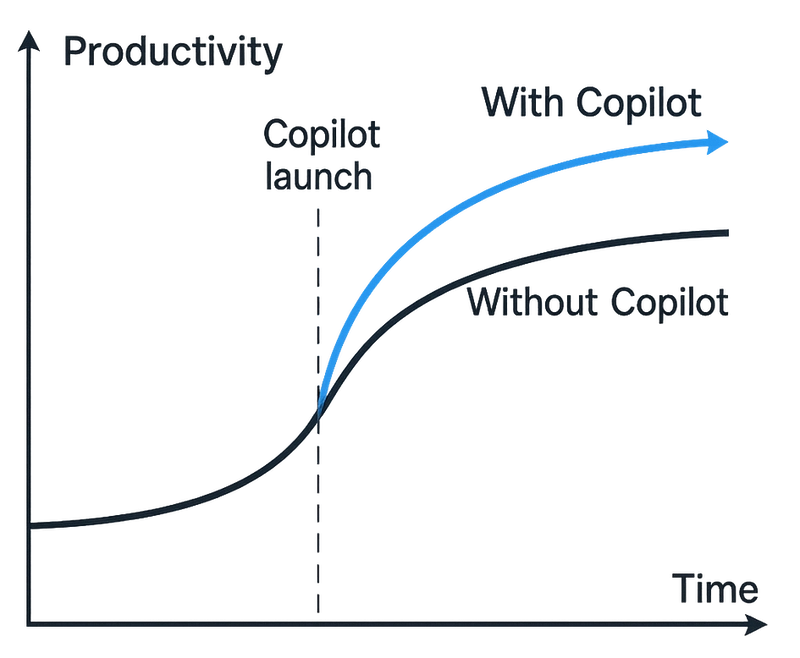

Microsoft leaned into that magic too. Their engineers showcased how Copilot “cut code review time” and “increased dev velocity.” It’s the kind of language that makes product managers salivate. The productivity graphs went up, the dopamine followed, and soon everyone was bragging about how Copilot “understood their style.”

Reddit and Hacker News threads mirrored the hype. Developers called it “the future of software.” Others joked it was “like hiring an intern who doesn’t sleep.” It really did feel like AI was finally the teammate we always wanted: fast, confident, and endlessly available.

But here’s the catch: speed creates trust. And trust, in engineering, is dangerous when it’s blind. We started hitting Tab like it was a muscle reflex. Copilot didn’t just autocomplete our code; it started autocompleting our thinking.

And once you stop thinking, you stop noticing. That’s where the chaos quietly began.

When autocomplete becomes autopilot

Here’s the thing: Copilot was never supposed to replace us. It was supposed to assist us. But somewhere between “autocomplete” and “autopilot,” we all got too comfortable letting it drive.

You’ve seen it: that split second when, mid-function, Copilot whispers a 10-line solution that looks right. You nod, hit Tab, and move on. Then you merge, deploy, and six hours later, QA finds out Copilot’s “perfect” code was just confidently wrong.

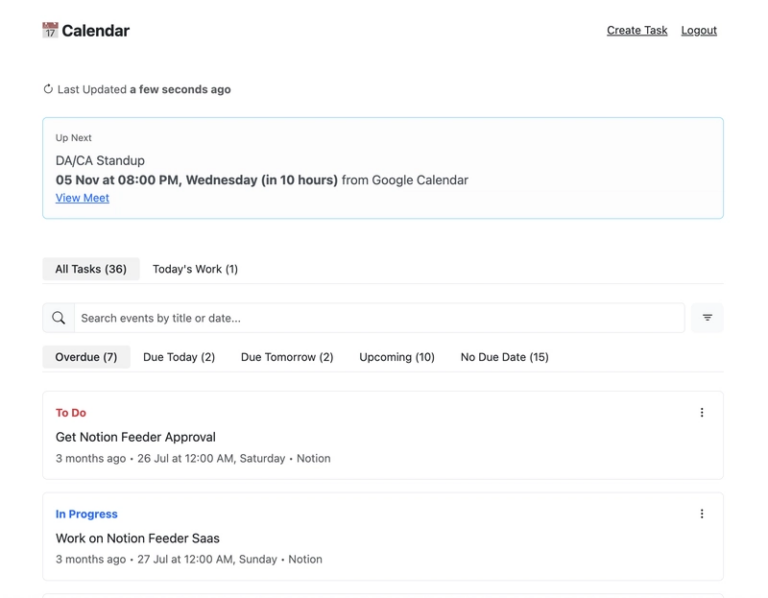

Now scale that up to enterprise. Inside Microsoft, teams reportedly found themselves reviewing PRs where 60–70% of the code came from Copilot suggestions. And sure, it works, until someone asks, “Wait, who actually wrote this?” Cue the silence.

Developers start trusting the pattern, not the logic. It’s overconfidence bias in code form. When AI gets it right enough times, you stop questioning it. You skip the test. You assume it’s fine. You assume you’re the one overthinking things. That’s the gaslight.

It’s not just anecdotal either. GitHub’s own blog posts mention “increased velocity” and “decreased mental load.” Translation: we’re coding faster, thinking less, and debugging more. There’s a reason Reddit threads titled “Copilot made me lazy” hit the front page.

It’s like flying on autopilot with a system that doesn’t understand turbulence.

AI doesn’t “know” context; it predicts it. And those predictions look stunningly accurate until they crash into edge cases.

Competitors like Cursor and Codeium are learning from this, adding self-review prompts, code diff explainers, and citation systems. But the culture is already shifting. The more Copilot handles, the less devs feel responsible for the code they ship.

And that’s how we end up debugging a stranger’s logic: written by an AI, approved by a human who didn’t read it.

Debugging AI code you didn’t write

If you’ve ever spent a night debugging a bug that “wasn’t yours,” congratulations: you’ve already experienced the future. Except now, that mystery code isn’t from a sleep-deprived teammate… it’s from your friendly neighborhood Copilot.

Here’s how it usually happens: you ship something fast, it works in dev, everyone claps in Slack. Then, three sprints later, an issue surfaces: a subtle data mismatch, a cache that never invalidates, or a function that returns undefined in the weirdest corner case. You trace it back, open the file, and realize: you didn’t write this. You just… pressed Tab.
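To make the “cache that never invalidates” case concrete, here’s a minimal, hypothetical Python sketch. The `USERS` dict, `display_name`, and `rename_user` are invented for illustration; they are not from any real codebase or from actual Copilot output. The code reads cleanly and passes a quick happy-path check, yet it keeps serving stale data after a write:

```python
from functools import lru_cache

# Hypothetical in-memory store standing in for a real database.
USERS: dict[int, str] = {1: "ada"}


@lru_cache(maxsize=None)
def display_name(user_id: int) -> str:
    # Reads cleanly, but the result is memoized forever:
    # nothing in rename_user() below invalidates it.
    return USERS[user_id].title()


def rename_user(user_id: int, new_name: str) -> None:
    # The "looks fine" version: updates the store, forgets the cache.
    USERS[user_id] = new_name


def rename_user_fixed(user_id: int, new_name: str) -> None:
    # The boring fix: clear the memoized lookups after every write.
    USERS[user_id] = new_name
    display_name.cache_clear()


if __name__ == "__main__":
    print(display_name(1))  # Ada
    rename_user(1, "grace")
    print(display_name(1))  # still Ada: the stale cached value
    rename_user_fixed(1, "grace")
    print(display_name(1))  # Grace
```

The fix is one unglamorous line, `display_name.cache_clear()`. That is exactly the kind of line that slips through when you accepted a function instead of writing it.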

It’s a surreal feeling: debugging AI-generated logic that technically came from your account. You find elegant function names and clever abstractions, but the moment you step through the code, it’s like reading an alien language that just happens to be syntactically correct.

Copilot writes like a senior dev: structured, confident, maybe even poetic. But it fails like a junior who didn’t test a thing.

Researchers at Microsoft published a paper last year, “The False Sense of Security in AI Pair Programming,” showing that developers reviewing AI-generated code missed 40% more bugs than those reviewing human-written code. Why? Because AI code “looks clean.” And clean code is seductive.

There’s also what I call responsibility diffusion:

- Devs assume Copilot’s suggestions are correct.

- Copilot assumes devs will verify them.

- Nobody actually does.

One Hacker News comment summed it up perfectly:

“Copilot saves me 30 minutes writing code and costs me 2 hours debugging it.”

That’s the new equilibrium: productivity on paper, chaos in production.

AI didn’t eliminate grunt work; it just moved it downstream.

So when something breaks, you’re not debugging your logic anymore; you’re debugging a model’s assumptions about your logic.

Dev culture vs AI-driven deadlines

The real Copilot crisis isn’t code quality; it’s culture. Somewhere along the line, management saw “AI productivity” dashboards and decided engineers could magically double their velocity. Spoiler: we didn’t. We just doubled our review backlog.

You can see the ripple effects across teams. PMs love AI-generated commits; they look impressive on graphs. More commits, more progress, right? Except half of those “lines of code” are later rolled back because no one actually understood them.

It’s like watching someone floor a Tesla on autopilot and brag about how fast they’re going, ignoring the fact that they’re headed straight into a wall of merge conflicts.

Developers feel it most in reviews. PRs that used to be 200 lines of clean logic are now 700 lines of Copilot fan fiction. And when you try to leave a comment like, “Why does this exist?”, your teammate shrugs: “It’s what Copilot suggested.”

The result? Code review fatigue.

Everything looks syntactically perfect but semantically cursed. Reviewing AI code feels like grading an essay that ChatGPT wrote about you: technically correct, emotionally hollow.

This shift changes team dynamics, too. Junior devs learn less because they’re following AI patterns instead of understanding fundamentals. Senior devs spend more time cleaning up invisible messes. And managers think it’s all progress because the metrics look shiny.

Microsoft’s internal dev chats (the ones that occasionally leak onto Reddit) show a weird mix of awe and exhaustion. Some engineers swear Copilot cuts meetings and boilerplate; others say it erodes craftsmanship. Both are right.

Because here’s the uncomfortable truth: AI didn’t change what we build; it changed how we think about building.

And when velocity becomes vanity, quality quietly dies.

What’s next: coexistence or collapse?

By 2025, nearly every major IDE ships with an “AI mode.” What started as an optional plugin is now baked into the dev stack like linting or Git blame. You open VS Code, and it’s already whispering, “Want me to finish that function for you?” It’s not the future anymore. It’s the default.

But here’s the paradox: we’re writing more code than ever, and understanding less of it. The more Copilot evolves, the less connected we feel to the logic beneath our own commits. You can almost sense it: engineers aren’t shipping software anymore; they’re shipping suggestions.

So what’s the path forward? It’s not ditching AI; that ship’s sailed. It’s coexistence with discipline.

- Write code reviews like they matter again.

- Keep a “code journal” to remind yourself why decisions were made.

- Use AI as a sparring partner, not a crutch.

- Slow down once in a while and actually read the diff before merging.

And maybe, just maybe, stop bragging about 10x productivity if half of it goes to debugging Copilot’s “creative liberties.”

There’s an old-school mindset worth reviving here: craftsmanship. The quiet pride of code you fully understand. The joy of a function that passes its tests not because AI said so, but because you made it correct and elegant.

Mildly controversial take: the best AI engineer isn’t the one with the most automation; it’s the one who still writes boring manual tests.
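Here’s a minimal sketch of what “boring manual tests” can look like, using Python’s standard `unittest` module. The `page_count` helper is hypothetical. It stands in for the kind of one-liner an autocomplete might offer as `total_items // page_size`, which looks right and silently drops the last partial page. The tests are deliberately dull and aimed at the edges:

```python
import unittest


def page_count(total_items: int, page_size: int) -> int:
    """Return how many pages are needed to show total_items, page_size per page."""
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    # Ceiling division. The plausible-looking `total_items // page_size`
    # would return 2 for 21 items at 10 per page, losing the last page.
    return -(-total_items // page_size)


class PageCountTests(unittest.TestCase):
    # Boring on purpose: the exact multiple, the partial page,
    # the empty case, and the invalid input.
    def test_exact_multiple(self):
        self.assertEqual(page_count(20, 10), 2)

    def test_partial_last_page(self):
        self.assertEqual(page_count(21, 10), 3)

    def test_zero_items(self):
        self.assertEqual(page_count(0, 10), 0)

    def test_invalid_page_size(self):
        with self.assertRaises(ValueError):
            page_count(5, 0)
```

Save it as a module whose name starts with `test` and run `python -m unittest` in that directory. None of these cases is clever, and that’s the point: they pin down the corner cases a confident suggestion skips.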

Soon, Copilot will review Copilot. Multi-agent IDEs are already experimenting with self-verification loops. But if we’re not careful, we’ll end up in a recursive blame game where the machines argue over which AI caused the bug.

AI won’t replace engineers, but it might replace craftsmanship if we let it.

Helpful resources

If you want to dive deeper or fact-check some of the receipts behind this piece, here’s a short list worth bookmarking:

- GitHub Copilot official documentation: setup, configs, and team usage data.

- Reddit r/programming “Copilot horror stories” threads: real dev chaos, daily.

- Hacker News “AI code review burnout” threads: sharp takes from senior engineers.

- Devlink Studio AI workflow hygiene guide: how to use AI tools without losing your sanity.