Rethinking Playwright MCP: From Chat Prompts to Feature Files

I’ve been using vanilla Playwright for almost two years now, and when Playwright MCP was introduced, I was immediately fascinated. The idea of letting an LLM write and execute your tests just by chatting with it felt straight out of science fiction. I watched demo after demo, each one using a different AI-first client such as Cursor, Claude Desktop, or a VS Code extension to prompt the agent and run the test.

While the tech is undeniably impressive, I couldn’t shake the feeling that something wasn’t clicking for me.

💭 The Problem With Chat-Driven Testing

As much as I loved the concept, the idea of testing an application through a conversation felt impractical in real-world workflows. Test case creation requires structure, repeatability, and collaboration—not just creative phrasing. Having to rely on a custom IDE or chat agent to generate every test step felt limiting and hard to scale or automate in CI pipelines.

🧠 A Thought: Why Not Use Cucumber?

Cucumber.js has been around for a long time and allows us to write test cases in plain English using .feature files. The structure is clear, readable, and collaboration-friendly. So I thought: Why not bring Cucumber and Playwright MCP together?

Here’s the twist: In traditional Cucumber, we need step definition files with all the code logic behind each Given/When/Then. But with MCP, the step execution is now the LLM’s responsibility. So what if the feature file could just be the source of instructions?

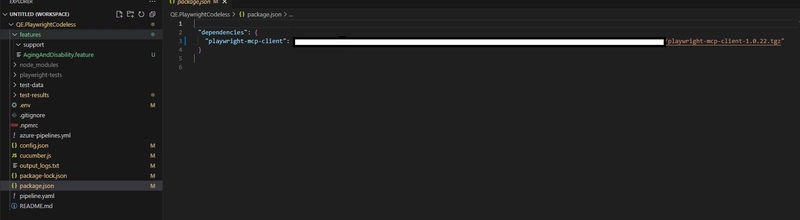

🧪 What I Built: A Custom MCP Client

To remove the dependency on IDEs and chat windows, I created a custom Playwright MCP client with support for:

- 🧾 Default .feature file structure

- ⚙️ Simple config for setting up your LLM (e.g., API key, provider)

- 🪝 A generic step definition that delegates steps to the local MCP server

- 🧪 Normal Cucumber test execution via CLI

- 📄 Auto-generated HTML reports (thanks to Cucumber)

- 🎭 Integration with Faker.js for generating mock data when needed

In this setup:

- You write your test in plain English (as if you were chatting).

- The client runs the test as a standard Cucumber test.

- The steps are sent to the local MCP server.

- The response is executed via Playwright.

- Reports are generated just like any other BDD test.

Since it’s a CLI-based tool, it runs the same way in a CI/CD pipeline. No IDEs required.
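The flow above could be sketched roughly as follows. This is a minimal, dependency-free illustration and not the actual client: `McpAgent`, `StepOutcome`, `makeGenericStep`, and `fakeAgent` are hypothetical names, and the fake agent only stands in for the LLM plus the local Playwright MCP server.

```typescript
// Hypothetical result of handing one step to the LLM + MCP server.
interface StepOutcome {
  ok: boolean;        // did the agent report the step as successful?
  transcript: string; // raw LLM/MCP exchange, kept for debugging
}

// Hypothetical interface for whatever talks to the LLM and MCP server.
interface McpAgent {
  run(instruction: string): Promise<StepOutcome>;
}

// Builds the single catch-all handler that replaces per-step definitions.
// It forwards the step text to the agent, records the transcript, and
// throws on failure so the test runner marks the step as failed.
function makeGenericStep(agent: McpAgent, log: string[]) {
  return async (stepText: string): Promise<void> => {
    const outcome = await agent.run(stepText);
    log.push(`${stepText} -> ${outcome.transcript}`);
    if (!outcome.ok) {
      throw new Error(`Step failed: ${stepText}`);
    }
  };
}

// Fake agent for illustration only: it "fails" any step mentioning "broken".
const fakeAgent: McpAgent = {
  async run(instruction) {
    const ok = !instruction.includes("broken");
    return { ok, transcript: ok ? "done" : "element not found" };
  },
};

// Demo: run two plain-English steps through the same handler.
async function demo(): Promise<string[]> {
  const log: string[] = [];
  const step = makeGenericStep(fakeAgent, log);
  await step("I open the login page");
  await step("I should see the dashboard");
  return log;
}

demo().then((log) => console.log(log));
```

With Cucumber.js, a handler like this could be registered once under a catch-all pattern such as `Given(/^(.*)$/, ...)`, so every Given/When/Then line reaches the same function. The transcript could then be added to the report from inside the step through the World's `attach` method. How the real client wires this up may well differ.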

❓ Am I Missing Something?

This approach feels like it bridges structure and innovation, but I’m still wondering—is it too good to be true? Could this be why I don’t see many people talking about such integrations? Are there deep limitations in MCP or LLM reliability that prevent this from going mainstream?

I’ve seen many tech YouTubers promoting Playwright MCP, but they’re mostly using chat-style workflows in enhanced IDEs. I haven’t seen much on structured, prompt-based testing outside the IDE.

💡 Final Thoughts

While there are clear stability and consistency issues with LLM-generated code and MCP’s maturity, I remain optimistic. The foundational idea—structured natural language tests with AI-backed execution—feels like a big step forward in how we think about test automation.

I’d love to hear what others think. Is this a viable pattern, or am I overlooking something critical?

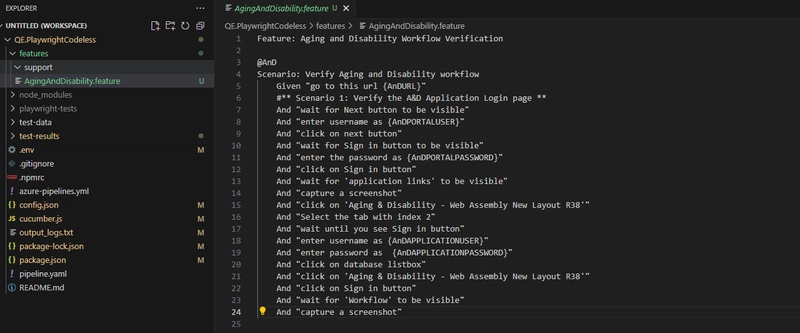

Some screenshots are below. I have masked some critical information since it is private to my organization.

- Create the test case in a .feature file. Anything inside {} is resolved on the fly from the .env file in the project root. Note that there are no step definitions under the support folder; the client handles that part dynamically.

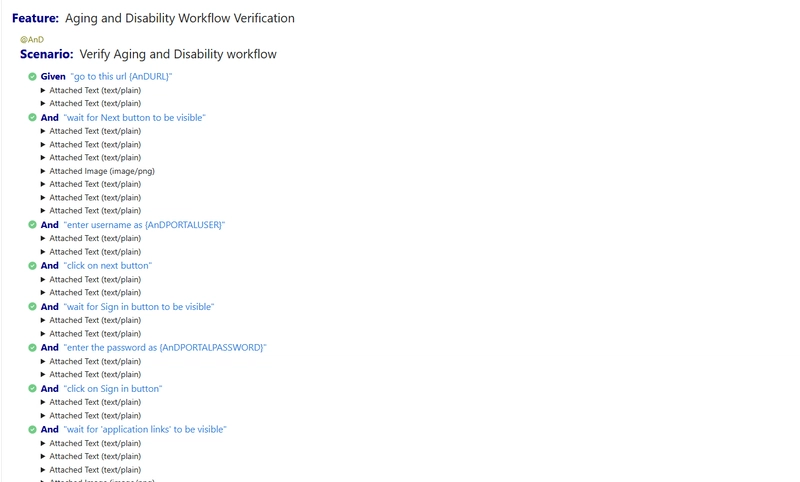
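The {} substitution described above might look something like this. It is only a sketch under assumptions: `resolvePlaceholders` is a hypothetical helper, the `{NAME}` token syntax is inferred from the description, and the variable names `APP_USER` and `BASE_URL` are made up for the example.

```typescript
// Replaces every {NAME} token in a step with the matching value from `env`.
// Throws if a token has no value, so a missing variable fails fast instead
// of sending a literal "{NAME}" to the LLM.
function resolvePlaceholders(
  step: string,
  env: Record<string, string | undefined>,
): string {
  return step.replace(/\{(\w+)\}/g, (_match, name: string) => {
    const value = env[name];
    if (value === undefined) {
      throw new Error(`No value for placeholder {${name}}`);
    }
    return value;
  });
}

// Example with an inline map; in a real run this would be process.env
// after the .env file has been loaded.
const resolved = resolvePlaceholders(
  "I log in as {APP_USER} on {BASE_URL}",
  { APP_USER: "qa-bot", BASE_URL: "https://example.test" },
);
console.log(resolved); // I log in as qa-bot on https://example.test
```

Resolving the tokens before the step text reaches the LLM keeps secrets and environment-specific values out of the feature file itself.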

- Execute the test case and you will see exactly what you would see while chatting with the IDE agent. On top of this, you can use helper utilities for test data management, mock test data generation, and so on. At the end you get the Cucumber HTML report. I wanted to capture the MCP and LLM interaction for debugging, so I attached that interaction to each test step.

Since Playwright MCP supports test code generation as output, I made it configurable to dump the generated Playwright JavaScript code once execution is done.

Let me know if something is wrong with the approach, since this all came out of limited knowledge and imagination. Of course, AI agents were used to translate the imagination into working code.