One-Stop Developer Guide to Prompt Engineering Across OpenAI, Anthropic, and Google


As developers building with LLMs (Large Language Models), we’re not just writing code—we’re crafting conversations, instructions, and data-driven requests that machines can interpret and execute. That’s where prompt engineering comes in.

Whether you’re integrating OpenAI’s GPT-4, experimenting with Claude by Anthropic, or deploying apps using Google’s Gemini models, understanding how to write effective prompts is essential to building reliable, scalable AI systems.

Why Prompt Engineering Still Matters

While the long-term goal is natural and intuitive interactions with AI, today’s systems require well-structured, role-based, and context-aware prompting to reduce hallucinations, boost accuracy, and ensure trust.

Below is a comprehensive set of official resources released by OpenAI, Anthropic, and Google—ideal for developers at any level:

✅ OpenAI: GPT-4.1 Prompt Engineering Guide

Official Guide: https://platform.openai.com/docs/guides/prompt-engineering

OpenAI’s guide focuses on formatting structure for ChatGPT and GPT-4.1, emphasizing the power of system messages and role assignment.

Key Developer Takeaways:

- Use `system` messages to define behavior and tone

- Structure multi-turn conversations using `user` and `assistant` roles

- Improve performance with few-shot examples, step-by-step instructions, and function calling

- Techniques to reduce drift, prevent hallucinations, and improve consistency in responses
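To make the role structure concrete, here is a minimal sketch of a chat-style message list: one system message, a couple of few-shot user/assistant pairs, then the real user query. The helper name `build_messages` and the example texts are assumptions made up for illustration, not taken from OpenAI's guide, and no API call is made.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def build_messages(
    system_prompt: str,
    examples: List[Tuple[str, str]],
    user_input: str,
) -> List[Message]:
    """Assemble system message, few-shot pairs, then the real query (hypothetical helper)."""
    messages: List[Message] = [{"role": "system", "content": system_prompt}]
    for example_input, example_output in examples:
        # Each few-shot example is a user turn followed by the ideal assistant turn.
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    # The actual question always goes last so the model answers it.
    messages.append({"role": "user", "content": user_input})
    return messages


messages = build_messages(
    system_prompt="You are a concise sentiment classifier. Reply with one word: positive or negative.",
    examples=[
        ("I love this library.", "positive"),
        ("The docs are a mess.", "negative"),
    ],
    user_input="Setup took five minutes and just worked.",
)

for message in messages:
    print(f"{message['role']:>9}: {message['content']}")
```

A list in this shape is what you would typically pass as the conversation payload to a chat-style endpoint. Keeping the assembly in one function makes it easy to swap examples in and out when testing for drift.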

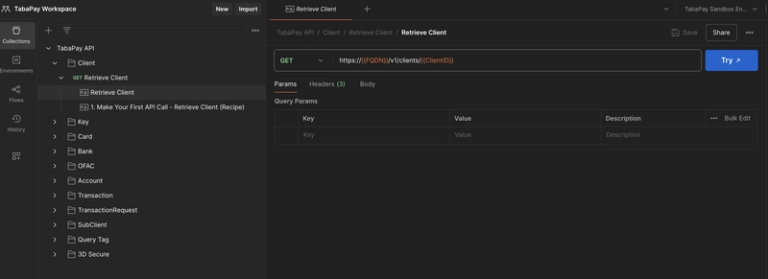

✅ Anthropic: Claude Prompt Engineering Interactive Course

GitHub: https://github.com/anthropics/prompt-eng-interactive-tutorial

Google Drive: https://drive.google.com/drive/folders/148YBrLaqPdI-EUI7DMXIAJ9ErrWAUPv0

One of the most developer-friendly, hands-on tutorials for Claude AI. Created by Anthropic, it provides real-world prompt patterns across experience levels.

Course Modules:

- Ch. 1–3: Direct prompting, clarity, role definition, formatting

- Ch. 4–7: Use of examples, step-by-step thinking, output formatting

- Ch. 8–9 + Appendix: Avoiding hallucinations, advanced reasoning, chaining prompts, API calls

Developer Highlights:

- JSON-based prompt formatting

- Domain-specific templates (legal, chat, finance, dev)

- Best practices for safety, fairness, reliability
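Prompt chaining, mentioned in the course appendix, can be sketched as two dependent calls where the first output feeds the second prompt. Everything below is an illustrative assumption: `chain_prompts`, `fake_model`, the tag delimiters, and the prompt wording are not taken from the course, and `fake_model` is a canned stand-in so the sketch runs offline without any SDK.

```python
from typing import Callable


def chain_prompts(document: str, call_model: Callable[[str], str]) -> str:
    """Run a two-step chain: extract facts, then summarize only those facts."""
    # Step 1: ask for facts, keeping the data clearly separated from the instruction.
    extract_prompt = (
        "Extract the key facts from the text inside <document> tags as a bulleted list.\n"
        f"<document>\n{document}\n</document>"
    )
    facts = call_model(extract_prompt)

    # Step 2: feed the first answer into a second, narrower prompt.
    summary_prompt = (
        "Using only the facts inside <facts> tags, write a two-sentence summary. "
        "If the facts are insufficient, say so instead of guessing.\n"
        f"<facts>\n{facts}\n</facts>"
    )
    return call_model(summary_prompt)


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model client; returns canned text."""
    if "<document>" in prompt:
        return "- The service went down at 09:00\n- A config rollback fixed it"
    return "The service failed at 09:00. A config rollback restored it."


result = chain_prompts("Incident report: ...", fake_model)
print(result)
```

In a real application you would replace `fake_model` with a function that wraps your provider's client. Passing the model call in as a parameter keeps the chain logic testable on its own.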

“That’s called prompt engineering. There’s an artistry to that.”

— Jensen Huang, CEO of NVIDIA

✅ Google: Prompt Engineering Guide for Gemini

Official Guide: https://cloud.google.com/vertex-ai/docs/generative-ai/prompt-engineering/best-practices

Google’s guide is aimed at production-level AI deployments using Vertex AI and Gemini. Best suited for scalable, enterprise-grade solutions.

Key Developer Takeaways:

- Few-shot vs zero-shot prompt comparison

- Chain-of-thought prompting for intermediate reasoning

- Grounding techniques to reduce hallucinations

- Structured outputs (JSON, Markdown, Tables, etc.)

- Support for multimodal prompting (text + image)
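The zero-shot versus few-shot distinction and the structured-output idea can be illustrated with plain string building and JSON validation. This is a minimal sketch under stated assumptions: the function names, the sentiment task, and the JSON shape are invented for illustration and do not come from Google's guide, and no Vertex AI call is made.

```python
import json
from typing import List, Tuple

INSTRUCTION = (
    'Classify the sentiment of the text as "positive" or "negative". '
    'Respond with JSON of the form {"sentiment": "..."}.'
)


def zero_shot_prompt(review: str) -> str:
    """Instruction plus the input, with no worked examples."""
    return f"{INSTRUCTION}\nReview: {review}"


def few_shot_prompt(review: str, examples: List[Tuple[str, str]]) -> str:
    """Same instruction, preceded by labeled examples in the target output format."""
    lines = [INSTRUCTION]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(json.dumps({"sentiment": label}))
    lines.append(f"Review: {review}")
    return "\n".join(lines)


def parse_sentiment(raw: str) -> str:
    """Validate a model reply against the expected JSON shape before using it."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on non-JSON text
    if not isinstance(data, dict) or data.get("sentiment") not in ("positive", "negative"):
        raise ValueError(f"unexpected reply shape: {raw!r}")
    return data["sentiment"]


examples = [("Fast and reliable.", "positive"), ("Crashes on startup.", "negative")]
print(zero_shot_prompt("Works as advertised."))
print(few_shot_prompt("Works as advertised.", examples))
print(parse_sentiment('{"sentiment": "positive"}'))
```

Validating the reply before it enters downstream code is the practical payoff of asking for structured output: a malformed answer fails loudly at the boundary instead of silently corrupting a workflow.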

“Prompting is a new programming paradigm.”

— Google DeepMind Prompt Engineering Team

🛠️ Use Case Snapshot

| Platform | Key Strengths | When to Use |
|---|---|---|
| OpenAI | System message control, function calling, tooling | Assistants, copilots, APIs, RAG setups |
| Claude | Long-form reasoning, ethical frameworks | Regulated environments, chat, compliance |
| Google | Multimodal support, enterprise-ready deployment | Scalable apps, Vertex AI, production LLMs |

🔧 Developer Applications

- AI assistants and smart chatbots

- Legal and financial document QA

- Conversational APIs and RAG systems

- Code generation and step-by-step explanations

- Structured data generation for workflows

- Secure and factual knowledge retrieval bots

#PromptEngineering #AIForDevelopers #OpenAI #ClaudeAI #GoogleAI #LLMs #GenAI #Anthropic #VertexAI #Gemini #GPT4 #KenanGain #AIEngineering #AIResources #DeveloperTools #AIProductDevelopment