From Monolith to Microservices: Lessons Learned Migrating the CSV Payments Processing project (Part One)

I’ve been building a CSV Payments Processing system, based on a real-world project I’ve worked on at Worldfirst.

https://github.com/mbarcia/CSV-Payments-PoC

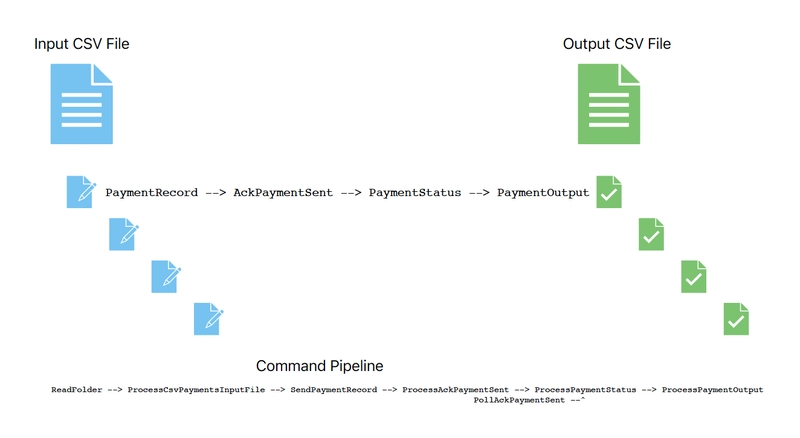

Originally, I set out only to write a better version using a “pipeline-oriented” design based on the Command pattern.

The idea was to use the project as a sandbox/playground, that could also serve as a proof of concept and to communicate new ideas to other people.

About the time I had finished what I originally set out to do, I started exploring microservices at Commonplace, along with a better automated testing strategy.

So, although I was happy with how the project achieved its original goals, as I was making progress learning all about microservices, I decided to evolve the project even further. First things first, I was interested in showing how easy automated testing was when the underlying design is good. Hence, I got it to a point of full meaningful testing coverage after a short time, enlisting the help of AI to write unit tests. Yeah I know, TDD right?

By then, I had also achieved a good understanding of microservices, not least because I have been managing the Kubernetes-based Commonplace platform, and decided to apply these learnings to the CSV project.

The big breakup

As a prerequisite, I had to decide how I was going to do the breakup of the system into smaller services. This merits a blog post on its own but, long story short, I decided to breakup the application more or less into

- An input streaming service (parse CSV files)

- An output streaming (write CSV results)

- A number of pipeline steps (send payment, polling, produce output).

- An orchestrator (read folder, orchestrate pipeline steps, handle errors), inspired by the Saga pattern.

Migrating from Springboot to Quarkus 3

Originally, the project started as a Springboot CLI app, classic. Again enlisting the help of ChatGPT, I managed to refactor a Springboot CLI application into a multi-module microservices architecture over the course of a few intense sessions.

This was no copy-paste exercise. Along the way, I uncovered a treasure trove of lessons in Quarkus configuration, build systems, dependency management, and inter-service communication. A part of me knew I had started my journey into The Rabbit Hole though, so let’s start from the beginning: Maven dependencies.

Build Mayhem: Maven vs. Gradle

I considered switching to Gradle for its composite build capabilities but stuck with Maven for simplicity and IntelliJ IDEA support. Quarkus works well with Maven multi-module projects, and IDEA recognizes and manages them cleanly—even when all modules live in a single Git repo.

Still, Maven wasn’t always smooth sailing. Issues included:

- Mojo execution errors due to conflicting plugin configurations

- Obsolete Java version warnings (

source 8 is obsolete) - Javadoc plugin failures due to undocumented interfaces

- Lombok not being picked up by the Maven compiler (even though it worked in the IDE)

Each problem required its own fix—from upgrading plugin versions to tweaking maven-compiler-plugin settings and suppressing irrelevant warnings.

Toward Microservices: Structuring and Sharing Code

The monolith eventually gave way to a structured microservice approach. Early on, I realised it was going to be far easier to share a “common” domain module as I wasn’t going to get things right on the first attempt. So, I created such common/shared module, which housed reusable domain classes and interfaces shared across services.

Here I faced a critical architectural decision: how should services communicate?

Thoughts on synchronous and asynchronous communication

Async communication seems to be the preferred method of communication between microservices. But, I see async as a higher-level tier wrapping or adapting the tier of sync APIs. I see a microservice taking gRPC and REST calls now, and responding to asynchronous events in the future. Naturally then, I decided to go with a synchronous model first.

The sync vs async topic is quite interesting. Adding a constellation of message queues as the “glue” between microservices needs justification in my opinion. SQS or Kafka are nice, but they are also complex.

If you take Project Loom’s virtual threads (=compute density), structured concurrency, Quarkus, Kubernetes high availability, and Mutiny streams (with back pressure and retry w/backoff), you have pretty much solved the issue of availability of distributed services running remotely on heterogeneous hardware capacity (and therefore, varying availability).

Is it too foolish to think that async comms might become irrelevant in the future, in cases like a greenfield internal project? If sync comms get much better, it might just become the preferred choice.

But as I said: the two comms models are not mutually exclusive in my humble opinion. For me, it was absolutely fine to do sync comms at the beginning, and leave async for phase 2 when the services are deemed robust enough. Of course, I expect asynchronous to require some re-factoring, but it should mostly be a “wrapper” of the existing stuff, plus the addition of the feature itself.

Very curious to hear comments on this topic!

Choosing gRPC Over REST

REST felt unnecessarily heavyweight for intra-service communication within the same organization, especially when services live on the same cluster or host. I settled on gRPC for its:

- Efficient binary protocol

- Built-in support for streaming

- Strong API contracts via

.protofiles - Mature integration with modern dev stacks and Kubernetes

It was clear that gRPC offered the best mix of performance and developer productivity—especially when paired with Quarkus’s excellent Protobuf tooling.

Later on, I’d learn about Vertex and its dual support for gRPC and REST. Again, another non mutually exclusive choice! Happy days!

Kicking things off: A CLI App with Quarkus and Picocli

The CLI tier shifted to a Quarkus-based CLI tool using @QuarkusMain and QuarkusApplication to bootstrap logic. Added some much needed conveniency features along the way though. Early challenges included:

- Passing command-line arguments (e.g.,

--csv-folder) via Picocli - Getting

@Injectto work properly within CLI apps - Managing configuration overrides for testing without relying solely on

@QuarkusTest

Eventually, I settled on combining Quarkus’s DI with @QuarkusMain and Picocli’s @CommandLine. It took several iterations to get the CLI runtime and testing environment to coexist without interfering.

Takeaways

- Quarkus apps are powerful, but require careful setup when combining dependency injection, Picocli, and testing.

- Multi-module Maven setups scale well, especially with good IDE support. But be ready to fight your tooling when things go wrong.

- Code sharing needs structure: create clean interfaces and use tools like Lombok and config mapping to reduce boilerplate.

- gRPC is ideal for internal microservice communication, offering the speed and contract-first development REST often lacks.

Coming up on Part Two

I was much impressed with Quarkus, its capabilities and ease of use. Having broken up the Springboot app into these new foundational modules, I felt excited and wanted to keep on going at a steady pace.

Next steps involve things like defining proper .proto APIs, building mapper classes (MapStruct), redefine concurrency processing, and more.

–

Cover photo by Sincerely Media at Unsplash