# The 85% of Success They Never Taught You to Code

You can ship solid work for months, close tickets, fix the flaky pipeline, and still feel invisible. Then someone else gets the project lead role, or your “quick analysis” turns into a cross-team fire drill, and you’re the one stuck explaining why the numbers changed overnight.

That moment stings because it exposes a quiet truth: most outcomes in tech don’t hinge on the cleverest query or the cleanest architecture. They hinge on clarity, on how you work with people, and on how you learn when the ground shifts. The hard skills matter, but they rarely decide who gets trusted with bigger scope.

This post is a practical map of that missing 85%. Not theory, not motivational fluff. These are habits you can practice this week, even if your calendar is already a mess.

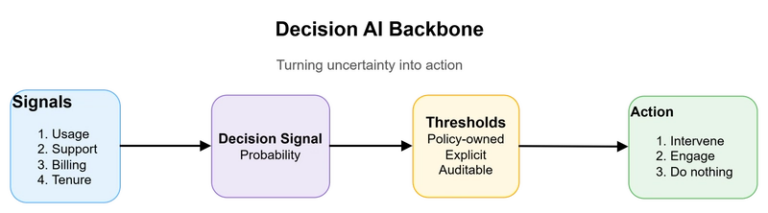

## The real work happens before you write code (clarity, context, and tradeoffs)

Strong analysts and engineers don’t “move fast” by typing faster. They move fast by shrinking uncertainty before the first commit or notebook cell. They ask the questions that prevent rework, spare stakeholders unpleasant surprises, and head off the classic ending: a technically correct output that no one uses.

In data work, the trap is extra sneaky because it’s easy to start early. You can pull tables, train a baseline model, or build a dashboard shell without anyone stopping you. But if the goal is fuzzy, you’re just producing motion. It feels productive until someone asks, “Why does this matter?” and you realize you never pinned down the decision the work was supposed to support.

This is also where many teams get confused about timelines. What people call “estimation” is often a scope and deadline negotiation with power dynamics, risk tolerance, and incomplete requirements baked in. If you don’t surface tradeoffs early, you end up “agreeing” to a date that was never realistic, then paying for it later with weekends, quality cuts, or quiet blame.

### Ask better questions so you build the right thing

Your first job isn’t to build. It’s to understand. That means asking for business context, constraints, and a shared definition of done, in plain language. If you need a quick reference, resources like this set of data science scoping questions are useful because they force clarity before implementation.

For analysts, “context” is not a nice-to-have. It’s the difference between a helpful model and a model that gets shipped, ignored, then quietly turned off.

Copy this mini checklist into Slack or a ticket the next time work arrives vague:

- Who is the user? What role will act on this output?

- What decision changes? What do they do differently when this is done?

- What metric moves? Revenue, cost, risk, time, compliance, customer churn?

- Why now? What breaks if we do nothing for 30 days?

- Constraints to confirm: data sources, freshness, definitions, platform versions, security rules, latency targets, and any “must-use” tools.

- Definition of done: what artifact ships (dashboard, table, model API, written memo), how it’s validated, and who signs off.
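If your team tracks intake in a ticket template or a small internal tool, the checklist can be made harder to skip. Below is a minimal sketch, assuming Python 3.9+; the `ScopeBrief` class and its field names are hypothetical and simply mirror the questions above. It reports which questions are still unanswered so you can paste them back into the thread.

```python
from dataclasses import dataclass, field, fields


@dataclass
class ScopeBrief:
    """Hypothetical intake record mirroring the scoping checklist."""
    user: str = ""                 # Who acts on this output?
    decision: str = ""             # What do they do differently when it's done?
    metric: str = ""               # Revenue, cost, risk, time, compliance, churn?
    why_now: str = ""              # What breaks if we wait 30 days?
    constraints: list[str] = field(default_factory=list)  # Sources, freshness, versions, security...
    definition_of_done: str = ""   # Artifact, validation method, and who signs off

    def open_questions(self) -> list[str]:
        """Return the names of checklist items that are still blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


brief = ScopeBrief(
    user="Regional sales managers",
    decision="Which accounts get a retention call each week",
    metric="Customer churn",
)
print(brief.open_questions())  # ['why_now', 'constraints', 'definition_of_done']
```

The point is not the class itself but the habit: work does not start while `open_questions()` is non-empty, or at least not without someone consciously accepting the gap.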

When you ask “why now?” you also protect your focus. It’s a polite filter that keeps urgent work from hijacking important work. It also signals senior behavior: you’re thinking about priorities, not just tasks.

### Estimate honestly, then negotiate scope like a pro

A forecast is not a promise. It’s a prediction based on what you know today. That sounds obvious, yet people still treat the first number they hear like a commitment carved in stone.

If you want more accurate timelines, stop estimating large blobs of work. Break it into smaller chunks, deliver in slices, and measure throughput. This works for analytics too: define a thin slice like “validate the target label and baseline lift,” or “ship a dashboard with three core metrics and known gaps.”
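Once you ship in slices and record how many land each week, that history can produce a range instead of a single date. The sketch below uses one common technique, a Monte Carlo forecast that replays randomly chosen past weeks until the remaining backlog is done; it is an illustration, not a method prescribed by any specific framework. The `forecast_weeks` function and the throughput numbers are hypothetical, and the percentile lookup is a simple approximation.

```python
import random


def forecast_weeks(weekly_throughput: list[int], remaining_slices: int,
                   trials: int = 10_000, seed: int = 7) -> dict[str, int]:
    """Monte Carlo forecast: resample past weekly throughput until the backlog is done."""
    if remaining_slices <= 0:
        return {"p50": 0, "p85": 0}
    if not any(weekly_throughput):
        raise ValueError("need at least one week with completed slices")
    rng = random.Random(seed)  # seeded so the forecast is reproducible
    outcomes = []
    for _ in range(trials):
        done, weeks = 0, 0
        while done < remaining_slices:
            done += rng.choice(weekly_throughput)  # replay a randomly chosen past week
            weeks += 1
        outcomes.append(weeks)
    outcomes.sort()
    # Approximate percentiles: about 50% of simulated runs finish by p50, 85% by p85.
    return {
        "p50": outcomes[int(0.50 * (trials - 1))],
        "p85": outcomes[int(0.85 * (trials - 1))],
    }


history = [2, 3, 1, 4, 2, 3]  # hypothetical slices shipped per week
print(forecast_weeks(history, remaining_slices=12))
```

Quoting the p85 figure alongside the p50 makes the uncertainty explicit, which is exactly the conversation the A/B/C options below are meant to start.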

For data science teams, frameworks like this step-by-step estimation approach are helpful because they separate exploration from build work, and they make risk visible instead of hidden.

When the timeline is aggressive, calm negotiation beats flat refusal. Offer options with explicit tradeoffs:

Option A: “We hit the date by shipping a baseline with clear caveats, then iterate.”

Option B: “We keep quality and include monitoring, but we need two more weeks.”

Option C: “We can keep the date and quality, but we cut scope (drop segmentation, reduce data sources, or delay automation).”

This keeps the conversation solutions-focused. It also makes it clear you’re not blocking. You’re steering risk.

## People skills that quietly decide who gets trusted, promoted, and remembered

Technical careers often pretend to be pure merit. But real teams run on trust. Trust is built through reliability, clear communication, and how you act when things go sideways.

This matters even more in hybrid setups. When your team can’t see you thinking at your desk, they only see your outputs and your messages. If your updates are vague, your decisions aren’t written down, and you vanish for hours during a production issue, you’re hard to rely on even if you’re brilliant.

Promotion decisions also reflect this. Many orgs promote the person leaders feel safe handing risk to. That’s usually the person who communicates clearly, stays steady under pressure, and helps others succeed. LinkedIn’s research on soft skills tied to promotions lines up with what most of us have watched happen in real time.


### Be easy to work with, even when things go wrong

“Easy to work with” doesn’t mean passive. It means you’re respectful, direct, and consistent. You can disagree without turning it into a personal fight. You can be firm about scope without acting like the gatekeeper of truth. You don’t dump blame on the nearest person when a dashboard breaks five minutes before the exec review.

The uncomfortable part: people remember attitude longer than they remember a clever solution. A clean model and a sharp analysis help, but your reputation often comes from how you made others feel during the messy parts.

Here are quick signals to avoid if you want trust to compound:

- Defensive replies like “It’s not my code” or “The data is bad” with no next step.

- Vague updates like “Working on it” instead of what’s blocked and when you’ll check back.

- Gatekeeping where you hoard knowledge, avoid documentation, or make yourself the only path to progress.

Instead, aim for steady behaviors: acknowledge problems fast, state what you know, state what you don’t, propose the next check-in, and follow through. Reliability beats intensity.

### Write things down, make yourself replaceable, and stop being the bottleneck

If you want to level up, stop being the hero. Hero mode feels good in the moment because you’re needed. Long-term, it makes the team fragile, and it caps your growth because you can’t take bigger scope while everything routes through you.

Light documentation is one of the easiest ways to raise team speed without extra hours. It also protects your future self. When someone asks “why is this metric defined this way?” three months later, your decision log is the difference between confidence and panic.

A few simple artifacts go a long way:

- A one-page decision log for key metric definitions and modeling choices.

- A short runbook for recurring tasks (refresh steps, backfills, incident triage).

- A PR or notebook template that forces context, assumptions, and validation notes.

- Small automation scripts for repetitive work (data checks, report generation, environment setup).

- Clear handoffs that list owners, dependencies, and what to do if things fail.
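As an example of the “small automation scripts” item, here is a minimal sketch of a pre-refresh data check. It assumes rows arrive as a list of dicts (for instance, from a query result); `check_rows`, the column names, and the zero-tolerance null threshold are hypothetical choices you would adapt to your own tables.

```python
from collections import Counter


def check_rows(rows: list[dict], key: str, required: list[str],
               max_null_rate: float = 0.0) -> list[str]:
    """Run basic quality checks and return a list of problems (empty means pass)."""
    if not rows:
        return ["no rows loaded"]
    problems = []
    # Duplicate keys usually mean the table's grain is not what we assumed.
    counts = Counter(row.get(key) for row in rows)
    dupes = sorted((k for k, n in counts.items() if n > 1), key=str)
    if dupes:
        problems.append(f"duplicate {key} values: {dupes}")
    # Null-rate checks on the columns a downstream report depends on.
    for col in required:
        rate = sum(row.get(col) is None for row in rows) / len(rows)
        if rate > max_null_rate:
            problems.append(f"{col}: {rate:.0%} null (limit {max_null_rate:.0%})")
    return problems


rows = [  # hypothetical extract of an orders table
    {"order_id": 1, "amount": 10.0, "region": "EU"},
    {"order_id": 2, "amount": None, "region": "US"},
    {"order_id": 2, "amount": 5.0, "region": None},
    {"order_id": 3, "amount": 7.5, "region": "EU"},
]
for problem in check_rows(rows, key="order_id", required=["amount", "region"]):
    print("FAIL:", problem)
```

Returning readable messages instead of raising on the first failure means one run tells you everything that is wrong, and the output can be pasted straight into a runbook step or an incident thread.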

If you need structure, guides like this runbook best practices overview can help you keep docs practical instead of turning them into a book no one reads.

Another benefit people underrate: written records help with forecasting and performance reviews. It’s hard to argue impact when all the proof is trapped in DMs and half-remembered meetings.

## The long game: a learning system that beats hustle and hype

Tools change fast. Titles change fast too. What doesn’t change fast is how value gets created: understanding the problem, choosing tradeoffs, and building systems people can maintain.

Many smart people stall because their learning becomes scattered: a bit of Python here, a new LLM framework there, a weekend on a cloud cert, and then nothing sticks. It’s like trying to fill a bucket with a hole in it. You stay busy, but nothing accumulates and you never build depth.

The answer isn’t more hustle. It’s a learning system: a small routine, a clear focus, and feedback so you don’t drift for months. If you want a sanity check on what matters this year, this 2026 data skills roadmap is a useful reminder that fundamentals still pay rent.

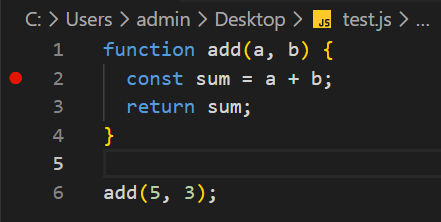

### Master fundamentals so new tools feel familiar

Fundamentals are the things that don’t change quickly. When you know them, new libraries feel like different syntax for the same old ideas.

For data analysts, data scientists, and programmers, a strong fundamentals set usually includes:

- SQL and data modeling basics (joins, grain, slowly changing dimensions, data quality patterns).

- Statistics basics (sampling, bias, uncertainty, and what p-values do and don’t say).

- Systems thinking (where latency comes from, what breaks in distributed jobs, why retries matter).

- Debugging and testing habits (unit tests for transforms, checks for nulls, contracts for schemas).

- Performance basics (query plans, indexing concepts, caching, partitioning).

- Architecture tradeoffs (batch vs. streaming, serving vs. offline, central vs. federated metrics).
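To make the testing habit concrete, here is a minimal sketch of a unit test for a transform that checks three things from the list: the output grain (one row per day), explicit null handling, and a schema contract. The `daily_revenue` transform, the `check_contract` helper, and `DAILY_REVENUE_SCHEMA` are hypothetical. The test is written in pytest style (a `test_`-prefixed function using plain `assert`), and the file also runs directly with `python`.

```python
from collections import defaultdict
from datetime import date

# Hypothetical output contract: column name -> expected Python type.
DAILY_REVENUE_SCHEMA = {"day": date, "orders": int, "revenue": float}


def daily_revenue(orders: list[dict]) -> list[dict]:
    """Aggregate order-level rows to one row per calendar day (the output grain)."""
    totals = defaultdict(lambda: {"orders": 0, "revenue": 0.0})
    for o in orders:
        if o["amount"] is None:  # skip nulls explicitly instead of crashing on addition
            continue
        bucket = totals[o["day"]]
        bucket["orders"] += 1
        bucket["revenue"] += float(o["amount"])
    return [{"day": d, **vals} for d, vals in sorted(totals.items())]


def check_contract(rows: list[dict], schema: dict) -> None:
    """Raise ValueError if any row has unexpected columns or wrong types."""
    for row in rows:
        if set(row) != set(schema):
            raise ValueError(f"unexpected columns: {sorted(row)}")
        for col, typ in schema.items():
            if not isinstance(row[col], typ):
                raise ValueError(f"{col} is {type(row[col]).__name__}, expected {typ.__name__}")


def test_daily_revenue_grain_and_contract():
    orders = [
        {"day": date(2024, 5, 1), "amount": 10.0},
        {"day": date(2024, 5, 1), "amount": 5.5},
        {"day": date(2024, 5, 2), "amount": None},
        {"day": date(2024, 5, 2), "amount": 3.0},
    ]
    out = daily_revenue(orders)
    days = [r["day"] for r in out]
    assert len(days) == len(set(days))  # grain: no duplicate days
    assert out[0]["orders"] == 2 and out[0]["revenue"] == 15.5
    assert out[1]["orders"] == 1        # the null amount was excluded
    check_contract(out, DAILY_REVENUE_SCHEMA)


if __name__ == "__main__":
    test_daily_revenue_grain_and_contract()
    print("all checks passed")
```

Keeping the contract as plain data makes it easy to review in a PR: a changed column name or type shows up as a one-line diff rather than a surprise in next week’s dashboard.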

The win is emotional as much as technical. When fundamentals are solid, you don’t panic every time a “new standard” shows up. You can evaluate it, map it to known patterns, and decide if it’s worth your time.

### Use focus, mentors, and routines to grow faster without burning out

Pick a clear 6-month focus area. Not a wish list. A lane. Examples: “become dangerous in SQL and dbt,” “own CI/CD and deployment for data products,” or “level up Python for production pipelines.”

Then set a small weekly block, 2 to 3 hours, and guard it like a meeting with your most demanding stakeholder. The point is consistency, not intensity.

Feedback cuts wasted effort. A mentor, a strong peer, or even a structured community review can keep you from building habits that look productive but don’t transfer to real work. Good mentors shorten the path by telling you what to ignore.

Don’t ignore health, either. Sleep and stable energy are not lifestyle content; they’re performance multipliers. If your brain is your main asset, protect it. Fewer energy crashes mean better judgment, cleaner work, and calmer communication when pressure spikes.

A simple system that works for most people is: plan, practice, ship, reflect. If you repeat that loop, you’ll grow without living in “always behind” mode.

## Conclusion

If coding were the whole job, promotions would be easy. But most career outcomes sit in the other work: clarity before code, people skills that build trust, and a learning system that keeps you current without chaos.

Next week, try this short plan:

- Start one task by asking “who is this for, and what decision changes?”

- Turn one vague request into options (A/B/C) with scope and tradeoffs.

- Write a one-page decision note or runbook for something you do often.

- Block 2 hours for a focused skill, then ship a small artifact from it.

- Ask one peer for feedback on your communication, not your code.

The goal isn’t to become a different person. It’s to build a reputation for reliability and clear thinking, the kind people bet on when stakes rise.