3 Mistakes I Made Shipping My AI MVP Too Fast — and How I Fixed Them

Last weekend, I launched Learnflow AI.

Voice-first tutoring, powered by Vapi.

Convex as a real-time backend.

Kinde for auth, access control, and billing.

The stack was solid.

The product shipped fast.

And within 48 hours of early access going live, users began signing up.

But they weren’t staying.

They weren’t upgrading.

Some never even used their first session.

That’s when it hit me:

MVP velocity is great. But if the system around it—pricing, onboarding, experience—isn’t built to support it, your product isn’t shipping. It’s leaking.

Here are 3 key mistakes I made while rushing my AI MVP to market—and the exact fixes I shipped afterward.

Mistake 1: Shipping Before Explaining the Product

What I Did

Learnflow AI was simple in my head:

- Users create custom AI tutors

- They speak with them in real-time voice sessions

- Free plan = 10 sessions

- Paid plan = more credits, more features

I assumed users would get it immediately.

So I skipped onboarding.

I skipped walkthroughs.

I just dropped them into the dashboard.

What Actually Happened

- Users created tutors… but didn’t know what to do next

- They didn’t realize each voice call cost a credit

- Some thought the AI would start talking from the dashboard

Confusion = churn.

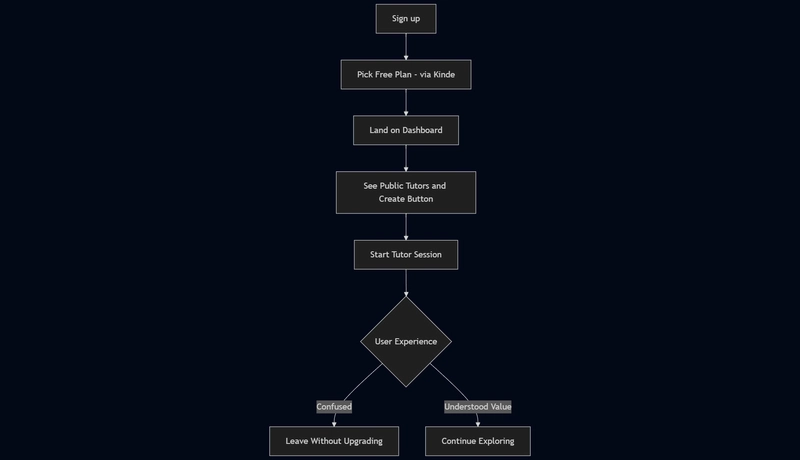

Real Behavior Flow (V1)

Fix: Contextual Onboarding + Interface Clarity

Instead of forcing a separate “tour”, I embedded the guidance into the actions themselves.

- Added a sticky credit counter to the dashboard:

<div> Credits remaining: {user.credits} </div>

- After session ends:

<div> You used 1 credit. <Link href="/upgrade">Upgrade for more</Link> </div>

- Rewrote the CTA on tutors from just “Start” to:

“Start Session (1 Credit)”

This change alone reduced support questions by 70%.
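All three tweaks boil down to turning a credit balance into copy, so it can help to keep that wording in one place. Here is a minimal sketch, assuming a flat cost of one credit per session as described above. The helper names (`formatCredits`, `startSessionLabel`, `postSessionMessage`) are hypothetical and not taken from the Learnflow AI codebase.

```typescript
// Hypothetical helpers: names and signatures are illustrative assumptions.
const CREDITS_PER_SESSION = 1; // each voice session costs one credit (from the article)

// Pluralize "Credit" so the UI never shows "1 Credits".
function formatCredits(n: number): string {
  return `${n} ${n === 1 ? "Credit" : "Credits"}`;
}

// Label for the tutor CTA, e.g. "Start Session (1 Credit)".
function startSessionLabel(cost: number = CREDITS_PER_SESSION): string {
  return `Start Session (${formatCredits(cost)})`;
}

// Message shown after a session ends, including what is left.
// The balance is clamped at zero so the copy never shows a negative number.
function postSessionMessage(
  creditsBefore: number,
  cost: number = CREDITS_PER_SESSION
): string {
  const remaining = Math.max(0, creditsBefore - cost);
  const used = formatCredits(cost).toLowerCase();
  const left = formatCredits(remaining).toLowerCase();
  return `You used ${used}. ${left} remaining.`;
}
```

Because the cost lives in a single constant, the CTA and the post-session message cannot drift apart if pricing changes later.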

Mistake 2: Assuming People Would Upgrade Without a Prompt

What I Did

Kinde made billing simple. I configured pricing plans in the hosted UI:

- Free = 10 credits

- Pro = 100 credits/month + priority tutors

Kinde automatically tagged users with a plan field in metadata.

But I didn’t make that plan meaningful inside the product UI.

There was a pricing link.

There was an upgrade button.

There was even a dedicated hosted portal by Kinde.

But all 3 were passive in my app.

Fix: Active Billing Context + Plan Awareness

Step 1: Inject Plan Awareness from Kinde

const user = await getUser();

const plan = user.user_metadata?.plan || "free";
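The `|| "free"` fallback handles a missing value, but any other string would still flow into the UI checks. A small sketch of narrowing the value first, assuming the two plans from the pricing setup are stored as `"free"` and `"pro"` (the exact stored strings are an assumption, and `normalizePlan` is a hypothetical helper):

```typescript
// Plan names follow the article's Free/Pro tiers; the stored strings are assumed.
type Plan = "free" | "pro";

// Narrow an untrusted metadata value to a known plan, defaulting to "free".
function normalizePlan(raw: unknown): Plan {
  if (typeof raw !== "string") return "free";
  const value = raw.trim().toLowerCase();
  return value === "pro" ? "pro" : "free";
}
```

It would be used as `const plan = normalizePlan(user.user_metadata?.plan);`, so later comparisons like `plan === 'free'` are checked by the compiler against a closed set of values.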

Step 2: Add Plan-Based UI States

{plan === 'free' && (

<div className="upgrade-banner">

You're on the Free Plan. Upgrade for more sessions.

<Link href="/billing">Upgrade now</Link>

</div>

)}

Step 3: Gate Features With Clear Messaging

if (plan === 'free' && user.credits <= 0) {

throw new Error("Out of credits. Upgrade to continue.");

}

Not just gated. Explained for clarity.

And most importantly: triggered at the moment of user intent.
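A thrown `Error` still has to be caught and translated into something the user sees. One way to keep the explanation attached to the gate is to return a result object instead. The sketch below uses the same condition as the check above; the `GateResult` type and `checkSessionGate` name are hypothetical, and the `/billing` link is the one used in the upgrade banner.

```typescript
// Hypothetical sketch: a gate that returns a reason the UI can render
// instead of throwing a bare Error.
type GateResult =
  | { allowed: true }
  | { allowed: false; message: string; upgradeHref: string };

function checkSessionGate(plan: string, credits: number): GateResult {
  // Same condition as the original check: only the free plan is blocked at zero.
  if (plan === "free" && credits <= 0) {
    return {
      allowed: false,
      message: "Out of credits. Upgrade to continue.",
      upgradeHref: "/billing",
    };
  }
  return { allowed: true };
}
```

The caller can then show `message` with a link to `upgradeHref` right where the user clicked, which is the "moment of intent" described above.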

Mistake 3: No Real-Time Feedback on Voice Session Flow

What I Did

Vapi is amazing for abstracting the voice AI loop. One REST call launches the entire flow:

- Starts call

- Transcribes audio

- Sends to GPT

- Responds via voice

But I didn’t show the user anything about what was happening behind the scenes.

What Actually Happened

- Some users clicked “Start” but said nothing, expecting the AI to begin

- Others spoke but didn’t know it was working

- One thought their mic was broken

Fix: Real-Time Visual Feedback Using Lottie + WebSocket Events

Example: Showing When the AI Is Speaking

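useEffect(() => {

const onStart = () => setIsSpeaking(true);

const onEnd = () => setIsSpeaking(false);

vapi.on('speech-start', onStart);

vapi.on('speech-end', onEnd);

// Clean up on unmount so the listeners are not registered twice

return () => { vapi.off('speech-start', onStart); vapi.off('speech-end', onEnd); };

}, []);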

{isSpeaking && (

<Lottie animationData={soundwave} />

)}

Transcript Feedback

vapi.on('message', (msg) => {

if (msg.type === 'transcript') {

setTranscript(msg.transcript)

}

});

This created instant feedback:

- When voice was picked up

- When the AI was responding

- What the transcript said

Sessions felt alive.
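The two snippets above each track one piece of state. As a rough sketch, the same events can drive a single view model, so the UI always knows whether the session is idle, listening, or speaking. This assumes `speech-start` and `speech-end` bracket the assistant's speech. The `call-start` and `call-end` events and the `VoiceEvent` shape are assumptions for illustration, not a description of the Vapi SDK's types.

```typescript
// Hypothetical sketch: event and field names beyond those shown in the
// article ("speech-start", "speech-end", "transcript") are assumptions.
type VoiceEvent =
  | { type: "call-start" }
  | { type: "speech-start" }
  | { type: "speech-end" }
  | { type: "transcript"; transcript: string }
  | { type: "call-end" };

type SessionStatus = "idle" | "listening" | "speaking";

interface SessionView {
  status: SessionStatus;
  transcript: string;
}

const initialView: SessionView = { status: "idle", transcript: "" };

// Pure reducer: given the current view and one event, return the next view.
function reduceSession(view: SessionView, event: VoiceEvent): SessionView {
  switch (event.type) {
    case "call-start":
      return { ...view, status: "listening" };
    case "speech-start":
      return { ...view, status: "speaking" };
    case "speech-end":
      return { ...view, status: "listening" };
    case "transcript":
      return { ...view, transcript: event.transcript };
    case "call-end":
      return { ...view, status: "idle" };
    default:
      return view;
  }
}
```

In a React component this could be wired up with `useReducer(reduceSession, initialView)`, dispatching from the event handlers. Keeping the reducer pure also makes the session flow easy to test without a live call.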

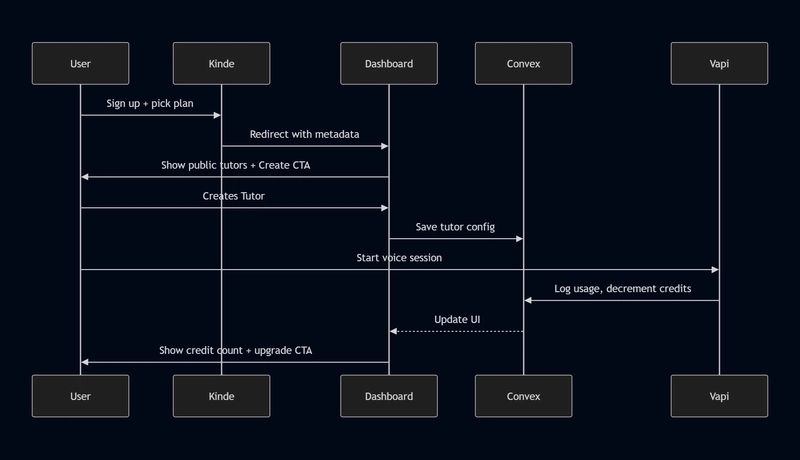

Final System Diagram

What I Learned

- Shipping fast is fine. But testing slow matters.

- Friction isn’t just bugs. It’s unclear expectations.

- Upgrade UX must be timely, not just available.

- Kinde simplifies billing, but the communication is your job.

- Voice apps need visual scaffolding. Silence feels broken.

What I’d Do Differently Next Time (Before Launch)

- Implement a full onboarding flow before sessions

- Dogfood the upgrade flow at least once

- Put credit logic front and center from day one

Tools Used

| Feature | Tool |
|---|---|
| Auth + Billing | Kinde |
| Realtime DB + Logic | Convex |
| Voice AI + Transcripts | Vapi |
| UI | Next.js + Shadcn |

Final Thoughts

Learnflow AI is better now. More structured. More transparent. More respectful of user time and expectations.

But it only got that way after I listened to the drop-offs, the confusion, and the people who ghosted after 1 session.

If you’re building an AI tool, don’t just focus on the AI.

Focus on:

- pricing clarity

- upgrade psychology

- emotional feedback loops

MVP is about minimum, yes. But also about viability.

And viability begins when users know exactly where they are, what they get, and why they should care.

Your turn…

Have you shipped too fast?

What did you learn from early users?

Let’s trade war stories in the comments.